Software Testing with LLMs: A Comprehensive Review

Utilization of LLMs in Software Testing

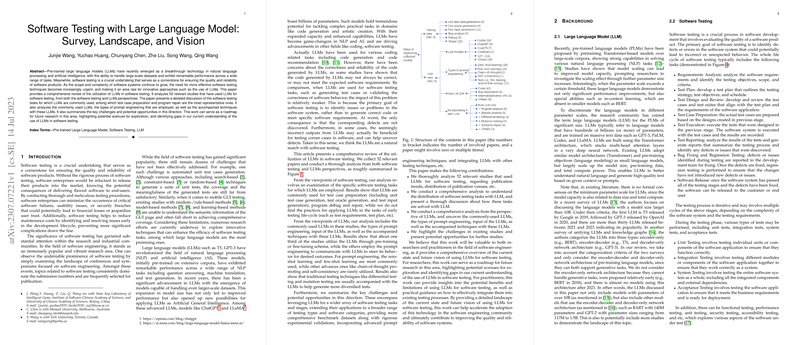

The integration of pre-trained LLMs into software testing is a promising approach, particularly as software systems grow more complex. The paper surveys current methodologies and advances in applying LLMs to software testing. It covers 102 relevant studies spanning a broad range of testing tasks, including test case preparation, program diagnostics, and bug repair.

Insights from Software Testing Tasks

Test Case Generation

One of the most prominent uses of LLMs is the generation of unit test cases. Automated unit test generation is a long-standing challenge in software testing, and LLMs offer a notable advantage through their ability to comprehend and process large codebases. They enable automated test creation that can improve coverage and test quality. The paper groups the surveyed approaches into two categories: pre-training or fine-tuning on domain-specific datasets, and prompt engineering techniques that steer the model toward the desired testing outcomes.

Program Repair

Another important application of LLMs is program repair, where they are used to debug and fix software defects. By combining pre-training for domain-specific adaptation with carefully engineered prompts, LLMs have shown significant potential for identifying and fixing errors in code. The approach has proven notably efficient at patching known vulnerabilities, which demonstrates the LLMs’ ability to handle complex debugging tasks.
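A common way to frame LLM-based repair is as a generate-and-validate loop: the model proposes candidate patches, and a test suite decides which one to keep. The sketch below illustrates only the validation half, under stated assumptions. The list `CANDIDATE_PATCHES` is a hypothetical stand-in for model output, the `median` function and its tests are invented for illustration, and none of it is taken from the surveyed studies.

```python
# Hypothetical candidate patches standing in for LLM output (no model is called).
CANDIDATE_PATCHES = [
    # Candidate 0: still wrong for even-length input.
    "def median(values):\n"
    "    ordered = sorted(values)\n"
    "    return ordered[(len(ordered) - 1) // 2]\n",
    # Candidate 1: averages the two middle elements for even-length input.
    "def median(values):\n"
    "    ordered = sorted(values)\n"
    "    mid = len(ordered) // 2\n"
    "    if len(ordered) % 2:\n"
    "        return ordered[mid]\n"
    "    return (ordered[mid - 1] + ordered[mid]) / 2\n",
]

# Illustrative test suite: (input, expected output) pairs.
TEST_CASES = [([1, 3, 2], 2), ([1, 2, 3, 4], 2.5), ([5], 5)]


def passes_tests(patch_source, tests):
    """Return True if the patched `median` passes every test case."""
    namespace = {}
    try:
        # A real pipeline would run untrusted code in a sandbox, not via exec.
        exec(patch_source, namespace)
        patched = namespace["median"]
        return all(patched(list(args)) == expected for args, expected in tests)
    except Exception:
        # A patch that fails to compile or crashes counts as invalid.
        return False


def first_valid_patch(candidates, tests):
    """Return the index of the first candidate that passes all tests, or None."""
    for index, source in enumerate(candidates):
        if passes_tests(source, tests):
            return index
    return None


if __name__ == "__main__":
    print(first_valid_patch(CANDIDATE_PATCHES, TEST_CASES))
```

A patch that passes the available tests is only plausible, not proven correct, so the quality of the test suite bounds the quality of the repair.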

Test Oracle Generation and Input Generation

Generating test oracles and systematic test inputs runs into long-standing difficulties such as the oracle problem, that is, deciding whether an observed output is correct. LLMs have shown promise here: they have been used in differential testing approaches and to generate metamorphic relations, both of which avoid the need for an explicit expected output. LLMs have also been applied to generate diverse test inputs for various types of software, demonstrating flexibility across application domains.
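To make the two oracle-free techniques concrete, here is a minimal sketch that assumes a sorting function as the system under test. The metamorphic relation (sorting any permutation of the input gives the same result) and the reference implementation are illustrative choices, not examples drawn from the paper. In an LLM-based workflow the model would propose such relations or inputs, and the checks below would then run unchanged.

```python
import random


def sort_under_test(items):
    """Implementation being tested (here simply Python's built-in sort)."""
    return sorted(items)


def reference_sort(items):
    """Independent reference implementation: a plain insertion sort."""
    result = []
    for item in items:
        position = 0
        while position < len(result) and result[position] <= item:
            position += 1
        result.insert(position, item)
    return result


def check_metamorphic_permutation(sort_fn, items, rng):
    """Metamorphic relation: permuting the input must not change the output."""
    shuffled = items[:]
    rng.shuffle(shuffled)
    return sort_fn(items) == sort_fn(shuffled)


def check_differential(impl_a, impl_b, items):
    """Differential check: two implementations must agree on the same input."""
    return impl_a(items) == impl_b(items)


def run_checks(trials=200, seed=0):
    """Run both checks on random integer lists; return False on first failure."""
    rng = random.Random(seed)
    for _ in range(trials):
        items = [rng.randint(-50, 50) for _ in range(rng.randint(0, 20))]
        if not check_metamorphic_permutation(sort_under_test, items, rng):
            return False
        if not check_differential(sort_under_test, reference_sort, items):
            return False
    return True


if __name__ == "__main__":
    print(run_checks())
```

Neither check needs to know the correct sorted output in advance, which is why both are useful when no explicit oracle is available.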

Utilization Aspects of LLMs

LLM Models in Use

A notable finding is the variety of LLMs in use, including ChatGPT, Codex, and CodeT5, among others, with the choice depending on the nature and requirements of the testing task. ChatGPT emerges as the most frequently used model, a prevalence attributed to its design, which is optimized for understanding natural language and code.

Prompt Engineering Techniques

The paper describes the prompt engineering strategies adopted to improve LLM performance on software testing tasks. These strategies range from zero-shot and few-shot learning to more sophisticated methods such as chain-of-thought prompting. Each technique helps steer the model's output toward more relevant and accurate test artifacts.

Challenges and Future Directions

Despite the substantial advances and successful applications of LLMs in software testing, several challenges remain. These include achieving high coverage in test case generation, addressing the test oracle problem, and establishing rigorous evaluation frameworks that measure LLM performance accurately. Moreover, applying LLMs to early-stage testing activities and non-functional testing, and integrating more advanced prompt engineering techniques, are avenues for future research.

Real-world Applications and Integration

The paper also sheds light on the challenges of applying LLMs to software testing in real-world settings, emphasizing the need for domain-specific fine-tuning and prompt engineering to meet industry-specific requirements. Furthermore, it suggests combining traditional testing techniques with LLM capabilities to improve testing efficacy and coverage.

Conclusion

In conclusion, the paper provides a comprehensive analysis of the use of LLMs in software testing, summarizing current practices, challenges, and future research opportunities. It underlines the potential of LLMs to revolutionize software testing methodologies, while calling attention to the need for further investigation and development before LLM capabilities can be fully leveraged in practical and diverse testing scenarios.