Overview of "A Comprehensive Overview of LLMs"

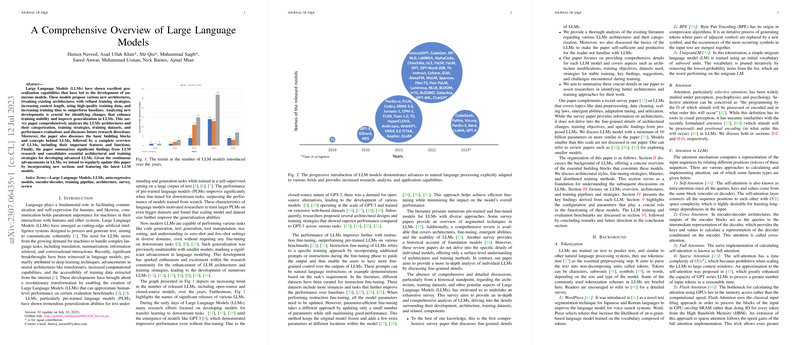

The paper "A Comprehensive Overview of LLMs" surveys developments and research directions in large language models (LLMs). The authors examine the advancements, challenges, and methodologies associated with these models, offering insights that can help researchers and practitioners understand the current landscape and future potential of LLMs.

Architectures and Training Strategies

The paper reviews the architectural choices and training strategies that have been instrumental in the evolution of LLMs. It contrasts encoder-only, decoder-only, and encoder-decoder architectures, noting the tasks each is typically suited to: natural language understanding (NLU), natural language generation (NLG), and sequence-to-sequence modeling. Notably, the encoder-decoder architecture is favored for its versatility in mode-switching and its effectiveness across a range of contexts.
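One concrete way to see how these architectures differ is through the attention masks they use. The minimal sketch below uses hypothetical helper names and plain Python lists in place of tensors. It builds the three basic patterns: bidirectional self-attention (encoder-only models and the encoder of an encoder-decoder model), causal self-attention (decoder-only models and the decoder's self-attention), and cross-attention from every decoder position to every encoder position. Padding masks and other details of real implementations are deliberately omitted.

```python
# Illustrative attention-mask patterns; mask[i][j] is True when position i may attend to j.
# Helper names are hypothetical; padding and batching are ignored for clarity.

def bidirectional_mask(n):
    # Encoder-only models (and the encoder half of encoder-decoder): every token sees every token.
    return [[True] * n for _ in range(n)]


def causal_mask(n):
    # Decoder-only models (and the decoder's self-attention): token i sees only tokens 0..i.
    return [[j <= i for j in range(n)] for i in range(n)]


def cross_attention_mask(n_target, n_source):
    # Encoder-decoder cross-attention: each target token sees the full encoded source.
    return [[True] * n_source for _ in range(n_target)]


def show(mask):
    # Render allowed positions as "1" and blocked positions as ".".
    for row in mask:
        print("".join("1" if allowed else "." for allowed in row))


if __name__ == "__main__":
    show(causal_mask(4))
```

Running the script prints a lower-triangular pattern: each row marks the earlier positions a decoder token is allowed to attend to, which is what prevents a decoder-only model from looking ahead during generation.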

Pre-trained and Instruction-tuned Models

A significant portion of the paper explores key pre-trained models, including T5, GPT-3, mT5, and others. These models have shown strong zero-shot and few-shot capabilities, often outperforming earlier models on broad benchmarks. The paper emphasizes instruction tuning, as in T0 and Flan, as a crucial step for improving zero-shot performance and generalization to unseen tasks, and notes that chain-of-thought (CoT) training unlocks reasoning capabilities.
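The difference between zero-shot, few-shot, and chain-of-thought prompting is easiest to see in the prompt text itself. The sketch below is an illustrative assumption: the `build_prompt` helper, the `Q:`/`A:` layout, and the "The answer is" phrasing are hypothetical and are not the templates used by T0, Flan, or any other specific model.

```python
# Hypothetical prompt builder contrasting zero-shot, few-shot, and CoT-style prompts.
# The Q:/A: format is an illustrative choice, not any model's official template.

def build_prompt(instruction, query, examples=(), chain_of_thought=False):
    """Assemble a plain-text prompt: no examples gives zero-shot, examples give few-shot."""
    parts = [instruction.strip()]
    for question, reasoning, answer in examples:
        # Each demonstration shows the model the expected input/output format.
        if chain_of_thought:
            # CoT demonstrations include the intermediate reasoning before the answer.
            parts.append(f"Q: {question}\nA: {reasoning} The answer is {answer}.")
        else:
            parts.append(f"Q: {question}\nA: {answer}")
    # The final query is left open for the model to complete.
    parts.append(f"Q: {query}\nA:")
    return "\n\n".join(parts)


if __name__ == "__main__":
    demos = [("Tom has 3 apples and buys 2 more. How many does he have?", "3 + 2 = 5.", "5")]
    print(build_prompt("Answer the math question.",
                       "Ann has 4 pens and loses 1. How many are left?",
                       demos, chain_of_thought=True))
```

Calling `build_prompt` with no examples yields a zero-shot prompt; passing demonstrations with `chain_of_thought=True` shows the model worked reasoning before each answer, which is the behavior CoT-style training and prompting aim to elicit.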

Insights into Fine-Tuning and Alignment

The paper's treatment of fine-tuning covers parameter-efficient methods such as adapter tuning, LoRA, and prompt tuning, which adapt a model by updating only a small set of added or selected parameters rather than retraining the full network. It also discusses aligning models with human preferences through techniques such as reinforcement learning from human feedback (RLHF) and semi-automated alignment, which are pivotal to producing ethical outputs that match human preferences.
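To make "without full retraining" concrete, here is a minimal pure-Python sketch of the LoRA idea: the pretrained weight W stays frozen and only a low-rank update of rank r is trained, scaled by alpha / r. The function names are hypothetical, and the code uses a row-vector convention, so the update appears as x A B with A of shape d_in × r and B of shape r × d_out. B starts at zero so the adapted layer initially behaves exactly like the frozen one. This illustrates the math only; it is not how any particular library implements LoRA.

```python
# Minimal LoRA sketch in plain Python (row-vector convention); names are hypothetical.

def matmul(a, b):
    # Matrix product of an (m x k) and a (k x n) list-of-lists.
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_forward(x, w, a, b, alpha):
    """y = x W + (alpha / r) * x A B, with W frozen and only A, B trainable."""
    r = len(a[0])                      # rank: A is (d_in x r), B is (r x d_out)
    base = matmul(x, w)                # output of the frozen pretrained weight
    update = matmul(matmul(x, a), b)   # low-rank path through A then B
    scale = alpha / r
    return [[base[i][j] + scale * update[i][j] for j in range(len(base[0]))]
            for i in range(len(base))]


def lora_param_counts(d_in, d_out, r):
    # Trainable parameters for a full update of W versus a rank-r LoRA update.
    return d_in * d_out, r * (d_in + d_out)


if __name__ == "__main__":
    x = [[1.0, 2.0]]
    w = [[1.0, 0.0], [0.0, 1.0]]   # frozen 2 x 2 weight
    a = [[0.5], [0.5]]             # 2 x 1 down-projection (rank 1)
    b = [[0.0, 0.0]]               # zero-initialized up-projection: adapter starts as a no-op
    print(lora_forward(x, w, a, b, alpha=1.0))
    print(lora_param_counts(4096, 4096, 8))
```

For a 4096 × 4096 weight with r = 8, `lora_param_counts` gives 16,777,216 parameters for a full update versus 65,536 for the LoRA factors, roughly 0.4% as many trainable parameters.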

Efficient Utilization and Multimodal Integration

The authors address the challenges of deploying LLMs, presenting efficiency methods such as quantization and pruning, and they also discuss multimodal integration. Combined with advances in efficient attention mechanisms and parameter-efficient fine-tuning, these methods allow models to be adapted for real-world applications with reduced computational overhead.
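As an illustration of what quantization does, the sketch below applies one simple scheme, symmetric per-tensor int8 quantization, where each weight is divided by a single scale (max |w| / 127) and rounded to an integer in [-127, 127]. This is an assumed example rather than the specific method of any paper discussed in the survey; practical LLM quantization typically uses finer-grained scales (per channel or per group) and special handling for outliers.

```python
# Symmetric per-tensor int8 quantization sketch (one simple scheme among many).

def quantize_int8(values):
    """Return integer codes in [-127, 127] and the float scale used to produce them."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero input
    # Round to nearest (Python's round breaks exact ties toward the even integer), then clamp.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    # Map the stored 8-bit integers back to approximate floating-point values.
    return [qi * scale for qi in q]


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.02, 1.0]
    codes, scale = quantize_int8(weights)
    print(codes, scale)
    print(dequantize_int8(codes, scale))
```

Each weight is then stored in 1 byte instead of the 4 bytes a float32 value needs, plus one shared scale per tensor, and the reconstruction error per value is at most half the scale.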

Evaluation and Datasets

The paper's review of evaluation datasets and methodologies provides a foundation for assessing LLM performance on tasks ranging from language understanding to mathematical reasoning. It also discusses how diverse training datasets are constructed and why they matter for language- and domain-specific needs.
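Many question-answering and math-reasoning benchmarks report some form of exact-match accuracy. The minimal sketch below assumes a deliberately light normalization (case-folding, whitespace collapsing, and dropping trailing full stops); the helper names and rules are hypothetical, and real benchmarks define their own answer-extraction and normalization procedures.

```python
# Exact-match accuracy sketch; the normalization rules are an illustrative assumption.

def normalize(answer):
    # Case-fold, collapse runs of whitespace, and drop trailing full stops.
    return " ".join(answer.lower().split()).rstrip(".")


def exact_match_accuracy(predictions, references):
    """Fraction of predictions that equal their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not predictions:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(predictions)


if __name__ == "__main__":
    preds = ["Paris.", "42", "blue"]
    refs = ["paris", "42", "red"]
    print(exact_match_accuracy(preds, refs))
```

Keeping the normalization minimal avoids surprises such as stripping the decimal point from "3.5", which would make it collide with "35" on numeric tasks.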

Challenges and Future Directions

The paper identifies several challenges facing LLMs, including computational costs, biases, overfitting, and limited interpretability. It calls for further research on model scalability, adversarial robustness, and multimodality. The authors propose refined control mechanisms and prompt engineering as ways to mitigate issues such as hallucination and bias.

Conclusion and Implications

By surveying both the progress and the open hurdles in LLM research, the paper serves as a comprehensive guide for developing improved models. It underscores the transformative potential of LLMs across domains and encourages ongoing research to address existing challenges and apply the strengths of these models more broadly.

The authors’ structured approach and detailed exploration of LLMs provide an invaluable resource, aiding both seasoned researchers and newcomers in navigating the complexities and opportunities within this rapidly evolving field.