MMBench: Evaluating Multi-modal Models

The paper "MMBench: Is Your Multi-modal Model an All-around Player?" presents a novel approach to benchmarking large vision-language models (LVLMs). Recent LVLMs exhibit significant capabilities in perception and reasoning, which makes assessing them comprehensively a challenge. Traditional benchmarks like VQAv2 and COCO Caption offer quantitative metrics but do not break performance down by individual ability. Subjective benchmarks such as OwlEval rely on human evaluation and therefore face scalability and bias issues. MMBench addresses these limitations with a more systematic and objective evaluation methodology.

Core Contributions

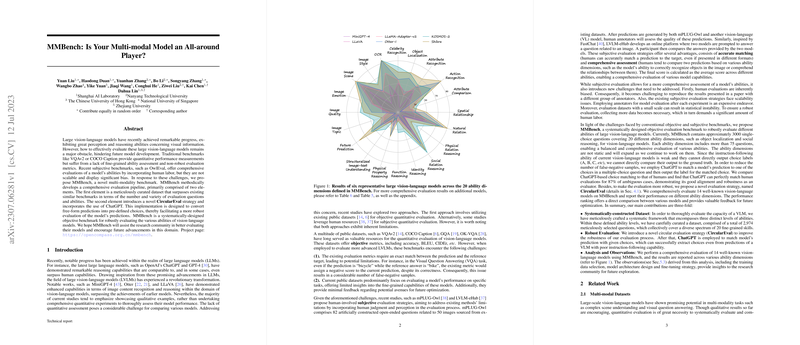

MMBench is primarily composed of two components: a curated dataset and a new evaluation strategy, CircularEval. The dataset surpasses existing benchmarks in the variety and number of evaluation questions, which span 20 ability dimensions. CircularEval is designed to yield more robust results: each multiple-choice question is presented to the model several times with the choices circularly shifted, and the model is credited only if it answers correctly on every pass. Because LVLMs often answer in free-form text, ChatGPT is used to map those outputs to the pre-defined choices. Together, these steps aim to score models on whether they actually identify the correct answer, rather than on answer position or output format.
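As the MMBench paper describes it, CircularEval asks each question once per rotation of the choice list and counts it as solved only when every pass is answered correctly. The following is a minimal sketch of that idea, not the authors' implementation. The names `circular_shifts`, `circular_eval`, and the `predict` callable (which stands in for a model and returns a letter label) are hypothetical, and the sketch assumes the choice texts are unique.

```python
from typing import Callable, List, Sequence

LABELS = "ABCDEFGH"  # letter labels assigned to choices by position


def circular_shifts(choices: Sequence[str]) -> List[List[str]]:
    """Return every rotation of the choice list, one per evaluation pass."""
    n = len(choices)
    return [list(choices[k:]) + list(choices[:k]) for k in range(n)]


def circular_eval(
    question: str,
    choices: Sequence[str],
    answer_index: int,
    predict: Callable[[str, List[str]], str],
) -> bool:
    """Credit the question only if the model is correct on all rotations.

    `predict` is a hypothetical stand-in for the model under test: it
    receives the question and the shifted choices and returns a label.
    Assumes choice texts are unique so the answer can be tracked by text.
    """
    correct_text = choices[answer_index]
    for shifted in circular_shifts(choices):
        expected = LABELS[shifted.index(correct_text)]
        if predict(question, shifted) != expected:
            return False  # a single wrong pass fails the whole question
    return True


if __name__ == "__main__":
    choices = ["cat", "dog", "bird", "fish"]

    # A model that always answers "A" cannot pass every rotation.
    def always_a(question: str, shifted: List[str]) -> str:
        return "A"

    # An oracle that always finds "bird" passes regardless of position.
    def oracle(question: str, shifted: List[str]) -> str:
        return LABELS[shifted.index("bird")]

    print(circular_eval("What animal is shown?", choices, 2, always_a))
    print(circular_eval("What animal is shown?", choices, 2, oracle))
```

A model that favors a fixed answer position can be right on some rotations, but it cannot be right on all of them, which is why requiring every pass to succeed is stricter than a single-pass evaluation.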

Numerical Results and Claims

The empirical evaluation on MMBench is extensive, covering 14 well-known vision-language models. Notably, including object localization data in the training set significantly enhances model performance, particularly for Kosmos-2 and Shikra. These models demonstrate superior performance across numerous L-2 abilities. In contrast, models like OpenFlamingo and MMGPT exhibit lower performance levels, underscoring the diverse strengths and weaknesses of current LVLMs.

Implications and Future Directions

This research has practical implications for multi-modal AI, providing a robust tool for the comprehensive assessment of model capabilities. The inclusion of abilities like fine-grained perception, logical reasoning, and social reasoning reflects the need for nuanced evaluation criteria that can better guide future model development. Moreover, the methods introduced with MMBench, especially CircularEval and the use of LLMs like ChatGPT for choice extraction, highlight potential avenues for enhancing evaluation protocols.
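Choice extraction means mapping a model's free-form answer onto one of the pre-defined option labels. The sketch below illustrates one way this could be organized: cheap rule-based matching first, then a fallback to an LLM. The rule-based first pass, the function names, and the `llm_fallback` callable are all assumptions made for illustration. No real ChatGPT API call is shown, and this is not the paper's actual prompt or code.

```python
import re
from typing import Callable, Optional, Sequence

LABELS = "ABCDEFGH"  # letter labels assigned to choices by position


def heuristic_match(output: str, choices: Sequence[str]) -> Optional[str]:
    """Try to read a choice label from free-form text without an LLM."""
    labels = LABELS[: len(choices)]
    text = output.strip()

    # Case 1: the output starts with a bare label such as "B", "(B)" or "B."
    m = re.match(rf"^\(?([{labels}])(?:[).:]|$)", text)
    if m:
        return m.group(1)

    # Case 2: exactly one choice text is mentioned in the output.
    hits = [lab for lab, c in zip(labels, choices) if c.lower() in text.lower()]
    if len(hits) == 1:
        return hits[0]
    return None  # ambiguous or no match


def extract_choice(
    output: str,
    choices: Sequence[str],
    llm_fallback: Optional[Callable[[str, Sequence[str]], Optional[str]]] = None,
) -> Optional[str]:
    """Return a label, deferring to a hypothetical LLM only when rules fail."""
    label = heuristic_match(output, choices)
    if label is None and llm_fallback is not None:
        # Assumption: llm_fallback wraps a call to an LLM such as ChatGPT.
        label = llm_fallback(output, choices)
    return label
```

Keeping the LLM behind a callable makes the extraction step easy to test offline, and it limits LLM calls to the outputs that simple rules cannot resolve.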

Future research can expand upon this benchmark by incorporating additional ability dimensions, adapting the evaluation method for few-shot learning, and exploring its application across other AI systems. The novel integration of robust evaluation strategies could also influence the development of more sophisticated training paradigms for LVLMs.

Conclusion

MMBench represents a significant stride toward more detailed and reliable evaluation of multi-modal models. By addressing the limitations of previous benchmarks and proposing a novel evaluation framework, it sets the stage for more rigorous and comprehensive assessment of complex AI systems in the domain of vision-language interaction.