Semantic-SAM: Segment and Recognize Anything at Any Granularity

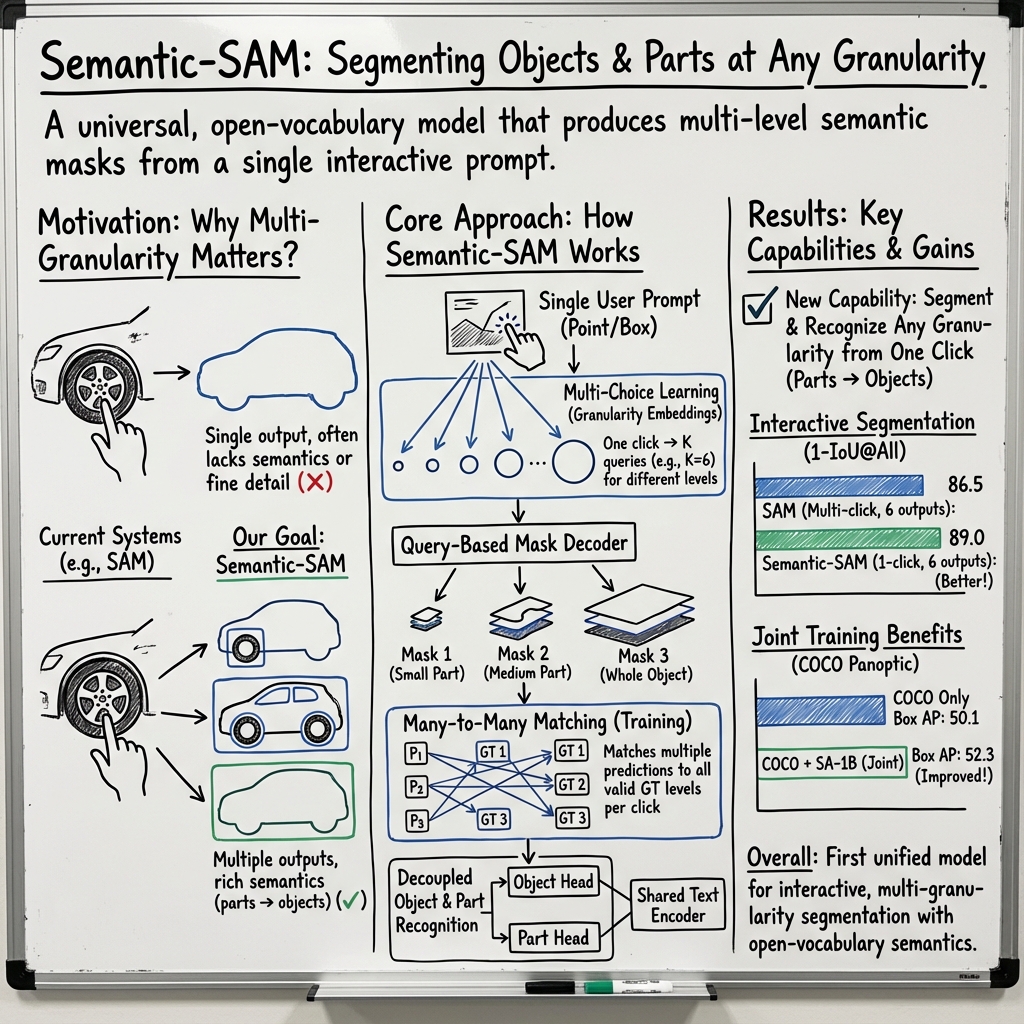

Abstract: In this paper, we introduce Semantic-SAM, a universal image segmentation model that can segment and recognize anything at any desired granularity. Our model offers two key advantages: semantic-awareness and granularity-abundance. To achieve semantic-awareness, we consolidate multiple datasets across three granularities and introduce decoupled classification for objects and parts. This allows our model to capture rich semantic information. For the multi-granularity capability, we propose a multi-choice learning scheme during training, enabling each click to generate masks at multiple levels that correspond to multiple ground-truth masks. Notably, this work represents the first attempt to jointly train a model on SA-1B, generic, and part segmentation datasets. Experimental results and visualizations demonstrate that our model successfully achieves semantic-awareness and granularity-abundance. Furthermore, combining SA-1B training with other segmentation tasks, such as panoptic and part segmentation, leads to performance improvements. We will provide code and a demo for further exploration and evaluation.

Explain it Like I'm 14

What is this paper about?

This paper introduces Semantic-SAM, a computer vision system that can “cut out” parts of an image (called segmentation) and also name what those parts are. The special thing about it is that it can do this at any level of detail—from a tiny part like a person’s head, to a whole object like a car, to an entire scene. With just a single click on the image, it can offer several good mask options at different sizes and name them (like “wheel,” “car,” or “road”).

What questions are the researchers trying to answer?

The authors focus on three simple questions:

- Can one model both find image regions and understand what they are (semantics), at the same time?

- Can one click from a user produce several useful answers, from small parts to whole objects (multi-granularity)?

- If we train on many different datasets—some about whole objects, some about parts, and some with lots of masks but no labels—will the model get better at everything?

How does the system work?

Think of image segmentation like coloring in a picture: the model needs to decide which pixels belong to what. Semantic-SAM improves this process in a few key ways:

- Mixing many types of data: The team combines seven datasets. Some have labels for whole objects (like “dog” or “car”), some have labels for parts (like “leg” or “wheel”), and one giant dataset (SA-1B) has tons of mask shapes without names. Training on all of these together helps the model learn both shape and meaning.

- One click, many answers: Usually, models try to pick just one best mask for a click. But a single click could reasonably refer to a small part (like an eye) or the whole object (like a face). So Semantic-SAM turns every click into several “queries,” each tuned to a different detail level (from small to large). It’s like asking: “Give me the small version, the medium version, and the large version of what you think I clicked.”

- Matching multiple guesses to multiple truths: During training, when the model makes several mask guesses for a click, the system pairs them with the several correct masks for that click (if they exist), using a matching step that gives each correct mask its own best-fitting guess. This is like grading a test that has several acceptable answers and rewarding the model for each correct one, not just one.

- Learning object names and part names separately: The model recognizes “objects” (like “car”) and “parts” (like “wheel”) with two separate classification heads that share one text encoder, which turns label names into embeddings. This helps it transfer “part” knowledge across different objects; for example, it can learn that “head” applies to humans, dogs, and many other animals.

- Prompts: The model accepts point and box prompts. A point click is turned into a tiny box so both prompts share the same format. The system then decodes image features to produce masks and labels for each detail level.
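The “one click, many answers” matching described above can be sketched in a few lines. This is a toy illustration, not the authors' implementation: masks are stored as sets of pixels, IoU is assumed to be the matching score (the paper's real matching cost may combine other terms), and the helper names `match_multi_choice` and `rect` are hypothetical. Because a single click yields only a handful of candidates, the sketch searches all one-to-one pairings exhaustively; at larger scale one would use the Hungarian algorithm (for example `scipy.optimize.linear_sum_assignment`), which reaches the same optimum.

```python
from itertools import permutations
from typing import FrozenSet, List, Tuple

Mask = FrozenSet[Tuple[int, int]]  # a mask stored as the set of (row, col) pixels it covers


def iou(a: Mask, b: Mask) -> float:
    """Intersection-over-union of two pixel sets."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def match_multi_choice(preds: List[Mask], gts: List[Mask]) -> List[Tuple[int, int]]:
    """Hypothetical helper: one-to-one pairing of predicted and ground-truth
    masks that maximizes total IoU; returns sorted (pred_idx, gt_idx) pairs.

    Exhaustive search over pairings; for the few masks per click it finds the
    same optimum as the Hungarian algorithm.
    """
    k, g = len(preds), len(gts)
    if k >= g:
        # Pick a distinct prediction for every ground-truth mask.
        candidates = [list(zip(perm, range(g))) for perm in permutations(range(k), g)]
    else:
        # Fewer predictions than targets: pick a distinct target for every prediction.
        candidates = [list(zip(range(k), perm)) for perm in permutations(range(g), k)]
    best = max(candidates, key=lambda pairs: sum(iou(preds[p], gts[t]) for p, t in pairs))
    return sorted(best)


def rect(rows: int, cols: int) -> Mask:
    """Hypothetical helper: a rows x cols rectangle anchored at pixel (0, 0)."""
    return frozenset((r, c) for r in range(rows) for c in range(cols))


# A click at pixel (1, 1) lies inside a small "part" and a large "object".
part, whole = rect(2, 2), rect(4, 4)
gts = [part, whole]
# Three predictions for the same click, from fine to coarse granularity.
preds = [frozenset({(1, 1)}), rect(2, 3), whole - {(3, 3)}]
print(match_multi_choice(preds, gts))  # [(1, 0), (2, 1)]
```

Here the medium guess is paired with the part and the coarse guess with the whole object, so both correct answers are rewarded; in this sketch the finest guess is left unmatched and would simply receive no mask target.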

In everyday terms: Semantic-SAM is like a super-smart photo selection tool. You click once, and it shows several clean cutouts from small detail to whole object, and it can tell you what each cutout is called.
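The decoupled object and part classification described in the list above can be illustrated with a toy sketch. Everything here is an assumption for illustration: the shared text encoder is replaced by a hypothetical one-hot lookup over a six-word vocabulary (`toy_text_encoder`), the outputs of the two heads are faked with a hypothetical `mix` helper, and scoring is cosine similarity against name embeddings. The `split_label` helper shows one plausible way a part label such as “dog: head” could be split into an object target and a part target, so that “head” is a single class shared by every object.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

# HYPOTHETICAL toy vocabulary; a real system would use a learned text encoder.
VOCAB = ["car", "dog", "person", "head", "wheel", "leg"]
OBJECTS = ["car", "dog", "person"]
PARTS = ["head", "wheel", "leg"]


def toy_text_encoder(name: str) -> List[float]:
    """Stand-in for the shared text encoder: one orthogonal one-hot vector per name."""
    return [1.0 if word == name else 0.0 for word in VOCAB]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mix(weights: Dict[str, float]) -> List[float]:
    """Fake a head output as a weighted blend of name embeddings."""
    out = [0.0] * len(VOCAB)
    for name, w in weights.items():
        out = [o + w * e for o, e in zip(out, toy_text_encoder(name))]
    return out


def split_label(label: str) -> Tuple[str, Optional[str]]:
    """'dog: head' -> ('dog', 'head'); 'dog' -> ('dog', None)."""
    obj, _, part = label.partition(":")
    return obj.strip(), (part.strip() or None)


def classify(obj_emb: Sequence[float], part_emb: Sequence[float],
             objects: List[str] = OBJECTS, parts: List[str] = PARTS) -> Tuple[str, str]:
    """Decoupled classification: the object-head output is scored only against
    object names and the part-head output only against part names, while both
    vocabularies are embedded by the same text encoder."""
    obj_scores = {n: cosine(obj_emb, toy_text_encoder(n)) for n in objects}
    part_scores = {n: cosine(part_emb, toy_text_encoder(n)) for n in parts}
    return max(obj_scores, key=obj_scores.get), max(part_scores, key=part_scores.get)


# Pretend the two heads produced embeddings that lean towards "dog" and "head".
obj_emb = mix({"dog": 0.8, "car": 0.3})
part_emb = mix({"head": 0.7, "leg": 0.2})
print(classify(obj_emb, part_emb))  # ('dog', 'head')
print(split_label("person: head"))  # ('person', 'head')
```

Keeping the part vocabulary separate from the object vocabulary is what lets “head” learned from one object carry over to others in this sketch; a mask-only sample (as in SA-1B) would simply have no label to split and would skip the classification targets.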

What did they find?

Here are the main takeaways, in plain language:

- Better one-click selections: Compared to SAM (a popular earlier model), Semantic-SAM usually gives more accurate results from a single click.

- More complete “levels” per click: It produces more diverse and higher-quality options (from small parts to large objects) with one click, and these options match the true variety seen in real images better than before.

- Naming things at multiple levels: It’s not just cutting out shapes—it’s also better at labeling both whole objects and their parts.

- Training on lots of different data helps: Adding the huge SA-1B dataset, whose masks come without category names, to the usual labeled datasets improved performance on other tasks (like detecting and segmenting objects on the COCO benchmark). For example, it boosted object detection and mask accuracy by around 1–2 points (a meaningful gain in this field).

- You don't need all the data to benefit: Most of the gain already appears when training on only a fraction of SA-1B, which keeps training practical and efficient.

Why this is important: It makes image editing, selection, and understanding more reliable and flexible. You get multiple good answers per click, instead of forcing one “best guess,” and the system actually understands what the regions are.

Why does this matter, and what could it lead to?

Because Semantic-SAM can segment and recognize anything at different detail levels, it can make image tools more helpful and easier to use:

- Photo and video editing: One click to select a small part (like a logo) or the whole object (like a car), with cleaner edges and better options.

- Design and creativity: Quickly swap or inpaint parts (“change the wheels,” “replace the sky”) based on precise selections.

- Education and AR: Label parts of animals, machines, or scenes, from tiny details to full objects.

- Robotics and self-driving: Understand scenes at different levels to make safer, more informed decisions.

- Faster dataset labeling: Give multiple correct cutout options automatically, saving human effort.

In short, Semantic-SAM brings us closer to universal, interactive image understanding: it follows your intent, gives several sensible choices, and knows what it’s looking at—from the smallest part to the whole thing.