Improving Automatic Parallel Training via Balanced Memory Workload Optimization

The paper "Improving Automatic Parallel Training via Balanced Memory Workload Optimization" introduces Galvatron-BMW, a system framework that automatically finds efficient ways to train Transformer models across multiple GPUs. Transformers deliver state-of-the-art performance in domains including natural language processing (NLP), computer vision (CV), and recommendation systems. Training them, however, is resource-intensive: large models exceed the compute and memory of a single GPU, so they must be spread across devices using carefully combined parallelism strategies that keep both computational and memory requirements under control.

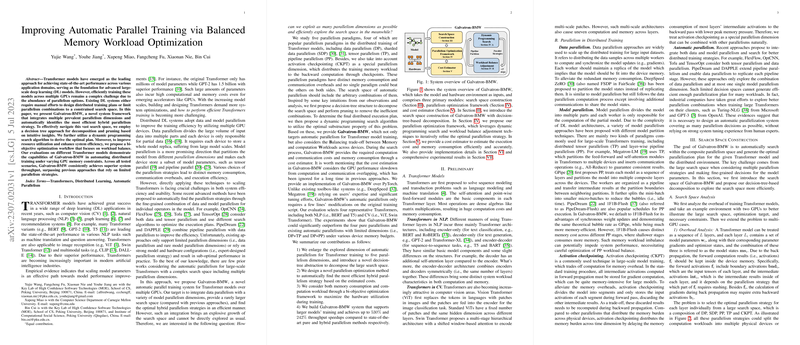

Overview of Galvatron-BMW

Galvatron-BMW automates the selection of efficient hybrid parallelism strategies for distributed training. Its search space covers four widely used parallelism dimensions, Data Parallelism (DP), Sharded Data Parallelism (SDP), Tensor Parallelism (TP), and Pipeline Parallelism (PP), together with Activation Checkpointing (CKPT), a memory-saving technique that recomputes activations during the backward pass instead of storing them. Combining these options to address both memory consumption and computational overhead produces a vast search space, which Galvatron-BMW decomposes in a structured way using a decision tree. It then applies dynamic programming to derive optimal parallelism plans, and couples this search with a bi-objective optimization workflow focused on workload balance, which improves resource utilization and overall system efficiency.
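A minimal Python sketch can make the decision-tree decomposition and the dynamic-programming search more concrete. Everything below is an illustrative assumption rather than Galvatron-BMW's actual code: the `Strategy` fields, the hypothetical helpers `decision_tree`, `layer_cost`, and `search`, the restriction to power-of-two degrees, and especially the toy cost model with its made-up overhead factors. The tree fixes the PP degree first, then splits each stage's GPUs between TP and DP, then chooses DP or SDP, then toggles CKPT. The search picks one strategy per layer to minimize total time under a per-GPU memory budget, ignoring the cost of switching strategies between adjacent layers and all pipeline effects.

```python
import math
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Strategy:
    pp: int     # pipeline-parallel degree (top level of the tree, shared by all layers)
    tp: int     # tensor-parallel degree inside one pipeline stage
    dp: int     # data-parallel degree inside one pipeline stage
    sdp: bool   # shard parameters/optimizer states across the DP group instead of replicating
    ckpt: bool  # activation checkpointing on/off


def power_of_two_divisors(n):
    """Powers of two dividing n, e.g. 8 -> [1, 2, 4, 8]."""
    out, d = [], 1
    while d <= n and n % d == 0:
        out.append(d)
        d *= 2
    return out


def decision_tree(num_gpus):
    """Enumerate candidate strategies level by level: PP -> TP/DP split -> DP vs SDP -> CKPT."""
    tree = {}
    for pp in power_of_two_divisors(num_gpus):
        per_stage = num_gpus // pp
        leaves = []
        for tp in power_of_two_divisors(per_stage):
            dp = per_stage // tp
            # Sharding only differs from plain DP when the DP group has more than one GPU.
            sdp_options = (False, True) if dp > 1 else (False,)
            for sdp, ckpt in product(sdp_options, (False, True)):
                leaves.append(Strategy(pp, tp, dp, sdp, ckpt))
        tree[pp] = leaves
    return tree


def layer_cost(s, compute, params, acts):
    """Toy (time, memory) model for one layer; an assumption, not the paper's cost model."""
    time = compute / (s.tp * s.dp)
    if s.tp > 1:
        time *= 1.1   # assumed TP communication overhead
    if s.sdp:
        time *= 1.05  # assumed extra gather/scatter traffic for sharding
    if s.ckpt:
        time *= 1.33  # assumed cost of recomputing the forward pass
    mem = params / s.tp / (s.dp if s.sdp else 1)
    # Activations: batch split over DP, tensors split over TP (a simplification).
    mem += acts / (s.tp * s.dp) * (0.1 if s.ckpt else 1.0)
    return time, math.ceil(mem)  # integer memory units index the DP table


def search(layers, strategies, budget):
    """Knapsack-style DP; best[m] = (min total time, plan) using exactly m memory units."""
    INF = float("inf")
    best = [(0.0, [])] + [(INF, None)] * budget
    for compute, params, acts in layers:
        nxt = [(INF, None)] * (budget + 1)
        for m, (t, plan) in enumerate(best):
            if t == INF:
                continue
            for s in strategies:
                dt, dm = layer_cost(s, compute, params, acts)
                if m + dm <= budget and t + dt < nxt[m + dm][0]:
                    nxt[m + dm] = (t + dt, plan + [s])
        best = nxt
    time, plan = min(best, key=lambda entry: entry[0])
    return (None, None) if plan is None else (time, plan)


cands = decision_tree(8)[1]     # candidate strategies with PP = 1 on 8 GPUs
model = [(8.0, 16, 64)] * 4     # 4 identical layers: (compute, params, activations)
print(len(cands))               # 14
for budget in (96, 40):
    time, plan = search(model, cands, budget)
    print(budget, round(time, 3), {(s.tp, s.dp, s.sdp, s.ckpt) for s in plan})
# 96 4.0 {(1, 8, False, False)}
# 40 4.2 {(1, 8, True, False)}
```

With an ample budget this toy search keeps plain data parallelism on every layer; with a tighter budget it switches to SDP, trading a small assumed communication overhead for much lower parameter memory per GPU.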

Numerical Results and Framework Validation

The paper supports Galvatron-BMW's effectiveness with evaluations on various Transformer models under different GPU memory budgets. In these experiments, Galvatron-BMW consistently outperforms existing approaches and achieves significant throughput improvements. Its hybrid parallelism strategies, for instance, let it fit larger batch sizes than the baseline approaches under the same memory constraints. This result highlights the framework's ability to make better use of limited GPU memory and thereby improve overall system performance.
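To illustrate why balancing memory across pipeline stages can allow larger batch sizes, here is a toy Python sketch. It is a simplification under stated assumptions, not the paper's bi-objective formulation. Each layer has a hypothetical per-sample time, a fixed parameter memory, and a per-sample activation memory, and a stage fits batch size b if params + b * activations <= budget. The two objectives are combined lexicographically: first maximize the batch size every stage can fit, then minimize the slowest stage's time. The names `Layer`, `evaluate`, and `balanced_partition` are hypothetical, and the brute-force enumeration only suits small layer counts.

```python
from itertools import combinations
from typing import NamedTuple


class Layer(NamedTuple):
    time: float    # per-sample compute time (arbitrary units)
    params: float  # memory for weights and optimizer state, independent of batch size
    act: float     # activation memory per sample (assumed > 0)


def stage_max_batch(stage, budget):
    """Largest batch b with params + b * activations <= budget (0 if nothing fits)."""
    params = sum(layer.params for layer in stage)
    act = sum(layer.act for layer in stage)
    return max(0, int((budget - params) // act))


def split(layers, cuts):
    """Cut a layer list into contiguous pipeline stages at the given indices."""
    bounds = (0, *cuts, len(layers))
    return [layers[a:b] for a, b in zip(bounds, bounds[1:])]


def evaluate(layers, cuts, budget):
    """Return (batch size every stage can fit, per-sample time of the slowest stage)."""
    stages = split(layers, cuts)
    batch = min(stage_max_batch(s, budget) for s in stages)
    max_time = max(sum(layer.time for layer in s) for s in stages)
    return batch, max_time


def balanced_partition(layers, num_stages, budget):
    """Brute-force every contiguous partition; rank by larger batch, then faster bottleneck."""
    best_key, best_cuts = None, None
    for cuts in combinations(range(1, len(layers)), num_stages - 1):
        batch, max_time = evaluate(layers, cuts, budget)
        key = (-batch, max_time)  # lexicographic: memory objective first, then compute
        if best_key is None or key < best_key:
            best_key, best_cuts = key, cuts
    return best_cuts


layers = [Layer(1.0, 2.0, 1.0)] * 8 + [Layer(2.0, 10.0, 4.0)]  # 8 blocks + a heavy output head
naive = (5,)  # even split: first ceil(9 / 2) = 5 layers on stage 0
balanced = balanced_partition(layers, num_stages=2, budget=40.0)
print(naive, evaluate(layers, naive, 40.0))        # (5,) (3, 5.0)
print(balanced, evaluate(layers, balanced, 40.0))  # (6,) (4, 6.0)
```

In this toy model, the even split leaves the heavy output head on a crowded last stage and caps the batch at 3; moving one more block onto the first stage raises the fittable batch to 4, but makes the bottleneck stage slower (6.0 versus 5.0 time units). That tension between memory balance and compute balance is the kind of trade-off a bi-objective workflow has to weigh, rather than optimizing either objective alone.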

Implications and Future Directions

The implications of this research are substantial, both practically and theoretically. From a practical standpoint, Galvatron-BMW facilitates more efficient training of large-scale deep learning models, potentially reducing the time and cost associated with these processes. Theoretically, it contributes to the ongoing discourse on optimizing distributed training systems and may inspire future research into even more seamless integration of multiple parallelization dimensions using automatic, intelligent strategies.

Looking forward, potential advancements may include extending this framework to heterogeneous environments and improving training efficiency for even larger models with dynamic, complex architectures. Further automation that adapts to varying hardware configurations could also significantly benefit the field.

In conclusion, the paper offers an insightful approach to automatic hybrid parallelism in distributed deep learning, providing robust tools for improving throughput and efficiency in large-scale model training. Galvatron-BMW sets a precedent for future developments in this domain, with potential benefits for both the practical deployment and the theoretical understanding of parallel training strategies.