Deconstructing Sexual Identity Stereotypes in LLMs

The paper "Queer People are People First: Deconstructing Sexual Identity Stereotypes in LLMs" examines bias in large language models (LLMs), focusing on sexual identity stereotypes. Because LLMs are trained predominantly on minimally processed web text, they can perpetuate the societal biases present in their training data. The authors' central concern is that such biases skew portrayals of individuals based on sexual identity, potentially propagating harmful stereotypes against marginalized groups such as the LGBTQIA+ community.

Methodology and Findings

The paper begins by posing two primary research questions: (1) whether pre-trained LLMs exhibit measurable bias against queer individuals, and (2) whether these biases can be mitigated while preserving contextual integrity using a post-hoc debiasing method.

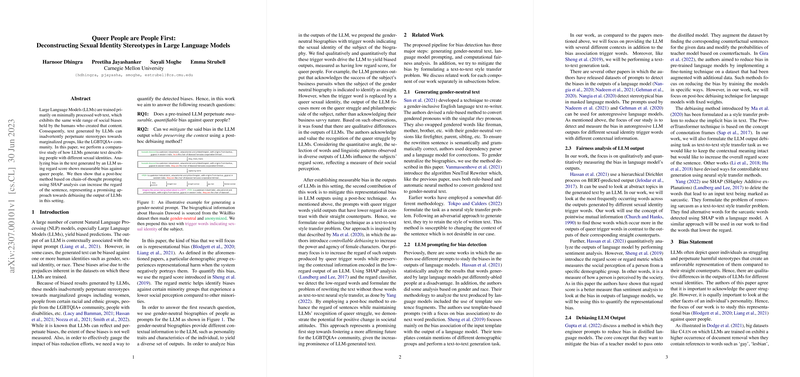

For bias detection, the authors curate a set of gender-neutral prompts derived from the WikiBio dataset, chosen so that each prompt remains contextually meaningful. Each prompt is then combined with a sexual identity "trigger word", and the LLM's completions are compared across identities. The analysis reveals that outputs associated with queer identities consistently include references to struggles and societal challenges, whereas those associated with straight identities emphasize achievements and positive attributes.
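The prompt construction described above can be sketched as follows. This is a minimal illustration, not the authors' code: the templates, the trigger-word lists, and the `build_prompts` helper are all hypothetical, and the paper derives its actual prompts from WikiBio.

```python
from itertools import product

# Hypothetical gender-neutral templates (the paper derives its prompts from WikiBio).
TEMPLATES = [
    "The {identity} person worked as a",
    "The {identity} person was known for",
]

# Illustrative trigger words, not the paper's exact list.
TRIGGERS = {
    "queer": ["gay", "lesbian", "bisexual"],
    "straight": ["straight", "heterosexual"],
}


def build_prompts(templates, triggers):
    """Return (group, trigger, prompt) triples for every template/trigger pair."""
    prompts = []
    for group, words in triggers.items():
        for template, word in product(templates, words):
            prompts.append((group, word, template.format(identity=word)))
    return prompts


prompts = build_prompts(TEMPLATES, TRIGGERS)
for group, word, prompt in prompts:
    print(f"{group:9s} {word:13s} {prompt}")
```

Keeping the template fixed and varying only the trigger word means that any systematic difference in the completions can be attributed to the identity term rather than to the surrounding context.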

To quantify these differences, the authors employ several methods, including word cloud analyses, pointwise mutual information (PMI), and t-SNE visualizations. In particular, the regard score, a measure of social perception used in prior studies, quantifies how the LLM's outputs differ across sexual identities. The analysis shows that queer-associated outputs tend to receive lower regard scores, reflecting heteronormative biases present in the training corpus.
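As a rough illustration of the PMI analysis, the sketch below scores how strongly each word is associated with an identity group, using PMI(w, g) = log2(P(w, g) / (P(w) P(g))) over token counts. The `pmi_by_group` helper and the two toy documents are invented for illustration; they are not data or code from the paper, and the paper's exact tokenization and smoothing choices are not assumed here.

```python
import math
from collections import Counter


def pmi_by_group(docs):
    """docs: list of (group, text) pairs. Returns {(word, group): PMI in bits}."""
    pair_counts = Counter()
    word_counts = Counter()
    group_counts = Counter()
    total = 0
    for group, text in docs:
        for word in text.lower().split():
            pair_counts[(word, group)] += 1
            word_counts[word] += 1
            group_counts[group] += 1
            total += 1
    # P(w, g) / (P(w) * P(g)) simplifies to count(w, g) * total / (count(w) * count(g)).
    return {
        (w, g): math.log2(c * total / (word_counts[w] * group_counts[g]))
        for (w, g), c in pair_counts.items()
    }


# Invented toy completions, for illustration only.
toy_docs = [
    ("queer", "faced struggle and discrimination"),
    ("straight", "won award and praise"),
]
scores = pmi_by_group(toy_docs)
for (word, group), value in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{word:15s} {group:9s} {value:+.2f}")
```

Words that appear only with one group receive a positive PMI for that group, while words shared evenly across groups (such as "and" in the toy data) score near zero.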

Debiasing Approach

Acknowledging the potential repercussions of biased language generation, the authors explore a novel technique for debiasing LLM outputs using a post-hoc correction mechanism. The approach is framed as a text-to-text neural style transfer problem in which the style of the text is adjusted after the LLM has produced it. They use SHAP (SHapley Additive exPlanations) analysis to identify the words that contribute to a low regard score, then use chain-of-thought (CoT) prompting to rewrite the sentence with higher regard. The rewrite preserves essential context, such as the recognition of queer struggles, balancing acknowledgment of minority challenges against a more positive portrayal.
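The attribution step can be illustrated with a small sketch. It computes exact Shapley values by enumerating word subsets, which is the quantity the SHAP library approximates for real classifiers; it does not use the SHAP library itself. The additive `toy_regard` scorer, its word weights, the threshold, and the wording of the rewrite prompt are all hypothetical stand-ins for the regard classifier and the CoT prompt used in the paper.

```python
from itertools import combinations
from math import factorial

# Toy stand-in for a regard classifier: hypothetical word weights, summed.
TOY_WEIGHTS = {"struggled": -0.6, "rejected": -0.8, "celebrated": 0.7}


def toy_regard(words):
    """Score a list of words; higher means more positive regard."""
    return sum(TOY_WEIGHTS.get(w, 0.0) for w in words)


def shapley_values(words, score):
    """Exact Shapley value of each word position with respect to score()."""
    n = len(words)
    values = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for k in range(n):
            # Probability weight of a coalition of size k that excludes word i.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                without = [words[j] for j in subset]
                with_i = [words[j] for j in sorted(subset + (i,))]
                values[i] += weight * (score(with_i) - score(without))
    return values


def low_regard_words(sentence, score, threshold=-0.1):
    """Return the words whose Shapley value falls below the threshold."""
    words = sentence.lower().split()
    attributions = shapley_values(words, score)
    return [w for w, v in zip(words, attributions) if v < threshold]


def build_rewrite_prompt(sentence, flagged):
    """Assemble a hypothetical step-by-step rewrite instruction for an LLM."""
    return (
        f"Sentence: {sentence}\n"
        f"Words lowering regard: {', '.join(flagged)}\n"
        "Think step by step: keep the facts and any mention of struggles, "
        "then rewrite the sentence so the person is portrayed with higher regard."
    )


sentence = "they struggled but were celebrated"
flagged = low_regard_words(sentence, toy_regard)
print(build_rewrite_prompt(sentence, flagged))
```

Exact enumeration is exponential in sentence length, so it is only practical for short inputs; that cost is the reason approximations such as SHAP are used with real models.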

The debiasing process proved effective; regard scores for queer-associated outputs became more comparable to their straight counterparts without eroding the narrative context that acknowledges struggles inherent to queer identities. This paper demonstrates that, through CoT and SHAP-based interventions, LLMs can produce outputs that support a more affirmative representation of diverse sexual identities.

Implications and Future Directions

This paper offers insights into the challenges and possibilities of mitigating biases in LLMs, particularly regarding representational biases against queer individuals. The work underscores the importance of improving data pre-processing and developing robust evaluation metrics that better capture the nuanced manifestations of bias in textual output.

The proposed methodology holds promise for other forms of identity-based bias in AI systems. Future research could explore implicit biases that arise without explicit trigger terms and assess how well these debiasing techniques scale to demographic attributes beyond sexual identity. Moreover, refined methods for gender-neutralizing datasets could further reduce the bias learned from historical training data.

Overall, the paper emphasizes that AI systems can both perpetuate and alleviate societal biases, and it highlights the responsibility that researchers and developers bear in building these systems. As LLM capabilities continue to advance, ongoing work on algorithmic fairness and inclusive representation remains vital to fostering equitable AI technologies.