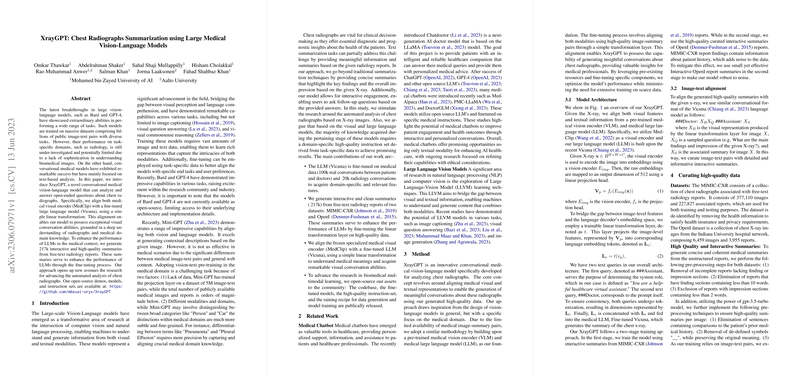

Summary of "XrayGPT: Chest Radiographs Summarization using Large Medical Vision-LLMs"

This paper introduces XrayGPT, a model for automated analysis of chest radiographs that combines vision and language capabilities in a single multimodal system. The researchers aim to close the performance gap that generic vision-LLMs, such as GPT-4, show when applied to specialized domains such as radiology.

Methodology

- Model Architecture: XrayGPT combines a medical visual encoder, MedCLIP, with a fine-tuned LLM, Vicuna. A linear transformation aligns the two components by mapping the encoder's image features into the LLM's input space, which enables radiological image understanding and textual dialogue generation. This alignment step is critical for bridging the gap between dense medical imaging features and language representations.

- Data Utilization: The authors create approximately 217k high-quality interactive summaries from the MIMIC-CXR and OpenI datasets. These summaries serve as fine-tuning data that give the LLM domain-specific knowledge, improving its interpretability and its interaction with radiological data.

- Training Process: XrayGPT is trained in two stages. In the first stage, it learns from image-text pairs to establish foundational image-report relationships. In the second stage, it is refined on high-quality curated datasets to focus on radiology-specific narratives.
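
The linear alignment described under Model Architecture can be illustrated with a minimal sketch. This is not the authors' implementation: the dimensions, the function names (`make_linear`, `project`, `build_llm_input`), the random initialisation, and the choice to prepend the projected image tokens to the text embeddings are all assumptions made for illustration. It only shows the general idea of mapping visual-encoder tokens into the embedding width an LLM expects.

```python
import random

# Toy sizes chosen for readability; they are NOT the dimensions used by XrayGPT.
VISION_DIM = 4  # hypothetical width of one visual-encoder output token
LLM_DIM = 6     # hypothetical width of one LLM input embedding


def make_linear(in_dim, out_dim, seed=0):
    """Create a randomly initialised weight matrix (out_dim x in_dim) and a zero bias."""
    rng = random.Random(seed)
    scale = in_dim ** -0.5
    weight = [[rng.gauss(0.0, scale) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias


def project(tokens, weight, bias):
    """Apply y = W x + b to every visual token independently."""
    return [
        [sum(w * x for w, x in zip(row, token)) + b for row, b in zip(weight, bias)]
        for token in tokens
    ]


def build_llm_input(visual_tokens, text_embeddings, weight, bias):
    """Assumed layout: projected visual tokens first, then the text embeddings."""
    return project(visual_tokens, weight, bias) + text_embeddings


# Stand-ins for encoder output (3 image tokens) and prompt embeddings (2 text tokens).
visual_tokens = [[0.1 * (i + j) for j in range(VISION_DIM)] for i in range(3)]
text_embeddings = [[0.0] * LLM_DIM for _ in range(2)]

weight, bias = make_linear(VISION_DIM, LLM_DIM)
sequence = build_llm_input(visual_tokens, text_embeddings, weight, bias)
print(len(sequence), len(sequence[0]))  # prints "5 6"
```

In a setup like this, the projection is the only piece that has to learn the mapping between the two representation spaces, which is why a single linear layer can act as the bridge between an image encoder and a language model.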

Evaluation and Results

The researchers employed various metrics, including ROUGE scores, to quantitatively assess XrayGPT’s performance. Compared to the baseline model, MiniGPT-4, XrayGPT demonstrated substantial improvements, notably a 19% increase in ROUGE-1 (R-1) score, underscoring its stronger capability for summarizing radiological findings.
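
For readers unfamiliar with the metric, ROUGE-1 measures unigram overlap between a generated summary and a reference. The sketch below is a simplified version that assumes whitespace tokenization and lowercasing only; standard ROUGE tooling typically adds its own tokenization and optional stemming, so scores from this function will not match published numbers exactly. The function name `rouge_1` and the two example sentences are illustrative and do not come from the paper.

```python
from collections import Counter


def rouge_1(candidate, reference):
    """Return (precision, recall, f1) based on clipped unigram overlap."""
    cand_counts = Counter(candidate.lower().split())
    ref_counts = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count per word (clipped matches).
    overlap = sum((cand_counts & ref_counts).values())
    if overlap == 0:
        return 0.0, 0.0, 0.0
    precision = overlap / sum(cand_counts.values())
    recall = overlap / sum(ref_counts.values())
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Made-up sentences used only to exercise the function.
generated = "the lungs are clear"
reference = "lungs are clear bilaterally"
print(rouge_1(generated, reference))  # prints (0.75, 0.75, 0.75)
```

Three of the four words in each sentence match, so precision, recall, and F1 all come out to 0.75 in this example.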

Qualitative assessments reveal that XrayGPT can generate both detailed findings and concise impressions, simulate interactive dialogues akin to a radiologist's consultation, and even offer treatment recommendations based on the analysis provided.

Implications and Future Directions

The implications of this research are significant for the field of biomedical multimodal learning. XrayGPT not only advances automated radiographic summarization but also pushes the boundaries of conversational AI within healthcare. By making the model and its assets open-source, the authors encourage the community to explore further improvements and applications, potentially extending to other specialized medical imaging domains.

Moving forward, the integration of such models in clinical settings could change diagnostic workflows by supporting radiologists with preliminary analyses and by improving patient engagement through interactive explanations of findings. Future research could explore scalability, adaptation to other medical imaging modalities, improved interpretability, and the ethical considerations raised by AI-generated medical content.