Introduction to AGI in Medical Imaging

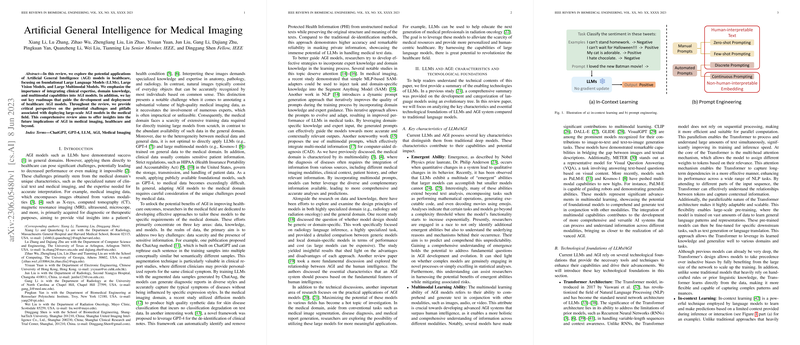

The integration of artificial general intelligence (AGI) into healthcare is a significant advance aimed at improving patient outcomes and the efficiency of care delivery. AGI-oriented models, particularly large language models (LLMs), large vision models (LVMs), and large multimodal models (LMMs), promise to transform healthcare. These technologies bring together clinical expertise, domain-specific knowledge, and the interpretation of multimodal data to create powerful tools for medical practice.

Adapting AGI to Health Care Challenges

Adapting AGI efficiently to healthcare means confronting challenges specific to the domain. AGI must accommodate the detailed and highly specialized nature of clinical data. Medical imaging shows this starkly: images from MRI, CT, and other modalities require nuanced interpretation that draws on anatomical, pathological, and radiological expertise at once. A shortage of high-quality annotated medical data, privacy requirements (reinforced by legislation such as HIPAA in the U.S.), and the sensitive nature of clinical information are significant hurdles to using these models in the medical domain.

Applications and Developing Strategies

The roadmap for applying AGI in healthcare is multifaceted, involving expert-in-the-loop methods, domain-specific adaptation, and prompt tuning to refine model outputs. LLMs such as ChatGPT could support medical education, assist with patient consultations, and reduce clinical workloads. Combining text, images, and potentially genetic data is a major step toward an integrated diagnostic approach. Such integration requires strategies that ensure data accessibility and quality, so that AGI outputs align closely with detailed clinical diagnostics and procedures.

Integrating LLMs into healthcare does not simply mean automating processes; it points to a complementary relationship between these models and human practitioners. AGI can support the medical field by serving as an aid to the expertise of healthcare professionals, not a replacement for it.

Challenges in Practical Application

Despite this promise, applying AGI in practice is not straightforward. Medical AI must deal with the intricacies of prompt design, legal and ethical concerns around data, and the transformation of raw healthcare data into a format AI models can use while respecting privacy constraints. For instance, adapting LVMs such as the Segment Anything Model (SAM), along with AI-generated content (AIGC) applications, to healthcare requires high-quality annotations that meet rigorous clinical standards. Addressing these challenges calls for mitigation strategies that combine robust expert supervision, training on diverse and representative data, and fail-safes on model predictions to minimize risk.
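One common way to build a fail-safe on model predictions is selective prediction: the system accepts a model output only when its confidence clears a threshold and otherwise routes the case to a clinician for review. The sketch below assumes a classifier that returns per-class probabilities for each case; the `triage` function, the `Decision` record, the labels, and the 0.9 threshold are hypothetical illustrations, not part of any specific medical AI system.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Decision:
    case_id: str
    label: str          # most probable class according to the model
    confidence: float   # probability assigned to that class
    needs_review: bool  # True when the case is deferred to a clinician


def triage(
    case_ids: Sequence[str],
    probs: Sequence[Sequence[float]],
    labels: Sequence[str],
    threshold: float = 0.9,  # hypothetical value; tune on validation data
) -> List[Decision]:
    """Accept confident predictions and defer the rest to human review."""
    if not 0.0 <= threshold <= 1.0:
        raise ValueError("threshold must be in [0, 1]")
    if len(case_ids) != len(probs):
        raise ValueError("one probability vector is required per case")

    decisions = []
    for case_id, p in zip(case_ids, probs):
        if len(p) != len(labels):
            raise ValueError(f"{case_id}: expected {len(labels)} probabilities")
        # Index of the highest-probability class.
        best = max(range(len(p)), key=lambda i: p[i])
        conf = float(p[best])
        # Fail-safe: anything below the threshold is flagged for a clinician.
        decisions.append(Decision(case_id, labels[best], conf, conf < threshold))
    return decisions


if __name__ == "__main__":
    results = triage(
        ["scan-001", "scan-002"],
        [[0.97, 0.03], [0.55, 0.45]],
        ["normal", "abnormal"],
    )
    for d in results:
        status = "clinician review" if d.needs_review else "auto-accepted"
        print(f"{d.case_id}: {d.label} ({d.confidence:.2f}) -> {status}")
```

Raw softmax scores from neural networks are often poorly calibrated, so in practice a threshold like this would be chosen on held-out validation data, ideally after calibrating the model, rather than fixed in advance.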

Conclusion

The migration of AGI into healthcare is a paradigm shift with transformative implications for patient care. Integrating expert knowledge into AGI models and embedding these models within multimodal healthcare systems is essential to realizing their full potential. The path is fraught with challenges, from ethical considerations to the need for extensive data, and close collaboration between AI specialists and clinicians will be paramount. As these technologies are refined, we move closer to a future in which AGI is an integral part of medical protocols, augmenting the expertise of healthcare professionals and significantly improving patient care.