Advancing LLM Efficiency and Adaptability with Outlier-aware Weight Quantization and Weak Column Tuning

Introduction

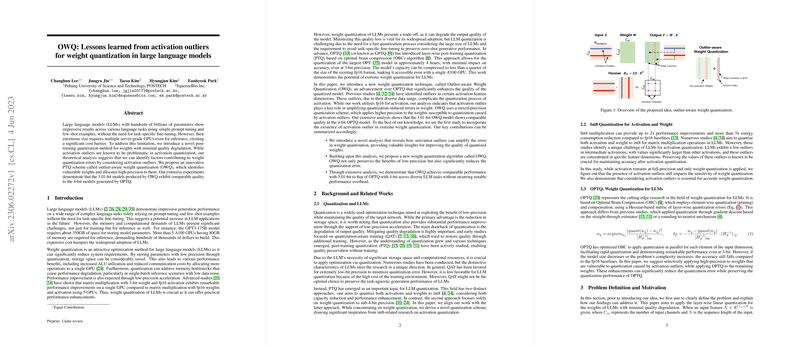

Deploying LLMs in real-world applications remains challenging because of their large memory and compute requirements. Recent weight quantization methods such as OPTQ have made progress on this problem by compressing models to manageable sizes without a significant loss in quality. This paper introduces Outlier-aware Weight Quantization (OWQ), which builds on that work with one key addition: it shrinks the memory footprint of LLMs while keeping a small, structured subset of quantization-sensitive weights in high precision.

Outlier-aware Weight Quantization (OWQ)

OWQ identifies a small subset of weights that are particularly susceptible to quality degradation under quantization. These are referred to as "weak columns," and OWQ stores them in high precision. Exempting them from aggressive quantization substantially reduces the overall quantization error, so model quality is largely preserved even at very low average bit-widths (e.g., 3.1 bits). Extensive experiments show that OWQ considerably improves on prior state-of-the-art quantization methods, including OPTQ.
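
The following is a minimal NumPy sketch of weak-column selection for a single linear layer. It is not the authors' implementation, and it rests on three assumptions. First, the per-column sensitivity proxy is H_jj * ||dW[:, j]||^2, where H = 2 X X^T is built from calibration activations X and dW is the quantization error. Second, plain round-to-nearest (RTN) replaces OPTQ for the dense columns to keep the example short. Third, the helper names (`rtn_quantize`, `select_weak_columns`, `owq_sketch`) and the toy sizes are hypothetical.

```python
import numpy as np


def rtn_quantize(w: np.ndarray, bits: int) -> np.ndarray:
    """Per-row asymmetric round-to-nearest quantization; returns dequantized weights."""
    levels = 2 ** bits - 1
    w_min = w.min(axis=1, keepdims=True)
    w_max = w.max(axis=1, keepdims=True)
    scale = np.maximum(w_max - w_min, 1e-8) / levels
    q = np.clip(np.round((w - w_min) / scale), 0, levels)
    return q * scale + w_min


def select_weak_columns(w: np.ndarray, x: np.ndarray, bits: int, num_weak: int) -> np.ndarray:
    """Rank input columns by the assumed proxy H_jj * ||dW[:, j]||^2 and return the top ones."""
    h_diag = 2.0 * np.sum(x * x, axis=1)  # diagonal of H = 2 X X^T
    delta = w - rtn_quantize(w, bits)      # error if every column were quantized
    sensitivity = h_diag * np.sum(delta ** 2, axis=0)
    return np.sort(np.argsort(sensitivity)[-num_weak:])


def owq_sketch(w: np.ndarray, x: np.ndarray, bits: int = 3, num_weak: int = 2):
    """Keep weak columns in full precision and quantize only the remaining dense columns."""
    weak = select_weak_columns(w, x, bits, num_weak)
    dense = np.setdiff1d(np.arange(w.shape[1]), weak)
    w_hat = w.copy()
    # Row ranges now come from dense columns only, so outlier columns no longer stretch them
    w_hat[:, dense] = rtn_quantize(w[:, dense], bits)
    return w_hat, weak


def output_error(w: np.ndarray, w_hat: np.ndarray, x: np.ndarray) -> float:
    """Layer-wise reconstruction objective ||W X - W_hat X||_F^2."""
    return float(np.sum(((w - w_hat) @ x) ** 2))


# Toy layer: 16 outputs, 32 inputs, 64 calibration tokens (all sizes hypothetical)
rng = np.random.default_rng(0)
w = rng.normal(size=(16, 32))
x = rng.normal(size=(32, 64))
x[[3, 17]] *= 50.0  # two input channels with outlier activations

w_hat, weak = owq_sketch(w, x, bits=3, num_weak=2)
print("weak columns:", weak)
print("error, all columns quantized:", output_error(w, rtn_quantize(w, 3), x))
print("error, weak columns kept:    ", output_error(w, w_hat, x))
```

In this toy setup, the columns that multiply the outlier activation channels dominate the layer's output error. Keeping only those columns in full precision should therefore remove most of the error, at the cost of storing a few extra high-precision columns.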

Weak Column Tuning (WCT)

A second contribution of this paper is Weak Column Tuning (WCT), a parameter-efficient fine-tuning scheme for OWQ-quantized models. WCT updates only the high-precision weak columns identified during OWQ and leaves the low-precision weights frozen. This lets the model adapt to task-specific shifts with minimal memory and compute overhead. In the reported experiments, WCT outperforms leading parameter-efficient fine-tuning methods, including QLoRA, which shows that OWQ offers both memory efficiency and task adaptability.
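
Below is a minimal sketch of the WCT idea on a single linear layer, under stated assumptions. The dequantized low-bit weights are held fixed, and gradient descent updates only the full-precision weak columns. A squared-error regression toward hypothetical task-shifted targets stands in for a real task loss; the actual scheme would backpropagate a model-level loss through the whole network. The weak-column indices, the 0.25-grid "quantization," and all helper names are illustrative assumptions, not the paper's code.

```python
import numpy as np


def merged_weight(w_dense_q: np.ndarray, w_weak: np.ndarray, weak_idx: np.ndarray) -> np.ndarray:
    """Combine frozen dequantized dense weights with the full-precision weak columns."""
    w = w_dense_q.copy()
    w[:, weak_idx] = w_weak
    return w


def task_loss(w_dense_q, w_weak, weak_idx, x, y) -> float:
    """Squared error summed over outputs and averaged over tokens (stand-in task loss)."""
    w = merged_weight(w_dense_q, w_weak, weak_idx)
    return float(np.sum((w @ x - y) ** 2) / x.shape[1])


def wct_step(w_dense_q, w_weak, weak_idx, x, y, lr):
    """One gradient-descent step that updates only the weak columns."""
    w = merged_weight(w_dense_q, w_weak, weak_idx)
    residual = w @ x - y                      # (d_out, n)
    grad = 2.0 * residual @ x.T / x.shape[1]  # dL/dW for task_loss, shape (d_out, d_in)
    # Apply only the weak-column slice of the gradient; dense weights stay frozen
    return w_weak - lr * grad[:, weak_idx]


rng = np.random.default_rng(0)
d_out, d_in, n = 8, 16, 64
weak_idx = np.array([2, 9])             # hypothetical output of an OWQ-style selection
w_pre = rng.normal(size=(d_out, d_in))
w_dense_q = np.round(w_pre * 4) / 4     # stand-in for dequantized low-bit weights
w_dense_q[:, weak_idx] = 0.0            # weak columns live in w_weak instead
w_weak = w_pre[:, weak_idx].copy()

x = rng.normal(size=(d_in, n))
y = (w_pre + 0.5 * rng.normal(size=w_pre.shape)) @ x  # hypothetical task-shifted targets

loss_before = task_loss(w_dense_q, w_weak, weak_idx, x, y)
for _ in range(100):
    w_weak = wct_step(w_dense_q, w_weak, weak_idx, x, y, lr=0.1)
loss_after = task_loss(w_dense_q, w_weak, weak_idx, x, y)
print(f"trainable: {w_weak.size} of {d_out * d_in} weights")
print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
```

The optimizer state and gradients only cover `w_weak`, so training memory scales with the number of weak columns, not with the full weight matrix. This is the source of the low overhead described above.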

Experimental Validation

OWQ and WCT outperform existing methods across a variety of benchmarks and model configurations. Models quantized to 3.1 bits with OWQ perform nearly as well as 4-bit models produced with conventional quantization techniques, a significant gain in quantization efficiency. When applied to pre-quantized models, WCT also outperforms existing parameter-efficient tuning methods, with both a smaller memory footprint and better task-specific performance.
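
Average bit-widths such as 3.1 bits arise from mixing a low-bit dense part with a few high-precision columns. The small calculation below shows this arithmetic. It assumes 3-bit dense weights and 16-bit weak columns, uses a hypothetical layer with 4096 input columns, and ignores the storage for quantization scales, zero-points, and weak-column indices. The function names are illustrative.

```python
def effective_bits(d_in: int, num_weak: int, low_bits: int = 3, high_bits: int = 16) -> float:
    """Average bits per weight when num_weak of d_in input columns stay in high precision.

    Simplification: ignores quantization scales/zero-points and weak-column index storage.
    """
    return low_bits + (high_bits - low_bits) * num_weak / d_in


def weak_columns_for_budget(d_in: int, target_bits: float, low_bits: int = 3, high_bits: int = 16) -> int:
    """Largest number of weak columns that keeps the average at or below target_bits."""
    return int((target_bits - low_bits) * d_in / (high_bits - low_bits))


for target in (3.01, 3.1):
    k = weak_columns_for_budget(4096, target)
    print(f"target {target}: {k} weak columns -> {effective_bits(4096, k):.4f} bits")
```

Under these assumptions, a 3.1-bit budget leaves room for 31 FP16 columns out of 4096. That is under 1% of the layer, which is why the high-precision part adds only a fraction of a bit per weight.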

Future Directions

While the current instantiation of OWQ and WCT marks a substantial step forward in the practical deployment of LLMs, it also opens several avenues for future research. Exploring dynamically adaptive quantization schemes that can respond to variable task demands and model configurations could further enhance the versatility and efficiency of LLM deployments. Furthermore, integrating OWQ and WCT principles with emerging LLM architectures could catalyze the development of even more robust, adaptive, and efficient models suitable for a broader range of applications.

Conclusion

The OWQ technique, combined with the WCT scheme, is a significant advance in optimizing LLMs for practical deployment. It maintains model quality under extremely low-precision quantization and provides an efficient mechanism for task-specific fine-tuning. Together, these results pave the way for wider adoption of LLMs across diverse computational settings. Their implications for both the theoretical understanding of model quantization and the practical deployment of LLMs warrant further investigation.