The paper "A Two-Stage Decoder for Efficient ICD Coding" addresses the automation of International Classification of Diseases (ICD) coding from clinical notes, a multilabel text classification task characterized by noisy inputs and a long-tailed label distribution. Manual ICD coding is laborious and error-prone: coders must map the diagnoses and procedures documented in a note to specific codes, and the code set has grown from around 15,000 codes in ICD-9 to around 140,000 in ICD-10. A label space of this size is a central difficulty for any automated approach.

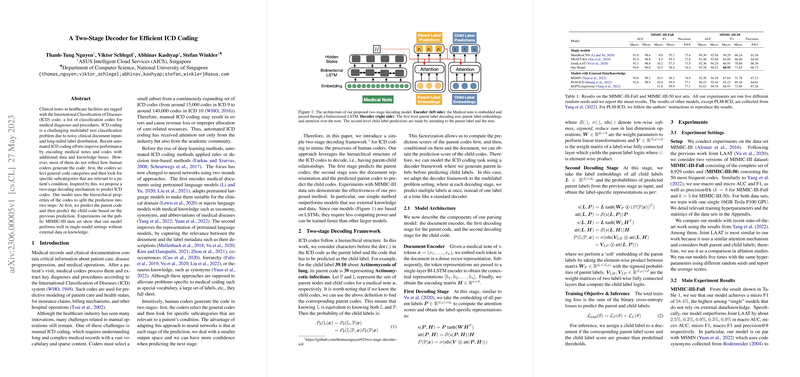

The proposed solution is a two-stage decoding framework inspired by the human coding process. Human coders typically select broader parent categories before narrowing down to specific subcategories. Analogously, the framework exploits the hierarchical structure of ICD codes: it first predicts parent codes and then predicts child codes conditioned on the previously predicted parents.
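
The parent-then-child idea can be illustrated with a small sketch. It is a simplification under stated assumptions, not the paper's decoder: it assumes a parent code is the part of a code before the decimal point, it uses a hard mask in place of the attention-based conditioning the summary describes, and the helper names (`parent_of`, `build_hierarchy`, `two_stage_decode`) and all probabilities are hypothetical.

```python
from collections import defaultdict


def parent_of(code: str) -> str:
    """Assumed parent rule: everything before the decimal point ('250.01' -> '250')."""
    return code.split(".", 1)[0]


def build_hierarchy(child_codes):
    """Group child codes under their (assumed) parent codes."""
    children = defaultdict(list)
    for code in child_codes:
        children[parent_of(code)].append(code)
    return dict(children)


def two_stage_decode(parent_probs, child_probs, hierarchy, threshold=0.5):
    """Stage 1 keeps parents scoring at or above the threshold.
    Stage 2 keeps children at or above the threshold, but only under predicted parents.
    The hard mask is an illustrative stand-in for conditioning on the parents."""
    predicted_parents = sorted(
        p for p, prob in parent_probs.items() if prob >= threshold
    )
    predicted_children = sorted(
        c
        for p in predicted_parents
        for c in hierarchy.get(p, [])
        if child_probs.get(c, 0.0) >= threshold
    )
    return predicted_parents, predicted_children


# Toy example with made-up scores (not model outputs).
hierarchy = build_hierarchy(["250.00", "250.01", "401.9", "428.0"])
parents, children = two_stage_decode(
    {"250": 0.9, "401": 0.2, "428": 0.7},
    {"250.00": 0.8, "250.01": 0.1, "401.9": 0.95, "428.0": 0.6},
    hierarchy,
)
print(parents, children)
```

In this toy run, `401.9` is dropped despite its high child score because its parent `401` was not predicted in the first stage, which shows how the first stage can narrow the candidate set for the second.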

Main Contributions and Methodology:

- Two-Stage Decoding Framework:

  - First Stage: Predicts general, parent-level ICD codes. The medical note is encoded with a bidirectional LSTM (Long Short-Term Memory) network, and an attention mechanism then computes label-specific representations.

  - Second Stage: Predicts more granular, child-level ICD codes from both the medical document and the predicted parent codes. This stage refines the predictions by attending to both the parent and the document encodings.

- Model Architecture:

  - Token embeddings are passed through a single-layer BiLSTM to obtain contextual representations that are shared by both decoding stages. Hierarchical relationships between codes are modeled explicitly, mirroring how human coders work.

  - Both stages use attention to focus on the relevant parts of the input, which narrows the search space and improves prediction confidence.

- Empirical Evaluation:

  - On the MIMIC-III dataset, the proposed model outperforms several single-model baselines, achieving a micro F1 score of 58.4% on the full dataset, and remains competitive even with models that use external data.

  - The model is also notably efficient: it requires significantly less training time (1.25 hours per training epoch) than more complex state-of-the-art methods such as MSMN.

- Ablation Studies:

  - Explicitly modeling hierarchical information improves both parent and child label prediction performance.

  - The model is robust across different frequency groups of ICD codes, maintaining accuracy even on infrequently occurring labels.
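
For readers unfamiliar with the headline metric, micro F1 pools true positives, false positives, and false negatives over all documents and labels before computing precision and recall, so frequent codes weigh more heavily than rare ones. That is why a per-frequency-group analysis is a useful complement. The following is a minimal sketch on toy data; the function name `micro_f1` and the example code sets are illustrative and are not taken from the paper's evaluation code.

```python
def micro_f1(gold, predicted):
    """Micro-averaged F1 for multilabel predictions.

    gold and predicted are equal-length lists of sets of codes, one set per document.
    """
    tp = fp = fn = 0
    for g, p in zip(gold, predicted):
        tp += len(g & p)   # codes correctly predicted
        fp += len(p - g)   # codes predicted but not in the gold set
        fn += len(g - p)   # gold codes that were missed
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy example: two documents with made-up gold and predicted code sets.
gold = [{"250.00", "401.9"}, {"428.0"}]
predicted = [{"250.00"}, {"428.0", "401.9"}]
score = micro_f1(gold, predicted)
print(round(score, 3))
```

Here the pooled counts are 2 true positives, 1 false positive, and 1 false negative, so precision and recall are both 2/3 and the micro F1 is 2/3.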

The paper emphasizes that integrating hierarchical structure into neural network architectures can substantially improve performance on multilabel classification tasks such as ICD coding. The proposed method achieves competitive performance while remaining computationally efficient, which makes it a practical option for real-world settings where computational resources are limited. Future work could explore richer document or label representations, potentially leveraging the increasing availability of domain-specific pretrained LLMs.