RWKV: Reinventing RNNs for the Transformer Era

The landscape of NLP has been dramatically reshaped by the advent of Transformer models, whose self-attention mechanism has enabled major advances across a wide range of tasks. Despite their success, Transformers have an intrinsic limitation: their computation and memory grow quadratically with sequence length. Recurrent Neural Networks (RNNs), by contrast, scale linearly, but because they are hard to parallelize and to scale, they have struggled to match Transformer performance. This paper introduces a novel architecture, Receptance Weighted Key Value (RWKV), that aims to combine the strengths of RNNs and Transformers while mitigating their respective limitations.

Research Motivation and Approach

The impact of Transformers on NLP is profound, but the quadratic complexity of self-attention limits their scalability. The linear scaling of memory and computation in RNNs is therefore an attractive alternative, provided their performance gap can be closed. RWKV uses a linear attention mechanism that allows the model to be formulated either as a Transformer or as an RNN. This dual formulation lets RWKV parallelize computation during training, like a Transformer, while keeping memory and per-token computation constant during inference, like an RNN.
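The Transformer/RNN duality can be seen in the weighted key-value (WKV) quantity at the heart of RWKV's time mixing: it can be computed as a sum over all previous tokens (parallel, training style) or as a recurrence over a constant-size state (inference style). The sketch below is a minimal single-channel illustration, assuming the WKV formulation given in the RWKV paper, with `w` as the time-decay rate and `u` as a bonus applied to the current token. The function names and numeric values are hypothetical, and the numerical-stability tricks a real implementation needs are omitted.

```python
import math


def wkv_parallel(w, u, ks, vs):
    """Compute wkv_t for every t directly as a sum over earlier tokens (parallel form)."""
    out = []
    for t in range(len(ks)):
        # The current token gets the bonus u instead of a decay.
        num = math.exp(u + ks[t]) * vs[t]
        den = math.exp(u + ks[t])
        for i in range(t):
            # Earlier tokens are down-weighted by e^{-w} per step of distance.
            weight = math.exp(-(t - 1 - i) * w + ks[i])
            num += weight * vs[i]
            den += weight
        out.append(num / den)
    return out


def wkv_recurrent(w, u, ks, vs):
    """Compute the same outputs token by token with a constant-size state (a, b)."""
    a, b = 0.0, 0.0  # running decayed numerator and denominator
    out = []
    for k, v in zip(ks, vs):
        out.append((a + math.exp(u + k) * v) / (b + math.exp(u + k)))
        # Fold the current token into the state after producing its output.
        a = math.exp(-w) * a + math.exp(k) * v
        b = math.exp(-w) * b + math.exp(k)
    return out


if __name__ == "__main__":
    ks = [0.1, -0.4, 0.7]
    vs = [1.0, 2.0, -0.5]
    print(wkv_parallel(0.5, 0.3, ks, vs))
    print(wkv_recurrent(0.5, 0.3, ks, vs))  # same values, up to rounding
```

The parallel form touches every earlier token for each output, while the recurrent form carries only two numbers per channel forward, which is why the same model can be trained in parallel and run as an RNN.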

The core architecture of RWKV integrates:

- Linear Attention: Reformulating attention mechanisms to achieve linear, rather than quadratic, complexity.

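- Receptance Mechanism: Using a receptance vector to gate how much past information is received, combined with channel-directed attention (a per-channel time decay in place of dot-product token interactions) to handle long-range dependencies efficiently.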

- Parallelizable Training: Leveraging Transformer-like parallel training.

- Efficient Inference: Utilizing RNN-like constant-complexity inference.
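The receptance gate and the constant-size inference state can be seen together in a single RNN-mode step. This is a minimal sketch assuming the time-mixing equations from the RWKV paper, reduced to elementwise operations: the learned projection matrices (W_r, W_k, W_v, W_o in the paper) are replaced by identity, and the token-shift coefficients `mu_r`, `mu_k`, `mu_v`, the decay `w`, and the bonus `u` are hypothetical example values supplied by the caller. The names `TimeMixState`, `init_state`, and `time_mix_step` are illustrative, not part of any RWKV codebase.

```python
import math
from dataclasses import dataclass


@dataclass
class TimeMixState:
    """Recurrent state whose size depends on the channel count, not the sequence length."""
    x_prev: list  # previous token's input, used for token shift
    a: list       # per-channel decayed sum of e^{k_i} * v_i (WKV numerator)
    b: list       # per-channel decayed sum of e^{k_i} (WKV denominator)


def init_state(channels):
    """Hypothetical helper: an all-zero state for a given number of channels."""
    return TimeMixState([0.0] * channels, [0.0] * channels, [0.0] * channels)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def time_mix_step(x, state, mu_r, mu_k, mu_v, w, u):
    """One RNN-mode time-mixing step; projections W_r, W_k, W_v, W_o taken as identity."""
    out = []
    for c in range(len(x)):
        # Token shift: blend the current and previous input for each channel.
        r = mu_r[c] * x[c] + (1 - mu_r[c]) * state.x_prev[c]
        k = mu_k[c] * x[c] + (1 - mu_k[c]) * state.x_prev[c]
        v = mu_v[c] * x[c] + (1 - mu_v[c]) * state.x_prev[c]
        # WKV: decayed history plus a bonus-weighted contribution from the current token.
        e_uk = math.exp(u[c] + k)
        wkv = (state.a[c] + e_uk * v) / (state.b[c] + e_uk)
        # Receptance gate: sigmoid(r) controls how much of the WKV output passes through.
        out.append(sigmoid(r) * wkv)
        # Update the fixed-size state: decay the history by e^{-w}, then add this token.
        decay = math.exp(-w[c])
        state.a[c] = decay * state.a[c] + math.exp(k) * v
        state.b[c] = decay * state.b[c] + math.exp(k)
    state.x_prev = list(x)
    return out


if __name__ == "__main__":
    channels = 2
    half = [0.5] * channels  # hypothetical token-shift coefficients
    state = init_state(channels)
    for token in ([1.0, -1.0], [0.3, 0.7], [0.0, 2.0]):
        y = time_mix_step(token, state, half, half, half, w=[0.5, 1.0], u=[0.0, 0.0])
        print(y, len(state.a))
```

However many tokens are processed, the state remains three lists of length `channels`, which is the constant-memory inference property listed above.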

Experimental Validation

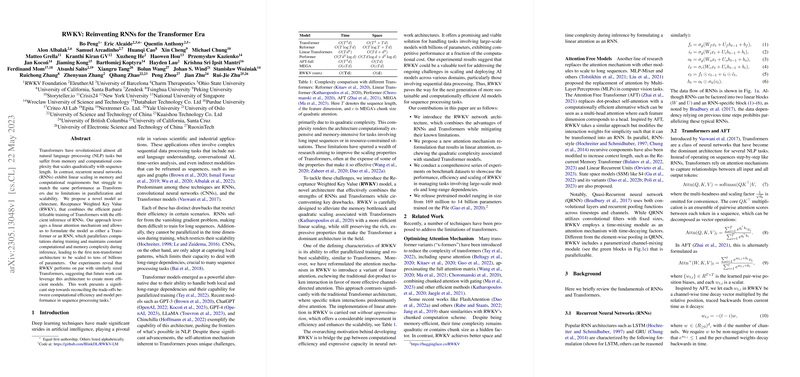

RWKV models scaled up to 14 billion parameters perform on par with similarly sized Transformers, showing that the architecture stays competitive without the quadratic scaling cost. Evaluations across twelve NLP benchmarks, including ARC Challenge and LAMBADA, support this finding, as summarized in the model architecture diagrams and result plots. These results suggest that RWKV can serve as a computationally efficient architecture at large parameter counts.

Performance and Complexity

A pivotal advantage of RWKV lies in its computational efficiency:

- Time and Space Complexity: A standard Transformer has time complexity $O(T^2 d)$ and space complexity $O(T^2 + Td)$, where $T$ is the sequence length and $d$ the feature dimension. RWKV reduces these to $O(Td)$ time and $O(d)$ space, significantly lowering the computational overhead.
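As a rough worked comparison, take the asymptotic terms for full self-attention, $O(T^2 d)$ time and $O(T^2 + Td)$ space, against RWKV's $O(Td)$ time and $O(d)$ space, with $T$ the sequence length and $d$ the feature dimension. The sketch below simply evaluates those terms with constant factors dropped, so its outputs are unit-free counts that show how the gap grows with $T$, not measured runtimes or memory usage. The function names and the choice of `d` are illustrative.

```python
def transformer_time(T, d):
    """Full self-attention: every token attends to every token, O(T^2 d)."""
    return T * T * d


def transformer_space(T, d):
    """Attention matrix plus per-token activations, O(T^2 + T d)."""
    return T * T + T * d


def rwkv_time(T, d):
    """RWKV: one fixed-cost state update per token, O(T d)."""
    return T * d


def rwkv_space(d):
    """RWKV inference state depends only on the feature dimension, O(d)."""
    return d


if __name__ == "__main__":
    d = 1024  # hypothetical feature dimension
    for T in (1024, 4096, 16384):
        time_ratio = transformer_time(T, d) // rwkv_time(T, d)  # equals T
        space_ratio = transformer_space(T, d) / rwkv_space(d)
        print(f"T={T}: time ratio {time_ratio}, space ratio {space_ratio:.0f}")
```

Because the time ratio is exactly $T$, the relative advantage grows linearly with context length.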

- Scalability: Models ranging from 169 million to 14 billion parameters, trained on extensive datasets, scale effectively without prohibitive computational cost.

Future Directions and Implications

RWKV's architecture bridges an important gap between computational efficiency and representational capacity, offering a framework that could change how sequence-processing models are scaled. Because these models are cheaper to run and more sustainable, they could be deployed more widely in resource-constrained environments, with significant implications for both practical applications and theoretical research.

Speculative Developments

Looking forward, enhancements in RWKV could include:

- Improving Time-Decay Formulations: Refining the mechanisms that dictate the relevance of past information.

- Cross-Attention Substitution: Replacing traditional cross-attention mechanisms in encoder-decoder architectures with RWKV-style computations.

- Customizability through Prompt Tuning: Manipulating hidden states to customize model behavior, making it more predictable and interpretable.

- Expanded State Memory: Increasing the internal state capacity to enhance long-range dependency modeling.
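The decay mechanism that the first of these directions would refine can be illustrated with the current formulation: in the WKV recurrence, a past token's contribution in a given channel shrinks by a factor of $e^{-w}$ per step, where $w > 0$ is a learned per-channel decay rate. The sketch below uses hypothetical helpers to show how $w$ sets an effective memory horizon; the half-life view ($\ln 2 / w$ steps) is an illustration, not a quantity reported in the paper.

```python
import math


def decay_weights(w, lags):
    """Relative weight e^{-n*w} given to a past token n steps older, for decay rate w > 0."""
    return [math.exp(-n * w) for n in lags]


def half_life(w):
    """Number of steps after which the decay factor falls to one half: ln 2 / w."""
    return math.log(2) / w


if __name__ == "__main__":
    for w in (0.01, 0.1, 1.0):  # hypothetical per-channel decay rates
        print(f"w={w}: half-life {half_life(w):.2f} steps, weights {decay_weights(w, [0, 5, 50])}")
```

Small values of $w$ keep distant tokens relevant for longer, while large values make a channel focus on the most recent context.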

Conclusions

RWKV represents a significant advancement in neural network design, uniting RNN and Transformer advantages while curtailing their limitations. By reformulating attention and leveraging channel-directed mechanisms, RWKV achieves linear computational complexity, making it a compelling choice for large-scale sequence processing tasks. This contribution lays a foundation for more efficient and sustainable AI models, potentially transforming how we approach and deploy large-scale AI systems.

RWKV paves the way for a new generation of efficient, scalable neural architectures, striking a critical balance between performance and computational feasibility. Its ability to run large models with constrained resources makes it a promising direction for future work in NLP and the broader AI community.