SoundStorm: Efficient Parallel Audio Generation

The paper "SoundStorm: Efficient Parallel Audio Generation" introduces a non-autoregressive model for efficient, high-quality audio generation. SoundStorm takes the semantic tokens of AudioLM as input and uses bidirectional attention together with confidence-based parallel decoding to produce the tokens of a neural audio codec. Compared with AudioLM's autoregressive generation, the method is two orders of magnitude faster while maintaining audio quality and consistency.

Overview

SoundStorm addresses the computational cost of modeling high-rate discrete audio representations. Higher audio quality requires a higher token rate, which means either a much larger codebook or much longer token sequences. Long sequences are a problem for attention-based autoregressive models, whose runtime grows quadratically with sequence length and whose memory use grows accordingly. The paper proposes a non-autoregressive framework that exploits the residual vector quantization (RVQ) structure of the audio tokens to improve the trade-off between perceptual quality and runtime.
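The scaling argument above can be made concrete with a small calculation. This is a rough sketch: the frame rate and number of RVQ levels below are illustrative assumptions and are not stated in this summary. It compares the number of attention interactions when every RVQ token gets its own sequence position with the number when there is one position per codec frame.

```python
def attention_cost(seq_len: int) -> int:
    """Pairwise attention interactions for a sequence of this length (O(n^2))."""
    return seq_len * seq_len

# Illustrative values only; this summary does not state them.
FRAMES_PER_SECOND = 50   # assumed codec frame rate
RVQ_LEVELS = 12          # assumed number of residual quantizer levels
SECONDS = 30

frames = FRAMES_PER_SECOND * SECONDS   # one position per codec frame
flattened = frames * RVQ_LEVELS        # one position per individual token

ratio = attention_cost(flattened) // attention_cost(frames)
print(f"frame-level positions: {frames}")
print(f"flattened positions:   {flattened}")
print(f"attention cost ratio:  {ratio}x")
```

Under these assumptions, flattening all RVQ tokens into one sequence multiplies the attention cost by the square of the number of levels, which is why a model that operates at the frame level has an advantage on long audio.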

The foundational elements of SoundStorm include:

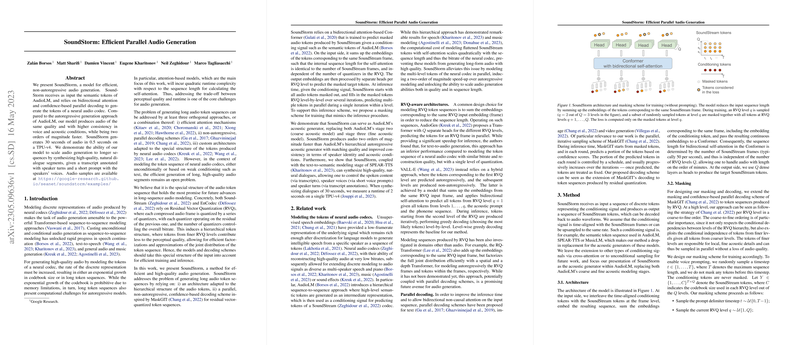

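- Bidirectional Attention: Because every position can attend to every other position, the model can predict many audio tokens in a single forward pass instead of one token at a time, which significantly reduces generation time compared to unidirectional, autoregressive decoding.

- Parallel Decoding Scheme: Drawing inspiration from MaskGIT, the model uses a confidence-based parallel decoding scheme. Generation starts with all audio tokens masked; in each iteration the model predicts the masked tokens, keeps the predictions it is most confident about, and re-masks the rest. The scheme is adapted to the hierarchical structure of the RVQ levels, filling tokens from coarse levels to fine ones.

- Architecture Design: The architecture is tailored to the hierarchical token structure. The embeddings of the tokens that belong to the same codec frame are combined into a single input, and the model predicts the tokens of the different RVQ levels from the shared frame-level representation. As a result, the sequence length seen by the attention layers depends on the number of frames rather than on the number of RVQ levels, which keeps the model efficient on longer audio sequences.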
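The decoding scheme described in the list above can be sketched in a few lines of plain Python. This is a minimal illustration of MaskGIT-style confidence-based unmasking applied level by level, not the authors' implementation. The names `dummy_model`, `decode_level`, and `generate` are hypothetical; the "model" returns random probabilities instead of a trained network's output; and the cosine masking schedule, the greedy token choice, and the iteration counts are assumptions made for the example.

```python
import math
import random

MASK = -1  # sentinel for a position that has not been decoded yet

def dummy_model(tokens, level, codebook_size, rng):
    """Hypothetical stand-in for the trained network.

    Returns one probability distribution over the codebook per frame.
    A real model would condition on `tokens` and on the semantic tokens.
    """
    probs = []
    for _ in tokens[level]:
        weights = [rng.random() for _ in range(codebook_size)]
        total = sum(weights)
        probs.append([w / total for w in weights])
    return probs

def decode_level(tokens, level, n_iters, codebook_size, rng):
    """Fill one RVQ level by iterative, confidence-based unmasking."""
    n_frames = len(tokens[level])
    for step in range(1, n_iters + 1):
        masked = [t for t in range(n_frames) if tokens[level][t] == MASK]
        if not masked:
            break
        probs = dummy_model(tokens, level, codebook_size, rng)
        # Greedy candidate token and its confidence for every masked frame.
        candidates = []
        for t in masked:
            best = max(range(codebook_size), key=lambda k: probs[t][k])
            candidates.append((probs[t][best], t, best))
        # Assumed cosine schedule: frames that stay masked after this step.
        keep_masked = int(n_frames * math.cos(math.pi / 2 * step / n_iters))
        n_commit = max(1, len(masked) - keep_masked)
        # Commit the most confident predictions; the rest are retried later.
        candidates.sort(reverse=True)
        for _, t, token in candidates[:n_commit]:
            tokens[level][t] = token
    return tokens

def generate(n_frames, n_levels, iters_per_level, codebook_size=8, seed=0):
    """Decode all RVQ levels from coarse (level 0) to fine."""
    rng = random.Random(seed)
    tokens = [[MASK] * n_frames for _ in range(n_levels)]
    for level in range(n_levels):
        decode_level(tokens, level, iters_per_level[level], codebook_size, rng)
    return tokens

tokens = generate(n_frames=20, n_levels=3, iters_per_level=[8, 1, 1])
print(tokens[0])
```

The number of forward passes is the sum of `iters_per_level` (10 in this example), regardless of how many frames are generated. An autoregressive decoder would instead need one pass per token, which is where the speed advantage comes from.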

Experimental Evaluation

Experiments outlined in the paper demonstrate that SoundStorm effectively matches the audio quality of existing autoregressive models while outperforming them in runtime. Highlights of the model's performance include:

- Speed: SoundStorm generates 30 seconds of audio in 0.5 seconds on a TPU-v4, which is substantially faster than comparable autoregressive models.

- Speech Intelligibility and Consistency: The model improves speech intelligibility (as measured by WER and CER) and better preserves the speaker's voice and the acoustic conditions over extended durations, which indicates that it generates coherent audio over time.

- Dialogue Synthesis: By combining SoundStorm with a text-to-semantic model, the authors successfully scale text-to-speech synthesis for natural, multi-speaker dialogues, thereby illustrating the practical applicability of the system.
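To put the reported speed figure in perspective, it can be expressed as a speed-up over real time. The helper name `real_time_speedup` is made up for this example; the two numbers are the ones quoted in the list above.

```python
def real_time_speedup(audio_seconds: float, compute_seconds: float) -> float:
    """Seconds of audio produced per second of computation."""
    return audio_seconds / compute_seconds

# Figures quoted above: 30 s of audio generated in 0.5 s on a TPU-v4.
speedup = real_time_speedup(audio_seconds=30.0, compute_seconds=0.5)
print(f"{speedup:.0f}x faster than real time")
```

At this rate, generating audio takes far less time than playing it back, which is what makes interactive uses such as dialogue synthesis plausible.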

Implications and Future Directions

The efficient parallel architecture of SoundStorm points towards potential advancements in audio generation tasks, particularly for applications requiring fast and scalable solutions such as real-time dialogue synthesis, voice cloning for accessibility tools, and multimedia content production. The system's ability to integrate seamlessly with existing semantic token pipelines, like those of AudioLM, SPEAR-TTS, and MusicLM, further enhances its utility across various audio generation contexts.

While SoundStorm achieves excellent results, future research could explore expanding its application to broader types of audio content beyond speech, such as environmental sounds or musical compositions. Additionally, the continued development of robust ethical frameworks to mitigate misuse of such technologies is crucial, emphasizing the importance of secure and responsible deployment of voice generation systems.

In conclusion, SoundStorm represents a significant step forward in efficient audio generation. Its blend of parallelism, structured token modeling, and capability to handle extended audio sequences offers considerable implications for the evolution of audio synthesis technologies and their applications in artificial intelligence.