Distilling Step-by-Step: Enhancing NLP Models with Reduced Data and Model Sizes

The paper "Distilling Step-by-Step! Outperforming Larger LLMs with Less Training Data and Smaller Model Sizes" addresses a central challenge in NLP: large language models (LLMs) are inefficient to deploy. Their memory footprint and compute requirements make them impractical for many real-world applications. Smaller, task-specific models are a viable alternative, but they have traditionally needed large amounts of training data, whether for finetuning or for distillation, to reach comparable performance.

Key Contributions

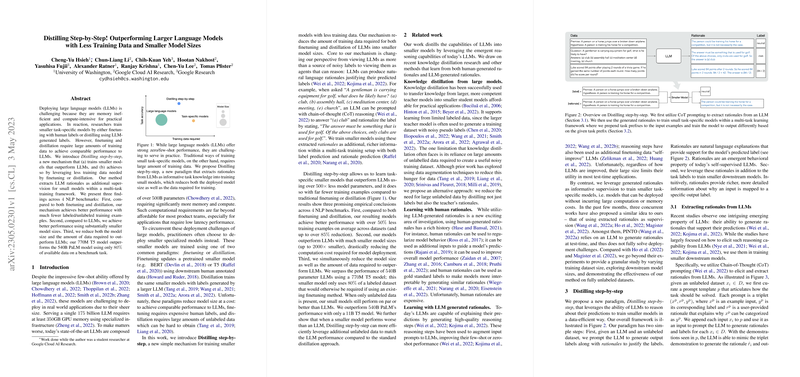

The authors introduce a mechanism called "Distilling step-by-step," which reduces both the amount of training data and the model size needed for finetuning or distillation. The method uses LLMs to generate rationales (natural-language explanations that accompany predictions) and treats them as additional supervision in a multi-task training framework: the small model is trained to predict the label and, as a separate task, to generate the rationale. With this extra supervision, smaller models can be trained to outperform LLMs using only a fraction of the data and parameters.
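The multi-task setup can be sketched roughly as follows. Each training example becomes two text-to-text pairs, one asking for the label and one asking for the rationale, and the two task losses are combined with a weight. This is a minimal illustration, not the authors' code: the prefix strings, the `lam` weight, the sample sentence, and all function names are assumptions, and the per-task loss values stand in for whatever sequence loss the student model reports.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Example:
    text: str        # task input, e.g. a premise/hypothesis pair
    label: str       # task label (human-annotated or LLM-predicted)
    rationale: str   # rationale generated by the LLM for this input


# Hypothetical task prefixes; the exact strings are an assumption.
LABEL_PREFIX = "[label] "
RATIONALE_PREFIX = "[rationale] "


def to_multitask_pairs(ex: Example) -> List[Tuple[str, str]]:
    """Turn one example into two (source, target) pairs, one per task."""
    return [
        (LABEL_PREFIX + ex.text, ex.label),
        (RATIONALE_PREFIX + ex.text, ex.rationale),
    ]


def multitask_loss(label_loss: float, rationale_loss: float, lam: float = 1.0) -> float:
    """Combine the two task losses: L = L_label + lam * L_rationale."""
    return label_loss + lam * rationale_loss


# Made-up example, for illustration only.
example = Example(
    text="premise: A man plays guitar. hypothesis: A person makes music.",
    label="entailment",
    rationale="Playing guitar is a way of making music.",
)
pairs = to_multitask_pairs(example)
for source, target in pairs:
    print(source, "->", target)

# Placeholder loss values; a real student model would supply these.
print(multitask_loss(label_loss=0.5, rationale_loss=0.25, lam=1.0))
```

Because the rationale is a separate training target rather than part of the input, the student only needs the label prefix at inference time, so no rationale has to be generated at deployment.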

Experimental Outcomes

The paper evaluates the approach on four NLP benchmarks: e-SNLI, ANLI, CQA, and SVAMP. The main findings are:

- Data Efficiency: The method outperformed standard finetuning while using over 50% fewer training examples on average. For instance, on the e-SNLI dataset it surpassed standard finetuning on the full dataset using only 12.5% of the data.

- Model Efficiency: Distilling step-by-step enabled models far smaller than LLMs to outperform them. A finetuned 770M-parameter T5 model exceeded the performance of a few-shot prompted 540B-parameter PaLM model using only 80% of the available data on ANLI.

- Comparison with Traditional Methods: Compared to both standard finetuning and standard distillation, the new strategy improved results consistently across all four datasets while lowering both the amount of training data needed and the computational cost.
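To put the figures above in perspective, the short calculation below works out the size gap between the two models and what a 12.5% subset amounts to. The parameter counts are the ones quoted above; the `subset_size` helper and the 100,000-example dataset size are hypothetical and used only for illustration.

```python
PALM_PARAMS = 540e9   # 540B-parameter PaLM, as quoted above
T5_PARAMS = 770e6     # 770M-parameter T5, as quoted above

# How many times larger the LLM is than the distilled student.
size_ratio = PALM_PARAMS / T5_PARAMS
print(f"PaLM is about {size_ratio:.0f}x larger than the T5 student")


def subset_size(total_examples: int, fraction: float) -> int:
    """Number of examples in a given fraction of a dataset (rounded down)."""
    return int(total_examples * fraction)


# 100_000 is a hypothetical dataset size, not a figure from the paper.
print(subset_size(100_000, 0.125))  # 12500
```

The ratio comes out at roughly 700x, which is the scale of the reduction in parameters that the model-efficiency result refers to.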

Implications and Future Directions

From a practical standpoint, the implications of this work are substantial. By reducing the dependency on large-scale datasets and massive computational infrastructure, the approach makes advanced NLP capabilities more accessible. Organizations with limited resources can deploy high-performing models without heavy investment in hardware or in large amounts of annotated data.

On the theoretical side, the work advances our understanding of knowledge distillation and shows that LLM-generated rationales are a valuable source of training signal. Future research could apply these techniques in other domains and improve the quality of the extracted rationales.

There is also potential to extend the method to other complex NLP tasks and to use the resulting smaller models in resource-constrained environments. Because the framework does not depend on a particular LLM, it can be tested with different teacher models and student architectures to further validate its robustness.

Conclusion

Distilling step-by-step offers a compelling strategy for improving NLP model efficiency and a pragmatic way to address the computational cost of deploying current LLMs. It marks a step toward more sustainable, scalable, and accessible AI applications.