An Overview of TALLRec: Aligning LLMs with Recommendation Tasks

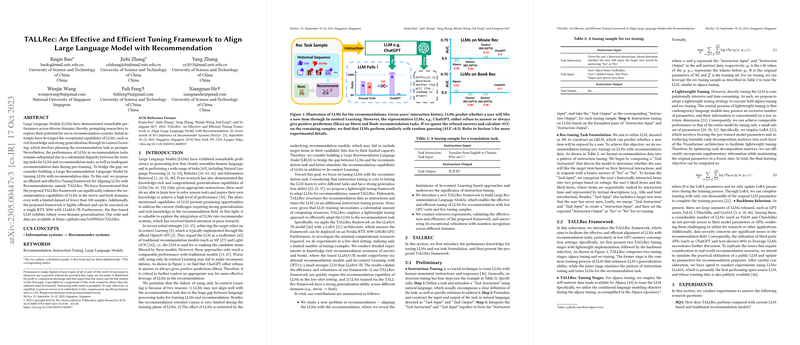

The paper “TALLRec: An Effective and Efficient Tuning Framework to Align LLM with Recommendation” explores how large language models (LLMs) can be integrated into recommendation systems. LLMs are proficient at generating human-like text and handle a wide variety of language tasks. Despite these capabilities, adapting them to recommendation remains difficult, because the tasks LLMs are trained on differ substantially from what recommendation systems require. The authors propose TALLRec, a tuning framework designed to close this gap and improve LLM performance on recommendation tasks.

The paper's primary contribution is a lightweight tuning framework that adapts LLMs to movie and book recommendation while remaining computationally efficient. Because the tuned models also transfer between these domains, the framework offers a potential route to cross-domain recommendation. TALLRec delivers notable improvements in recommendation performance even when tuned on fewer than 100 training samples. These results were obtained with LLaMA-7B on a single RTX 3090 GPU, underscoring the approach's practicality in resource-constrained environments.
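A rough back-of-envelope estimate suggests why a 7B-parameter model can be tuned on a single RTX 3090, which has 24 GB of memory. The sketch below assumes about 6.74 billion parameters for LLaMA-7B, the common rule of thumb of about 16 bytes per parameter for mixed-precision Adam training, 16-bit frozen weights, and a hypothetical lightweight adapter (such as LoRA) with about 4.2 million trainable parameters. It ignores activations and framework overhead, so the numbers are illustrative, not measurements from the paper.

```python
# Back-of-envelope GPU memory estimate. All inputs are assumptions, not figures
# reported in the paper; activations and framework overhead are ignored.
GIB = 1024 ** 3
RTX_3090_MEMORY_GIB = 24  # the RTX 3090 has 24 GB of on-board memory

LLAMA_7B_PARAMS = 6.74e9         # approximate parameter count of LLaMA-7B
ADAPTER_PARAMS = 4_194_304       # hypothetical: rank-8 adapters on two 4096x4096 projections in 32 layers
ADAM_MIXED_PRECISION_BYTES = 16  # fp16 weight + fp16 grad + fp32 master copy, momentum, variance
FROZEN_FP16_BYTES = 2            # a frozen fp16 weight needs no gradient or optimizer state


def full_finetune_gib(num_params: float) -> float:
    """Every parameter is trained, so every parameter carries the full Adam state."""
    return num_params * ADAM_MIXED_PRECISION_BYTES / GIB


def adapter_finetune_gib(num_params: float, num_trainable: float) -> float:
    """Frozen base weights are stored once; only the adapter carries training state."""
    return (num_params * FROZEN_FP16_BYTES
            + num_trainable * ADAM_MIXED_PRECISION_BYTES) / GIB


full = full_finetune_gib(LLAMA_7B_PARAMS)
light = adapter_finetune_gib(LLAMA_7B_PARAMS, ADAPTER_PARAMS)
print(f"full fine-tuning:    ~{full:.0f} GiB (fits on the card: {full < RTX_3090_MEMORY_GIB})")
print(f"adapter fine-tuning: ~{light:.1f} GiB (fits on the card: {light < RTX_3090_MEMORY_GIB})")
```

On these assumptions, full fine-tuning needs roughly 100 GiB before activations, while training only a small adapter brings the total to roughly 12.6 GiB, which fits within the card's 24 GB.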

Key Contributions

- Identification of Gaps in Existing LLMs for Recommendation: The authors find a significant performance gap when LLMs are applied directly to recommendation tasks through techniques such as in-context learning. They attribute this gap to the mismatch between the tasks used to train LLMs and recommendation tasks, and to the lack of recommendation-oriented data during pre-training.

- The TALLRec Framework: TALLRec involves two main tuning stages:
  - Alpaca Tuning: This stage employs self-instruct data to enhance LLMs' generalization abilities for better adaptability to new tasks.
  - Rec-tuning: Instruction tuning is leveraged to align LLMs specifically with recommendation tasks by tuning on recommendation data.

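- Implementation of Lightweight Tuning: The framework uses LoRA (Low-Rank Adaptation), which freezes the pretrained weights and trains only small low-rank matrices added to selected layers. Because only a small fraction of the parameters is updated, tuning is much cheaper in memory and compute while still yielding strong recommendation performance.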

- Performance Evaluation: In few-shot settings, TALLRec outperforms both traditional recommendation methods and existing LLM-based approaches. It also generalizes well across domains: a model tuned on one domain, such as movies, achieves comparable performance on another, such as books.
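The Rec-tuning stage listed above recasts recommendation as an instruction-following task. Below is a minimal sketch of how one training sample might be built, assuming the widely used Alpaca prompt template and a binary like/dislike target answered with "Yes" or "No". The template wording, the `Interaction` record, and `build_rec_tuning_sample` are illustrative assumptions, not TALLRec's released code.

```python
from __future__ import annotations

from dataclasses import dataclass

# Alpaca-style prompt template (assumed here; TALLRec's exact wording may differ).
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n"
)


@dataclass
class Interaction:
    """Hypothetical user-history record: an item title and whether the user liked it."""
    title: str
    liked: bool


def build_rec_tuning_sample(history: list[Interaction], target: str, label: bool,
                            item_kind: str = "movie") -> dict[str, str]:
    """Turn a user's history and a target item into an instruction-tuning (prompt, response) pair."""
    liked = ", ".join(i.title for i in history if i.liked) or "None"
    disliked = ", ".join(i.title for i in history if not i.liked) or "None"
    instruction = (
        "Given the user's historical interactions, please determine whether the "
        f'user will enjoy the target new {item_kind} by answering "Yes" or "No".'
    )
    user_input = (
        f"User's liked items: {liked}.\n"
        f"User's disliked items: {disliked}.\n"
        f"Target new {item_kind}: {target}"
    )
    return {
        "prompt": ALPACA_TEMPLATE.format(instruction=instruction, input=user_input),
        "response": "Yes." if label else "No.",
    }


# Usage: one movie-domain sample with a negative label.
sample = build_rec_tuning_sample(
    [Interaction("The Godfather", True), Interaction("Star Wars", False)],
    target="Iron Man",
    label=False,
)
print(sample["prompt"] + sample["response"])
```

In this sketch, the same template could also wrap the general self-instruct data used during Alpaca tuning, so both stages share one input format, and the `item_kind` parameter lets the same builder produce book-domain samples.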
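The LoRA-based lightweight tuning listed above can be illustrated with a minimal NumPy sketch of the standard LoRA formulation: a frozen weight matrix W plus a trainable low-rank product B·A scaled by alpha/r. It covers only the forward pass and parameter accounting, not training. The class and helper names, the rank r = 8, alpha = 16, and the choice to adapt the query and value projections of a 32-layer, 4096-wide model are illustrative assumptions (common defaults in open-source LoRA tooling), not settings confirmed by this overview.

```python
import numpy as np


class LoRALinear:
    """Frozen linear layer W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, d_in: int, d_out: int, r: int = 8, alpha: float = 16.0, seed: int = 0):
        rng = np.random.default_rng(seed)
        # Stand-in for a pretrained weight; it stays frozen during tuning.
        self.W = rng.standard_normal((d_out, d_in)) / np.sqrt(d_in)
        # Trainable low-rank factors. B starts at zero, so before any training
        # the adapted layer produces exactly the same output as the frozen one.
        self.A = rng.standard_normal((r, d_in)) * 0.01
        self.B = np.zeros((d_out, r))
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, d_in) -> (batch, d_out); the update never builds a d_out x d_in matrix.
        return x @ self.W.T + self.scale * (x @ self.A.T) @ self.B.T

    def trainable_params(self) -> int:
        return self.A.size + self.B.size

    def frozen_params(self) -> int:
        return self.W.size


def lora_param_count(num_layers: int, hidden: int, r: int, adapted_per_layer: int) -> int:
    """Trainable parameters when each adapted hidden x hidden projection gets A (r x hidden) and B (hidden x r)."""
    return num_layers * adapted_per_layer * 2 * r * hidden


# Toy layer: the zero-initialised B means the adapter is initially a no-op.
layer = LoRALinear(d_in=64, d_out=64, r=4)
x = np.ones((2, 64))
assert np.allclose(layer(x), x @ layer.W.T)
print("toy layer trainable/frozen:", layer.trainable_params(), layer.frozen_params())

# Hypothetical LLaMA-7B-like setting: 32 layers, hidden size 4096, rank 8,
# adapters on the query and value projections only.
trainable = lora_param_count(num_layers=32, hidden=4096, r=8, adapted_per_layer=2)
print(f"trainable: {trainable:,} ({trainable / 6.74e9:.3%} of ~6.74B parameters)")
```

Under these assumptions the adapter has 4,194,304 trainable parameters, roughly 0.06% of the base model, which is why gradients and optimizer state stay small. Adapting more projections or raising r grows this count linearly.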

Implications and Future Directions

The implications of this research are both theoretical and practical. Theoretically, the work suggests that aligning LLMs with domain-specific tasks through dedicated tuning frameworks can substantially improve model performance, which should encourage further research on domain adaptation of LLMs. Practically, TALLRec offers a computationally efficient method that can be deployed with limited resources, making it accessible for a wide range of applications across domains.

Future work could extend the framework to make fuller use of textual data and other contextual modalities in recommendation systems. Exploring more complex models and more robust datasets could also give a more complete picture of the capabilities and limitations of LLMs for recommendation. Finally, the promising cross-domain results point toward multi-domain recommendation systems that integrate diverse user preferences seamlessly.

In summary, by bringing LLMs into recommendation scenarios through the TALLRec framework, this work offers a structured approach to exploiting their capabilities and serves as an important stepping stone for future research on improving recommendation systems with advanced machine learning techniques.