Evaluating the Logical Reasoning Ability of ChatGPT and GPT-4

The paper examines the logical reasoning ability of two Generative Pre-trained Transformer (GPT) models, ChatGPT and GPT-4. Logical reasoning, a core component of human intelligence, remains a formidable challenge for Natural Language Understanding (NLU). Despite ongoing progress, building reliable logical reasoning into large language models (LLMs) is still difficult, owing to factors such as linguistic ambiguity, scalability, and the complexity of real-world scenarios.

Experiment Overview

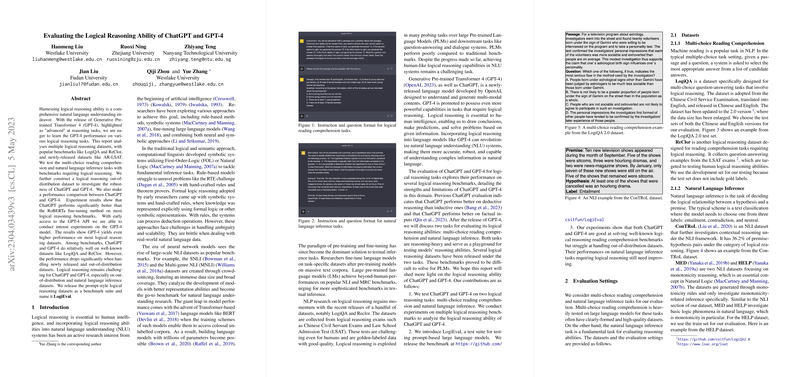

The authors evaluate ChatGPT and GPT-4 on a range of established and newly released datasets, grouped into two task categories: multi-choice reading comprehension and natural language inference (NLI). The benchmarks include LogiQA, ReClor, AR-LSAT, ConTRoL, MED, HELP, and TaxiNLI.

- Multi-choice Reading Comprehension: Given a passage and a question, the model must select the correct answer from a fixed set of options. Datasets such as LogiQA and ReClor draw their questions from standardized exams (the Chinese Civil Service Examination for LogiQA, the GMAT and LSAT for ReClor) and require multi-step logical inference rather than simple fact lookup.

- Natural Language Inference: Given a premise and a hypothesis, the model must classify their relationship as entailment, contradiction, or neutral. Benchmarks such as ConTRoL and TaxiNLI are used for this task.
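To make the two task formats concrete, here is a minimal Python sketch of how an item of each type might be turned into a zero-shot prompt and how a free-text reply might be mapped back to an answer. The class and function names (`MRCItem`, `NLIItem`, `build_mrc_prompt`, `build_nli_prompt`, `parse_choice`, `parse_nli_label`) and the prompt wording are hypothetical; they are not the paper's templates or the datasets' actual schemas.

```python
from __future__ import annotations

import re
from dataclasses import dataclass

LETTERS = "ABCDEFGH"
NLI_LABELS = ("entailment", "contradiction", "neutral")


@dataclass
class MRCItem:
    """Hypothetical multi-choice reading comprehension item (not a real dataset schema)."""
    passage: str
    question: str
    options: list[str]
    answer: int  # index of the correct option


@dataclass
class NLIItem:
    """Hypothetical NLI item; label is one of NLI_LABELS."""
    premise: str
    hypothesis: str
    label: str


def build_mrc_prompt(item: MRCItem) -> str:
    """Passage, question, then lettered options, ending with a format instruction."""
    lines = [f"Passage: {item.passage}", f"Question: {item.question}"]
    lines += [f"{LETTERS[i]}. {opt}" for i, opt in enumerate(item.options)]
    lines.append("Answer with a single letter.")
    return "\n".join(lines)


def build_nli_prompt(item: NLIItem) -> str:
    """Premise and hypothesis, asking for exactly one of the three labels."""
    return (
        f"Premise: {item.premise}\n"
        f"Hypothesis: {item.hypothesis}\n"
        "Answer with one word: entailment, contradiction, or neutral."
    )


def parse_choice(reply: str, n_options: int) -> int | None:
    """Index of the first standalone capital option letter in the reply, else None."""
    valid = LETTERS[:n_options]
    match = re.search(rf"\b([{valid}])\b", reply)
    return valid.index(match.group(1)) if match else None


def parse_nli_label(reply: str) -> str | None:
    """Earliest NLI label mentioned in the reply (case-insensitive), else None."""
    text = reply.lower()
    hits = [(text.find(label), label) for label in NLI_LABELS if label in text]
    return min(hits)[1] if hits else None


# Illustrative item (modus tollens); invented for this sketch.
item = MRCItem(
    passage="If it rains, the match is cancelled. The match was not cancelled.",
    question="Which of the following must be true?",
    options=["It rained.", "It did not rain.", "The match was postponed.", "None of the above."],
    answer=1,
)
print(build_mrc_prompt(item))
print(parse_choice("The answer is B.", len(item.options)) == item.answer)  # True
```

The letter parser is deliberately naive: it would, for instance, misread a reply that begins with the article "A". A real evaluation harness would typically constrain the output format more tightly.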

Experimental Findings

Performance Analysis:

- Performance Superiority:
  - Both ChatGPT and GPT-4 outperform traditional models such as RoBERTa on several logical reasoning datasets. GPT-4 is the strongest on well-known benchmarks, reaching roughly 72% accuracy on LogiQA and up to 87% on ReClor.

- Challenges on New and Out-of-Distribution Data:
  - Both models struggle considerably on out-of-distribution datasets. The drop in performance is pronounced: ChatGPT and GPT-4 score much lower on AR-LSAT and on newly collected LogiQA data. This points to a generalization problem, since performance on familiar benchmarks does not carry over to unfamiliar ones.
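Because the results above are reported as accuracies, the generalization problem can be expressed as the gap between in-distribution and out-of-distribution accuracy. The sketch below assumes predictions have already been parsed into option indices or labels, with `None` for unparseable replies; the function names `accuracy` and `generalization_gap` and the toy labels are hypothetical and are not data from the paper.

```python
def accuracy(predictions: list, gold: list) -> float:
    """Fraction of items whose parsed prediction equals the gold answer.

    Unparseable replies are represented as None and always count as wrong.
    """
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(p is not None and p == g for p, g in zip(predictions, gold))
    return correct / len(gold)


def generalization_gap(in_dist_acc: float, ood_acc: float) -> float:
    """Absolute accuracy drop from in-distribution to out-of-distribution data."""
    return in_dist_acc - ood_acc


# Toy labels invented purely for illustration; not results from the paper.
gold = [0, 1, 2, 0]
in_dist_acc = accuracy([0, 1, 2, 3], gold)  # 3 of 4 correct
ood_acc = accuracy([1, None, 2, 3], gold)   # 1 of 4 correct
print(f"gap = {generalization_gap(in_dist_acc, ood_acc):.2f}")  # gap = 0.50
```

Counting unparseable replies as wrong is one design choice among several; an evaluation could instead report them separately, which would change the accuracy figures.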

Technical Insights:

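- Logical and Scope Errors:
  - An analysis of GPT-4's mistakes identifies recurring error types, including logical inconsistencies and scope errors (for example, misjudging how far a quantifier, negation, or condition extends in a sentence). These errors show that LLMs still struggle to draw inferences precisely and to respect fine-grained differences in how a statement is phrased.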

- In-Context Learning and Chain-of-Thought Prompting:
  - In-context learning, which places a few solved examples in the prompt, improves performance by letting the model adapt to the task without any parameter updates. Chain-of-thought (CoT) prompting, which asks the model to write out intermediate reasoning steps before giving its answer, also improves performance, suggesting one way to strengthen the models' reasoning.
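The difference between plain few-shot prompting and chain-of-thought prompting can be illustrated with a small prompt builder. This is a sketch under assumptions: the `Demo` class, `build_prompt`, and the "Question / Reasoning / Answer" template are hypothetical and are not the prompts used in the paper.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Demo:
    """One solved example shown to the model before the test question (hypothetical format)."""
    question: str
    reasoning: str  # written-out intermediate steps, shown only when CoT is enabled
    answer: str


def build_prompt(demos: list[Demo], question: str, chain_of_thought: bool) -> str:
    """Concatenate in-context demonstrations, then the test question with an answer cue."""
    parts = []
    for d in demos:
        block = f"Question: {d.question}\n"
        if chain_of_thought:
            block += f"Reasoning: {d.reasoning}\n"
        block += f"Answer: {d.answer}"
        parts.append(block)
    # With CoT the model is invited to reason first; otherwise it answers directly.
    cue = "Reasoning:" if chain_of_thought else "Answer:"
    parts.append(f"Question: {question}\n{cue}")
    return "\n\n".join(parts)


demo = Demo(
    question="All birds have feathers. Tweety is a bird. Does Tweety have feathers?",
    reasoning="Every bird has feathers and Tweety is a bird, so Tweety has feathers.",
    answer="Yes",
)
print(build_prompt([demo], "No cats are reptiles. Tom is a cat. Is Tom a reptile?", chain_of_thought=True))
```

With an empty `demos` list the same function produces a zero-shot prompt, so one builder covers zero-shot, few-shot, and CoT variants.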

Implications and Future Directions

The findings show that LLMs such as GPT-4 have made significant progress on logical reasoning tasks. However, their weaker performance on out-of-distribution data marks a crucial area for further research. Future work should aim to give models reasoning skills that generalize, for example through exposure to more diverse datasets and more advanced reasoning methods.

Looking forward, more sophisticated training regimes and harder benchmarks will be needed to challenge these models further and to move toward robust NLU systems capable of genuinely human-like logical reasoning.