DoctorGLM: Fine-tuning a Chinese Medical LLM

The paper "DoctorGLM: Fine-tuning your Chinese Doctor is not a Herculean Task" examines how to adapt LLMs such as ChatGPT to the medical domain in non-English languages, specifically Chinese. It presents DoctorGLM, a fine-tuned LLM built with an emphasis on affordability and customizability in a healthcare context.

LLMs like ChatGPT and GPT-4 have demonstrated significant capabilities in language processing; however, they are not specialized for medical contexts, which can lead to inaccurate diagnoses and medical advice. Furthermore, these models perform best in English, which presents a barrier for non-English speakers. DoctorGLM aims to mitigate both issues with a Chinese medical dialogue model trained on medical dialogue datasets.

Approach

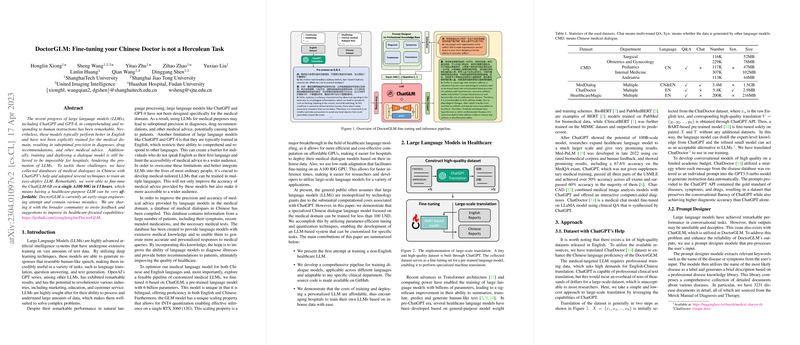

DoctorGLM was fine-tuned from ChatGLM-6B, a bilingual LLM optimized for both Chinese and English, on a single A100 80G GPU in 13 hours. The run required a relatively low financial investment, which suggests that training specialized LLMs is feasible within the budget constraints of healthcare institutions.
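The cost argument reduces to simple arithmetic: GPU hours multiplied by an hourly rental rate. The sketch below illustrates this for the 13-hour, single-GPU run described above. The hourly rates are illustrative assumptions, not figures from the paper, and the function name `training_cost` is hypothetical.

```python
def training_cost(gpu_hours: float, hourly_rate_usd: float, num_gpus: int = 1) -> float:
    """Return the rental cost in USD of a fine-tuning run."""
    if gpu_hours < 0 or hourly_rate_usd < 0 or num_gpus < 1:
        raise ValueError("hours and rate must be non-negative, num_gpus at least 1")
    return gpu_hours * hourly_rate_usd * num_gpus


if __name__ == "__main__":
    # 13 hours on one A100 80G comes from the text above.
    # The hourly rates are assumed placeholders; real cloud prices vary.
    for rate in (1.5, 2.5, 4.0):
        cost = training_cost(gpu_hours=13, hourly_rate_usd=rate)
        print(f"rate ${rate:.2f}/h -> total ${cost:.2f}")
```

Even at the highest assumed rate, the total stays in the tens of dollars, which is the order of magnitude behind the affordability claim.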

The authors combine several techniques into a complete pipeline for training dialogue models. A key contribution is the use of ChatGPT to build a high-quality dataset by translating existing English medical datasets into Chinese, which significantly reduces the cost of dataset curation.
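A translation step of this kind can be sketched as a function that maps each English question-answer record to a Chinese one through a pluggable translator. This is a minimal sketch under stated assumptions, not the authors' pipeline: the record fields (`question`, `answer`), the prompt wording, and the `fake_translate` stand-in are all hypothetical. In practice the translator would wrap a call to a chat model.

```python
from typing import Callable, Dict, Iterable, List

# A translator takes a full prompt string and returns the model's reply.
Translator = Callable[[str], str]


def build_translation_prompt(text: str) -> str:
    """Wrap source text in a translation instruction (wording is an assumption)."""
    return (
        "Translate the following medical text into Simplified Chinese. "
        "Keep drug names and dosages unchanged.\n\n" + text
    )


def translate_dialogues(
    records: Iterable[Dict[str, str]], translate: Translator
) -> List[Dict[str, str]]:
    """Translate the question and answer of every record."""
    translated = []
    for record in records:
        translated.append(
            {
                "question": translate(build_translation_prompt(record["question"])),
                "answer": translate(build_translation_prompt(record["answer"])),
            }
        )
    return translated


def fake_translate(prompt: str) -> str:
    """Stand-in translator for offline testing: tags the source text with [zh]."""
    source_text = prompt.split("\n\n", 1)[1]
    return "[zh] " + source_text


if __name__ == "__main__":
    sample = [{"question": "What is flu?", "answer": "A viral infection."}]
    print(translate_dialogues(sample, fake_translate))
```

Keeping the translator as a parameter means the same loop can be tested offline and later pointed at a real model without changes.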

To enhance reliability, the research incorporates a prompt designer to preprocess user inputs, leveraging professional disease libraries for generating contextually accurate prompts. Such preprocessing aims to augment the expertise of the LLM, potentially increasing the accuracy of the medical advice it provides.
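The idea of a prompt designer can be illustrated with a small sketch: look up disease names mentioned in the user's question and prepend the matching library entries as context. This is an assumption-laden illustration rather than the paper's implementation. The library contents, the substring matching, and the prompt layout are all hypothetical.

```python
from typing import Dict, List

# Hypothetical miniature disease library; a real one would be curated by professionals.
DISEASE_LIBRARY: Dict[str, str] = {
    "influenza": "Viral respiratory infection; common symptoms include fever, cough, and muscle aches.",
    "migraine": "Recurrent headache disorder, often with nausea and light sensitivity.",
}


def find_mentions(query: str, library: Dict[str, str]) -> List[str]:
    """Return library keys that appear in the query (case-insensitive substring match)."""
    lowered = query.lower()
    return [name for name in library if name in lowered]


def design_prompt(query: str, library: Dict[str, str]) -> str:
    """Prepend matching library entries to the query; return it unchanged if none match."""
    mentions = find_mentions(query, library)
    if not mentions:
        return query
    context = "\n".join(f"- {name}: {library[name]}" for name in mentions)
    return f"Reference notes:\n{context}\n\nPatient question: {query}"


if __name__ == "__main__":
    print(design_prompt("Could my fever be influenza?", DISEASE_LIBRARY))
```

Substring matching is the simplest possible lookup. A production system would more plausibly use keyword extraction or entity recognition, which this sketch does not attempt.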

Results and Implications

Though DoctorGLM exhibits some limitations, such as occasional loss of output coherence and slower inference times compared to non-specialized LLMs, it offers significant insights into cost-effective LLM training for healthcare. Training and deploying specialized LLMs at a low cost allows hospitals and healthcare providers to tailor models to specific medical needs efficiently.

This research opens discussions about the democratization of AI in healthcare, suggesting a future where medical facilities are not reliant on large technology companies for AI solutions. This could lead to the development of more personalized healthcare solutions that reflect local medical knowledge and linguistic nuances.

Conclusion and Future Directions

DoctorGLM is an early-stage engineering effort, but it sets a promising precedent for fine-tuning general-purpose LLMs for specific use cases. The open-source release of the methodology invites further contributions, potentially leading to improvements in LLM accuracy and efficiency in medical contexts.

Future research may explore reinforcement learning approaches to better align LLM outputs with human medical judgment, improve quantization techniques for more efficient deployment, and refine training processes to prevent capability degradation. These developments could further lower the barrier to entry for medical institutions looking to harness LLMs, leading to widespread adoption and improved patient care outcomes globally.
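To see why quantization matters for deployment, it helps to estimate how much memory the model weights alone occupy at different precisions. The sketch below assumes roughly 6 billion parameters, inferred from the name ChatGLM-6B, and ignores activations, caches, and runtime overhead. The function name `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    if num_params < 0 or bits_per_param <= 0:
        raise ValueError("num_params must be non-negative and bits_per_param positive")
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    params = 6e9  # assumed parameter count, taken from the "6B" in the model name
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: about {weight_memory_gb(params, bits):.1f} GB")
```

Halving the bit width halves the weight footprint, which is what makes lower-precision deployment attractive on smaller GPUs, provided the accuracy loss is acceptable.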

The paper underscores a shift towards more accessible AI technologies in healthcare, aligning LLMs more closely with specialized, practical applications to bridge the gap between general-purpose AI and domain-specific needs.