Analysis of "FastViT: A Fast Hybrid Vision Transformer using Structural Reparameterization"

The paper introduces FastViT, a hybrid vision transformer architecture designed to improve the latency-accuracy trade-off in computer vision tasks. By combining transformer and convolutional designs, FastViT outperforms existing models in speed and efficiency on multiple tasks, including image classification, detection, segmentation, and 3D mesh regression.

Key Contributions

1. Structural Reparameterization with RepMixer:

The paper introduces RepMixer, a token mixing operator that uses structural reparameterization to remove skip connections from the network at inference time. Because a skip connection forces the input activations to be kept and read again for the addition, removing it reduces memory access cost and, in turn, latency. This departs from conventional MetaFormer architectures, which rely heavily on skip connections.
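The idea of folding a skip connection into a convolution can be illustrated with a minimal sketch. It assumes the train-time block has the form "input plus depthwise convolution of the input" and omits batch normalization for brevity, so it is not the paper's exact RepMixer. The helper names (`depthwise_conv2d`, `fold_skip_into_kernels`) and the tensor sizes are hypothetical and chosen only for illustration.

```python
import random

def depthwise_conv2d(x, kernels):
    """Depthwise 'same' cross-correlation with zero padding.

    x: list of C channels, each an H x W list of lists.
    kernels: list of C odd-sized k x k kernels, one per channel.
    """
    out = []
    for channel, kernel in zip(x, kernels):
        h, w, k = len(channel), len(channel[0]), len(kernel)
        pad = k // 2
        result = [[0.0] * w for _ in range(h)]
        for i in range(h):
            for j in range(w):
                acc = 0.0
                for di in range(k):
                    for dj in range(k):
                        ii, jj = i + di - pad, j + dj - pad
                        if 0 <= ii < h and 0 <= jj < w:
                            acc += kernel[di][dj] * channel[ii][jj]
                result[i][j] = acc
        out.append(result)
    return out

def fold_skip_into_kernels(kernels):
    """Return kernels equivalent to 'identity + depthwise conv'."""
    folded = [[row[:] for row in kernel] for kernel in kernels]
    for kernel in folded:
        center = len(kernel) // 2
        # The identity mapping is a unit impulse at the kernel center.
        kernel[center][center] += 1.0
    return folded

def max_abs_diff(a, b):
    return max(abs(p - q)
               for ca, cb in zip(a, b)
               for ra, rb in zip(ca, cb)
               for p, q in zip(ra, rb))

random.seed(0)
C, H, W, K = 2, 5, 5, 3  # illustrative sizes, not taken from the paper
x = [[[random.uniform(-1, 1) for _ in range(W)] for _ in range(H)]
     for _ in range(C)]
kernels = [[[random.uniform(-1, 1) for _ in range(K)] for _ in range(K)]
           for _ in range(C)]

# Train-time form: a skip branch plus a conv branch.
conv_out = depthwise_conv2d(x, kernels)
train_out = [[[x[c][i][j] + conv_out[c][i][j] for j in range(W)]
              for i in range(H)] for c in range(C)]

# Inference-time form: a single conv whose kernels absorb the skip branch.
infer_out = depthwise_conv2d(x, fold_skip_into_kernels(kernels))

reparam_error = max_abs_diff(train_out, infer_out)
print("max difference between the two forms:", reparam_error)
```

The two forms agree up to floating-point rounding because convolution is linear: adding a centered unit impulse to each kernel is the same as adding the input to the output.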

2. Train-time Overparameterization:

FastViT applies overparameterization only during training: the extra branches are merged into a single layer before inference, so they add capacity while the model is trained without adding inference cost. It is used alongside large kernel convolutions and factorized (depthwise plus pointwise) convolutions. The paper reports that these choices have little effect on latency and yield a 0.9% improvement in Top-1 accuracy on ImageNet.
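A minimal sketch of how parallel linear branches can collapse into one kernel is given here. It assumes single-channel branches with no batch normalization, and the branch count and kernel sizes (two 3x3 branches and one 1x1 branch) are illustrative assumptions rather than the paper's configuration. The function names are hypothetical.

```python
import random

def conv2d_same(x, kernel):
    """Single-channel 'same' cross-correlation with zero padding."""
    h, w, k = len(x), len(x[0]), len(kernel)
    pad = k // 2
    return [[sum(kernel[di][dj] * x[i + di - pad][j + dj - pad]
                 for di in range(k) for dj in range(k)
                 if 0 <= i + di - pad < h and 0 <= j + dj - pad < w)
             for j in range(w)] for i in range(h)]

def pad_kernel(kernel, size):
    """Zero-pad a small odd kernel to size x size, keeping it centered."""
    k = len(kernel)
    offset = (size - k) // 2
    padded = [[0.0] * size for _ in range(size)]
    for i in range(k):
        for j in range(k):
            padded[offset + i][offset + j] = kernel[i][j]
    return padded

def merge_branches(branch_kernels, size):
    """Sum all branch kernels into one size x size kernel."""
    merged = [[0.0] * size for _ in range(size)]
    for kernel in branch_kernels:
        padded = pad_kernel(kernel, size)
        for i in range(size):
            for j in range(size):
                merged[i][j] += padded[i][j]
    return merged

random.seed(1)
H, W = 6, 6
x = [[random.uniform(-1, 1) for _ in range(W)] for _ in range(H)]

def rand_kernel(k):
    return [[random.uniform(-1, 1) for _ in range(k)] for _ in range(k)]

# Hypothetical train-time branches: two 3x3 kernels and one 1x1 kernel.
branches = [rand_kernel(3), rand_kernel(3), rand_kernel(1)]

# Train-time output: sum of every branch applied to the same input.
branch_outs = [conv2d_same(x, k) for k in branches]
train_out = [[sum(out[i][j] for out in branch_outs) for j in range(W)]
             for i in range(H)]

# Inference-time output: one convolution with the merged kernel.
merged_kernel = merge_branches(branches, 3)
infer_out = conv2d_same(x, merged_kernel)

merge_error = max(abs(train_out[i][j] - infer_out[i][j])
                  for i in range(H) for j in range(W))
print("max difference after merging branches:", merge_error)
```

The merged layer has the parameter count and cost of a single 3x3 convolution, which is why the extra branches affect training but not inference latency.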

3. Large Kernel Convolutions:

The paper also presents large kernel convolutions as an efficient substitute for self-attention layers in the early network stages. They enlarge the receptive field, improving both accuracy and robustness to corruptions and out-of-distribution samples.
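A rough back-of-the-envelope comparison shows why self-attention is costly at the high resolutions of early stages. The sketch counts only the two attention matrix products (ignoring the linear projections and softmax) and compares them with a depthwise convolution. The token grid (56x56), channel width (64), and kernel size (7) are illustrative assumptions, not figures quoted from the paper.

```python
def attention_macs(num_tokens, dim):
    # Q @ K^T and (attention weights) @ V each cost num_tokens^2 * dim
    # multiply-accumulates; projections and softmax are ignored.
    return 2 * num_tokens ** 2 * dim

def depthwise_conv_macs(num_tokens, dim, kernel_size):
    # Each output element of each channel needs kernel_size^2
    # multiply-accumulates.
    return num_tokens * dim * kernel_size ** 2

tokens = 56 * 56   # assumed early-stage feature map
dim = 64           # assumed channel width
kernel_size = 7    # assumed large kernel

attn = attention_macs(tokens, dim)
conv = depthwise_conv_macs(tokens, dim, kernel_size)
ratio = attn / conv

print("attention MACs:      ", attn)
print("depthwise conv MACs: ", conv)
print("attention / conv:    ", ratio)
```

Under these assumptions the ratio simplifies to 2N / k^2, where N is the number of tokens and k the kernel size, so the gap grows with resolution while the depthwise convolution's cost per token stays fixed.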

Performance and Comparisons

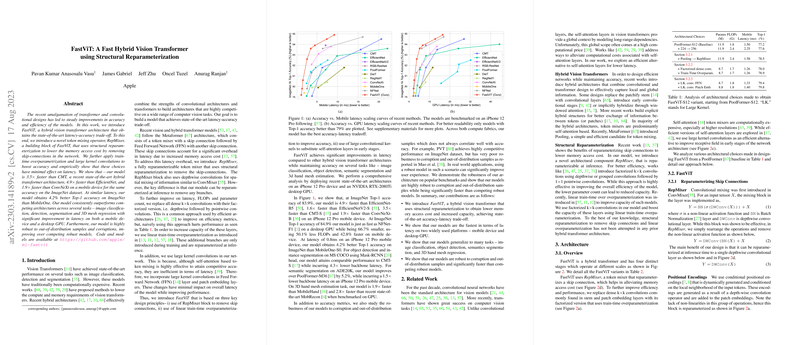

FastViT compares favorably with leading models across several tasks. For instance, it achieves 4.2% higher Top-1 accuracy on ImageNet than MobileOne at similar latency. The experiments also show FastViT to be several times faster than models such as CMT, EfficientNet, and ConvNeXt on mobile devices, without substantial overhead on GPU.

The robustness evaluations indicate that FastViT is resilient to real-world corruptions. It outperforms architectures such as PVT on robustness benchmarks, which supports the value of its large kernel convolutions and hybrid design.

Implications and Future Speculations

This research advances the efficient deployment of vision transformers on mobile and desktop platforms. The structural reparameterization strategy, combined with train-time overparameterization, may inform future architectures that aim to improve efficiency without sacrificing accuracy.

Because computational resources are often a limiting factor, the ideas in FastViT could encourage further work on efficiency-centered design. Its robustness also suggests it could be useful in applications that require high reliability, such as autonomous driving and medical imaging.

Conclusion

FastViT combines convolutional and transformer components in a balanced hybrid design. Through its architectural choices, it achieves state-of-the-art performance across various benchmarks, making a strong case for its adoption in resource-constrained environments. The work improves results on current tasks and lays groundwork for more robust and versatile vision systems.