LERF: Language Embedded Radiance Fields

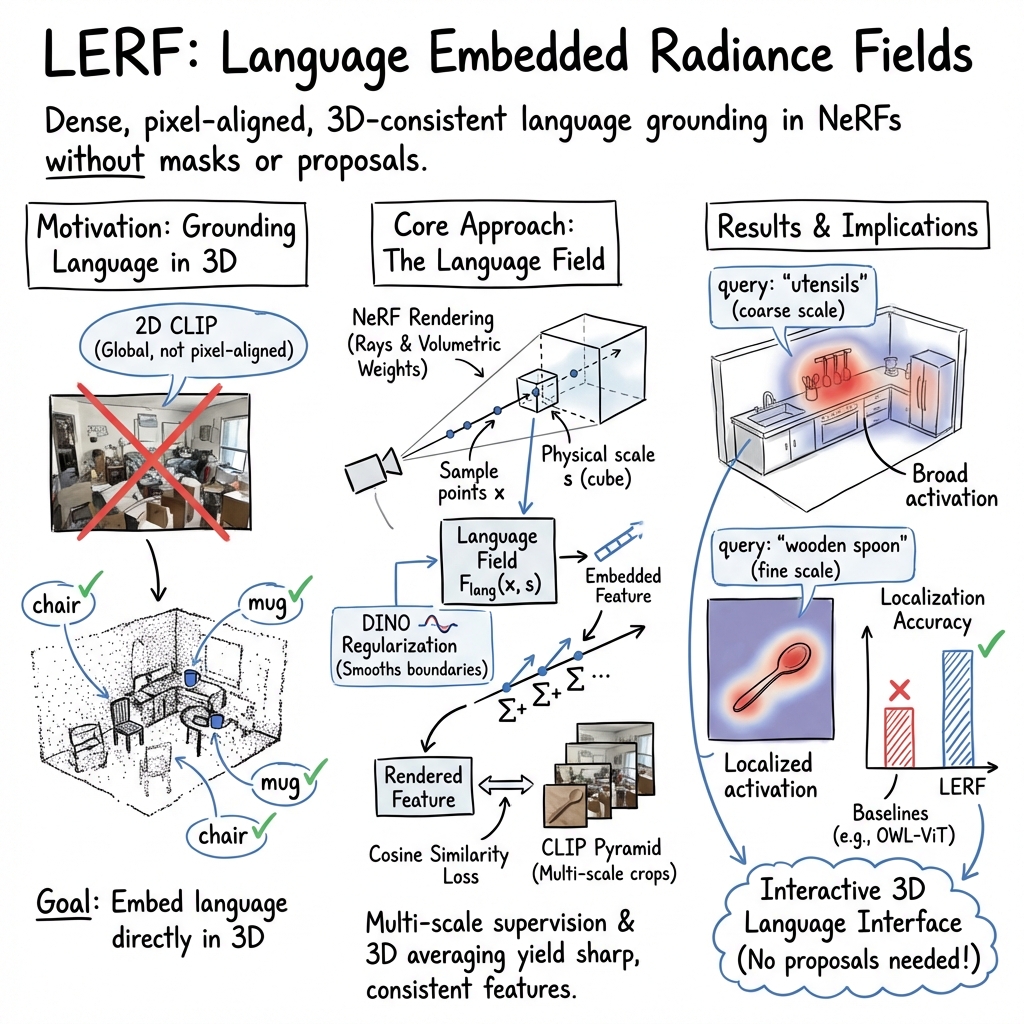

Abstract: Humans describe the physical world using natural language to refer to specific 3D locations based on a vast range of properties: visual appearance, semantics, abstract associations, or actionable affordances. In this work we propose Language Embedded Radiance Fields (LERFs), a method for grounding language embeddings from off-the-shelf models like CLIP into NeRF, which enables these types of open-ended language queries in 3D. LERF learns a dense, multi-scale language field inside NeRF by volume rendering CLIP embeddings along training rays, supervising these embeddings across training views to provide multi-view consistency and smooth the underlying language field. After optimization, LERF can extract 3D relevancy maps for a broad range of language prompts interactively in real time, which has potential use cases in robotics, understanding vision-language models, and interacting with 3D scenes. LERF enables pixel-aligned, zero-shot queries on the distilled 3D CLIP embeddings without relying on region proposals or masks, supporting long-tail open-vocabulary queries hierarchically across the volume. The project website can be found at https://lerf.io.

Explain it Like I'm 14

Overview

This paper introduces LERF, which stands for Language Embedded Radiance Fields. In simple terms, LERF lets you explore a 3D scene using everyday words. Imagine walking around a kitchen with your phone, building a 3D model of it, and then asking the computer “Where are the utensils?” or “Show me something yellow.” LERF connects those words to the right places in the 3D scene and highlights them.

What questions does the paper ask?

- How can we link natural language (like “yellow,” “Waldo,” or “electricity”) to exact locations in a 3D scene?

- Can we make these language-based searches work across many viewpoints and scales (big areas like “kitchen counter” and small details like “fingers”)?

- Can we do this without special training on labeled datasets or relying on extra tools that draw boxes or masks around objects?

How did they do it? Methods explained simply

First, a few key ideas:

- NeRF: Think of a NeRF as a “smart 3D fog” built from many photos. If you look through the fog from any angle, it shows the right colors and shapes, so you can render realistic views of a scene.

- CLIP: CLIP is an AI model that learns how images and text relate. You can give it a word or phrase (like “yellow mug”) and it produces a “text fingerprint.” You can also give it an image or image crop, and it produces a matching “image fingerprint.” Fingerprints that are similar mean the text and image go together.
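To make “similar fingerprints” concrete: CLIP-style embeddings are usually compared with cosine similarity, the dot product of the two vectors after each is scaled to unit length. The minimal sketch below uses made-up 4-dimensional vectors in place of real CLIP outputs; the function and variable names are hypothetical.

```python
import math


def normalize(v):
    """Scale a vector to unit length (CLIP-style embeddings are compared after L2 normalization)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_similarity(a, b):
    """Dot product of unit vectors: 1.0 = same direction, near 0.0 = unrelated."""
    a, b = normalize(a), normalize(b)
    return sum(x * y for x, y in zip(a, b))


# Toy 4-dimensional "fingerprints" (hypothetical values; real CLIP embeddings
# have hundreds of dimensions and come from CLIP's text and image encoders).
text_yellow_mug = [0.9, 0.1, 0.0, 0.2]
image_mug_crop = [0.8, 0.2, 0.1, 0.1]
image_plant_crop = [0.0, 0.1, 0.9, 0.3]

print("mug crop:  ", round(cosine_similarity(text_yellow_mug, image_mug_crop), 3))
print("plant crop:", round(cosine_similarity(text_yellow_mug, image_plant_crop), 3))
```

The mug crop scores much higher than the plant crop, which is the sense in which the text and that image “go together.”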

LERF combines these two:

- It adds a “language field” inside the NeRF. Given any 3D point and a physical size (the width of a small box centered on that point), the field outputs a CLIP-like fingerprint that tells you which words match that region.

- It trains this language field using many photos of the scene. The team first takes crops of each image at multiple sizes (small details and bigger context) and precomputes their CLIP fingerprints. During training, the fingerprint LERF renders for a 3D spot and scale is pushed to agree with the crop fingerprints from every view that sees that spot, so it ends up close to their average. This multi-view, multi-scale learning makes the language signals consistent and smooth in 3D.
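The training step above can be sketched for a single ray. This is a minimal, simplified sketch, not LERF's code: it assumes the per-sample embeddings have already been produced by the language field (in LERF they come from a network queried with a 3D position and a physical scale), combines them with standard NeRF compositing weights, and scores the result against a target crop fingerprint using negative cosine similarity, a common choice for unit-length embeddings. All function names and numbers are hypothetical.

```python
import math


def render_weights(densities, deltas):
    """NeRF compositing weights: w_i = T_i * (1 - exp(-sigma_i * delta_i))."""
    weights = []
    transmittance = 1.0  # T_i: fraction of the ray that reaches sample i unblocked
    for sigma, delta in zip(densities, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weights.append(transmittance * alpha)
        transmittance *= 1.0 - alpha
    return weights


def render_embedding(densities, deltas, sample_embeddings):
    """Composite per-sample language embeddings along one ray, then L2-normalize."""
    weights = render_weights(densities, deltas)
    dim = len(sample_embeddings[0])
    summed = [sum(w * e[k] for w, e in zip(weights, sample_embeddings)) for k in range(dim)]
    norm = max(math.sqrt(sum(x * x for x in summed)), 1e-12)  # avoid dividing by zero on empty rays
    return [x / norm for x in summed]


def language_loss(rendered, target):
    """Negative cosine similarity to the target CLIP embedding (lower is better)."""
    t_norm = math.sqrt(sum(x * x for x in target))
    return -sum(r * t for r, t in zip(rendered, target)) / t_norm


# One ray with three samples; only the middle one is dense (a surface).
densities = [0.0, 50.0, 0.0]
deltas = [0.1, 0.1, 0.1]
sample_embeddings = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
target = [0.0, 1.0, 0.0]  # hypothetical CLIP embedding of the crop this ray falls in

rendered = render_embedding(densities, deltas, sample_embeddings)
print(language_loss(rendered, target))
```

Because the surface sample dominates the weights, the rendered embedding matches the target almost perfectly. Across many rays from many views, minimizing this loss is what pulls each 3D spot toward a consensus of its crop fingerprints.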

To make the language maps cleaner, they add DINO:

- DINO: another vision model that groups parts of objects without needing labels. LERF learns DINO features alongside CLIP, which helps reduce patchiness and makes object boundaries look better.
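One way to picture “learning DINO features alongside CLIP” is as an extra regression target: for each ray the field also renders a DINO feature, and its error is added to the training loss, while answering a text query only needs the language output. The sketch below assumes a mean-squared-error term and a hypothetical weight `dino_weight`; it illustrates the idea rather than reproducing LERF's exact objective.

```python
def mse(pred, target):
    """Mean squared error between a rendered feature vector and its target."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)


def total_loss(lang_loss, rendered_dino, target_dino, dino_weight=1.0):
    """Language loss plus a weighted DINO regression term (dino_weight is a hypothetical knob)."""
    return lang_loss + dino_weight * mse(rendered_dino, target_dino)


# Hypothetical values for one ray: the DINO term adds an extra training signal
# that encourages features to follow object structure.
print(total_loss(-0.8, [0.2, 0.4], [0.0, 0.5], dino_weight=0.5))
```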

How LERF answers a text query:

- For a question like “Where is the yellow mug?”, LERF compares the text fingerprint to the 3D language fingerprints everywhere in the scene. It calculates a “relevancy score” for each pixel or point, and shows a heatmap of where the match is strongest.

- It automatically tests different physical sizes and picks the best one for that query, so it can handle both broad cues (“kitchen counter”) and tiny details (“letters on a mug”).
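The relevancy score and scale search can be sketched as follows. The LERF paper scores a query relative to a few generic “canonical” phrases (“object”, “things”, “stuff”, “texture”): for each canonical phrase it takes a pairwise softmax between the query and that phrase, then keeps the minimum, so a region only scores high if it matches the query better than every generic description. This sketch assumes unit-length embeddings, uses hypothetical 2-D toy vectors in place of CLIP outputs, and picks the scale whose most relevant pixel scores highest; that aggregation rule and the function names are assumptions for illustration.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def relevancy(lang_emb, query_emb, canonical_embs):
    """min over canonical phrases c of exp(f.q) / (exp(f.c) + exp(f.q))."""
    q = math.exp(dot(lang_emb, query_emb))
    return min(q / (q + math.exp(dot(lang_emb, c))) for c in canonical_embs)


def best_scale(embeddings_by_scale, query_emb, canonical_embs):
    """embeddings_by_scale maps a physical scale to per-pixel embeddings.

    Returns (scale, relevancy_map) for the scale whose most relevant pixel scores highest.
    """
    best_s, best_map = None, None
    for scale, pixel_embs in embeddings_by_scale.items():
        rel_map = [relevancy(e, query_emb, canonical_embs) for e in pixel_embs]
        if best_map is None or max(rel_map) > max(best_map):
            best_s, best_map = scale, rel_map
    return best_s, best_map


# Toy 2-D unit vectors standing in for CLIP embeddings (hypothetical values).
query = [1.0, 0.0]         # e.g. the text "yellow mug"
canonical = [[0.0, 1.0]]   # a single stand-in for the canonical phrase embeddings
embeddings_by_scale = {
    0.1: [[0.0, 1.0], [0.6, 0.8]],  # small scale: pixels look generic
    0.5: [[1.0, 0.0], [0.8, 0.6]],  # larger scale: first pixel matches the query
}

scale, rel_map = best_scale(embeddings_by_scale, query, canonical)
print(scale, [round(r, 3) for r in rel_map])
```

Scores fall between 0 and 1, and 0.5 means the region matches the query and a canonical phrase equally well. Since only the short text query has to be encoded at query time, new phrases can be tried without retraining.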

Training and usage:

- You can capture a scene with a handheld phone and build LERF in about 45 minutes.

- After that, queries run interactively in real time—no need to retrain for each new word or phrase.

What did they find, and why is it important?

The authors tested LERF on real-world scenes like kitchens, bookstores, and toy setups. They showed that:

- LERF can handle many kinds of words:

  - Visual properties: “yellow”

  - Abstract ideas: “electricity”

  - Specific, rare objects: “Waldo”

  - Reading text in the scene: words printed on mugs or books

- The results are consistent in 3D and more precise than using 2D-only methods, because LERF blends information from many views.

- Compared to popular tools:

  - LERF beat a 3D version of LSeg (a segmentation model) on “long-tail” queries: unusual or specific things not seen in typical datasets.

  - LERF also often outperformed OWL-ViT (a 2D open-vocabulary detector) when localizing objects from text prompts in challenging, in-the-wild scenes.

This matters because it shows we can search and understand 3D spaces using natural language, without needing pre-defined categories or hand-drawn boxes. It opens the door to more flexible, human-friendly ways of interacting with 3D environments.

Limitations in simple terms

- Language quirks: CLIP can act like a “bag of words,” sometimes missing nuances like “not red.” It can also confuse look-alike objects (e.g., “zucchini” may highlight other long green vegetables).

- Scene capture: LERF needs good multi-view photos and accurate camera positions. If the 3D reconstruction is weak, the language field suffers too.

- Scale and context: Some queries need multiple scales at once (like “table” needing both surface and legs), but LERF currently picks one best scale per query.

- Spatial reasoning: It may struggle with complex relationships like “the book to the left of the lamp.”

What’s the impact?

LERF makes 3D scenes searchable with words. That can help:

- Robotics: A robot could find “the wooden spoon for stirring” or “the yellow cable” by asking in natural language, and locate it precisely in 3D.

- Augmented reality and 3D editing: Creators can highlight, group, and edit scene parts using text prompts.

- Research: It helps scientists analyze how vision-language models behave in real 3D environments.

- Future systems: LERF is a general framework, so better language-vision models can plug in and immediately improve 3D text search.

In short, LERF is a step toward computers that understand and respond to our words inside rich, realistic 3D worlds.
