An Overview on Language Models: Recent Developments and Outlook

The paper, authored by Chengwei Wei, Yun-Cheng Wang, Bin Wang, and C.-C. Jay Kuo, provides a thorough review of language models (LMs), exploring their evolution, current state, and future prospects. It contrasts conventional language models (CLMs) with pre-trained language models (PLMs) and examines their linguistic units, architectures, training methods, evaluation techniques, and applications.

Fundamentals of Language Models

Language models are designed to study the probability distributions over sequences of linguistic units, such as words or characters. Historically, CLMs relied either on statistical approaches built from small corpora or on data-driven approaches leveraging larger datasets. These models aim to predict the next linguistic unit in a sequence given its preceding context, functioning in a causal (auto-regressive) manner.
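
The causal view described above corresponds to the standard chain-rule factorization of a sequence probability. The notation is an assumption introduced here for illustration: $u_1, \dots, u_T$ stands for the linguistic units of a sequence of length $T$.

$$
P(u_1, \dots, u_T) = \prod_{t=1}^{T} P(u_t \mid u_1, \dots, u_{t-1})
$$

Each factor is the next-unit prediction a CLM is trained to make, conditioned only on the units that precede it.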

PLMs, on the other hand, extend beyond simple causality. They employ self-supervised learning paradigms and serve as foundational models in modern NLP systems. The strength of PLMs lies in their ability to generalize across diverse downstream tasks, achieved through extensive pre-training on broad linguistic data followed by fine-tuning.

Types of Language Models

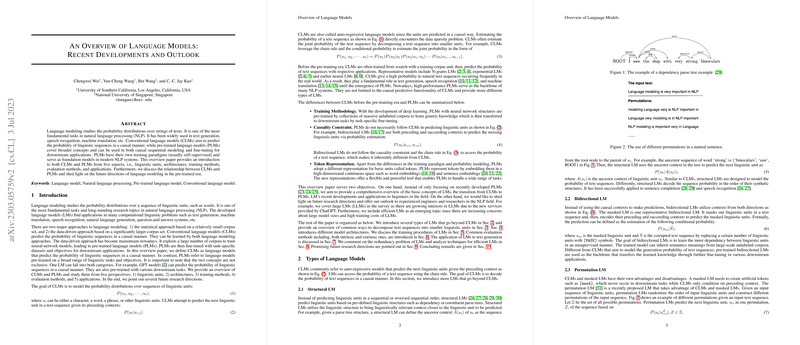

The paper categorizes LMs into several types:

- Conventional LMs (CLMs): Primarily causal and auto-regressive, predicting the probability of the next unit based on prior context.

- Structural LMs: Utilize predefined linguistic structures like dependency or parse trees to bring semantically relevant context closer to the unit being predicted.

- Bidirectional LMs: Utilize contexts from both directions, such as masked language models (MLMs), which predict masked tokens using both preceding and succeeding contexts.

- Permutation LMs: Combine the strengths of CLMs and MLMs by randomly permuting the order in which tokens are predicted, so that prediction stays auto-regressive while the context can include tokens from both sides.
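
One way to make the distinction between these types concrete is to look at which tokens each one may condition on when predicting a target position. The sketch below is illustrative only: the function names, the `[MASK]` placeholder, and the example prediction order are assumptions, not taken from the paper, and structural LMs are omitted because they need a parser.

```python
def causal_context(tokens, i):
    """Context a causal (auto-regressive) LM sees when predicting tokens[i]."""
    return tokens[:i]


def masked_context(tokens, i, mask="[MASK]"):
    """Context a masked LM sees: both sides, with the target position hidden."""
    return tokens[:i] + [mask] + tokens[i + 1:]


def permutation_context(tokens, i, order):
    """Context under a given prediction order: the positions that come
    before position i in `order`, reported as (position, token) pairs."""
    rank = order.index(i)
    visible = sorted(order[:rank])
    return [(p, tokens[p]) for p in visible]


tokens = ["the", "cat", "sat", "down"]
print(causal_context(tokens, 2))                      # ['the', 'cat']
print(masked_context(tokens, 2))                      # ['the', 'cat', '[MASK]', 'down']
print(permutation_context(tokens, 2, [3, 0, 2, 1]))   # [(0, 'the'), (3, 'down')]
```

In the last call the target "sat" is predicted from one token on its left and one on its right, even though prediction still proceeds one token at a time.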

Linguistic Units and Tokenization

Tokenization methods are crucial for decomposing text sequences into manageable linguistic units:

- Characters: Simplify vocabulary but require longer contexts for accurate predictions.

- Words and Subwords: Commonly used but face challenges like Out-Of-Vocabulary (OOV) issues. Subword tokenizers like Byte Pair Encoding (BPE) and WordPiece have been developed to address these challenges.

- Phrases and Sentences: Used in specific applications such as speech recognition and text summarization to maintain semantic coherence.
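
To illustrate how a subword tokenizer such as BPE builds its vocabulary, here is a minimal sketch of the merge-learning loop: start from characters and repeatedly merge the most frequent adjacent pair. The toy word frequencies and the function names are assumptions made for this example; production tokenizers add details (end-of-word markers, byte-level fallback) that are left out here.

```python
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs across all words, weighted by word frequency."""
    pairs = Counter()
    for symbols, freq in vocab.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair, vocab):
    """Replace every occurrence of `pair` with a single merged symbol."""
    merged = {}
    for symbols, freq in vocab.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged


def learn_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merges, starting from single characters."""
    vocab = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # ties go to the pair seen first
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab


# Hypothetical toy corpus statistics (word -> frequency).
word_freqs = {"low": 5, "lower": 2, "newest": 6, "widest": 3}
merges, vocab = learn_bpe(word_freqs, 3)
print(merges)  # [('e', 's'), ('es', 't'), ('l', 'o')]
```

After these merges the frequent suffix "est" is a single unit, so an unseen word such as "lowest" can still be split into known pieces instead of being treated as OOV.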

Architectures of Language Models

The architectures of LMs have evolved significantly:

- N-gram Models: Simplified models that predict the next token based on the preceding N-1 tokens using the Markov assumption.

- Maximum Entropy Models: Utilize feature functions for token prediction but can be computationally intensive.

- Neural Network Models: Include Feed-forward Neural Networks (FNNs) and Recurrent Neural Networks (RNNs), both of which leverage continuous embedding spaces for better context management.

- Transformers: The recent state-of-the-art models, which use attention mechanisms to capture long-range dependencies. Variants include encoder-only, decoder-only, and encoder-decoder designs, chosen according to the task requirements.
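
The simplest architecture in this list, the N-gram model, can be sketched in a few lines for the case N = 2 (a bigram model). This is a minimal illustration using unsmoothed maximum-likelihood counts; the `<s>` and `</s>` boundary markers, the toy corpus, and the function names are assumptions made for the example.

```python
from collections import Counter, defaultdict


def train_bigram(sentences):
    """Count how often each token follows each preceding token."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        tokens = ["<s>"] + sentence.split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def bigram_prob(counts, prev, nxt):
    """Maximum-likelihood estimate of P(nxt | prev); 0.0 for an unseen context."""
    total = sum(counts[prev].values())
    return counts[prev][nxt] / total if total else 0.0


corpus = ["the cat sat", "the cat ran", "the dog sat"]
counts = train_bigram(corpus)
print(bigram_prob(counts, "the", "cat"))  # 2 of the 3 tokens after "the" are "cat"
print(bigram_prob(counts, "cat", "sat"))  # 0.5
```

Under the Markov assumption only the single preceding token matters here, which is why unseen pairs receive probability zero unless some smoothing scheme is added.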

Training Methods for PLMs

PLMs are trained via large-scale self-supervised learning:

- Pre-training: Often involves masked language modeling or next-sentence prediction to learn generalizable language representations.

- Fine-Tuning: Adapts pre-trained models to specific downstream tasks using task-specific datasets. Techniques like adapter tuning and prompt tuning have emerged for more efficient fine-tuning.
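
As a rough illustration of the masked language modeling objective mentioned above, the sketch below shows how a training example might be built: some tokens are replaced by a mask symbol, and the originals become the prediction targets. The 15% masking rate, the `[MASK]` symbol, and the function name are assumptions for this example; the summary does not specify them.

```python
import random


def mask_tokens(tokens, mask_prob=0.15, mask_token="[MASK]", seed=0):
    """Return (inputs, labels) for masked language modeling.

    inputs: tokens with some positions replaced by `mask_token`.
    labels: the original token at masked positions, None elsewhere
            (None marks positions that would be ignored by the loss).
    """
    rng = random.Random(seed)  # fixed seed keeps the example reproducible
    inputs, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(mask_token)
            labels.append(tok)
        else:
            inputs.append(tok)
            labels.append(None)
    return inputs, labels


tokens = "language models learn from large unlabeled text corpora".split()
inputs, labels = mask_tokens(tokens, mask_prob=0.3)
print(inputs)
print(labels)
```

Because the targets come from the text itself, no human annotation is needed, which is what makes this form of pre-training self-supervised.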

Evaluation Methods

Evaluations are categorized into intrinsic and extrinsic methods:

- Intrinsic Evaluation: Metrics like perplexity and pseudo-log-likelihood scores (PLL) are used to measure how well an LM can predict natural text sequences.

- Extrinsic Evaluation: Performance on downstream tasks like the GLUE and SuperGLUE benchmarks provides insights into the practical utility of LMs.
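
Perplexity, the intrinsic metric named above, is the exponential of the average negative log-probability a model assigns to each token of a held-out sequence. A minimal sketch, assuming the per-token probabilities have already been obtained from some model (the input list here is made up):

```python
import math


def perplexity(token_probs):
    """Perplexity of a sequence given the model's probability for each token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_nll)


# A model that assigns probability 0.25 to every token is as uncertain as a
# uniform choice among four options, so its perplexity is about 4.
print(perplexity([0.25, 0.25, 0.25, 0.25]))
```

Lower values mean the model finds the text less surprising; a perplexity of 1 would mean every token was predicted with certainty.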

Applications in Text Generation

The application of LMs in text generation spans various tasks including dialogue systems, automatic speech recognition (ASR), and machine translation. Efficient decoding methods like beam search and sampling-based techniques play vital roles in improving the quality of generated text.
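
To show why beam search can improve on greedy decoding, the sketch below runs both over a tiny hand-written model. Everything model-related is hypothetical: `TOY_TABLE` and `toy_next_probs` stand in for a real LM, and the scoring uses raw summed log-probabilities without length normalization.

```python
import math

# Hypothetical toy model: next-token probabilities depend on the last token only.
TOY_TABLE = {
    "<s>": {"a": 0.6, "b": 0.4},
    "a": {"x": 0.5, "y": 0.5},
    "b": {"z": 0.9, "</s>": 0.1},
    "x": {"</s>": 1.0},
    "y": {"</s>": 1.0},
    "z": {"</s>": 1.0},
}


def toy_next_probs(seq):
    return TOY_TABLE[seq[-1]]


def beam_search(next_probs, start, end, beam_width=2, max_len=5):
    """Return the best (sequence, log-probability) pair found."""
    beams = [([start], 0.0)]  # (token sequence, cumulative log-probability)
    finished = []
    for _ in range(max_len):
        candidates = []
        for seq, score in beams:
            for tok, p in next_probs(seq).items():
                if p > 0:
                    candidates.append((seq + [tok], score + math.log(p)))
        if not candidates:
            break
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for seq, score in candidates[:beam_width]:
            if seq[-1] == end:
                finished.append((seq, score))
            else:
                beams.append((seq, score))
        if not beams:
            break
    finished.extend(beams)  # keep unfinished hypotheses if max_len was reached
    return max(finished, key=lambda c: c[1])


greedy_seq, greedy_score = beam_search(toy_next_probs, "<s>", "</s>", beam_width=1)
beam_seq, beam_score = beam_search(toy_next_probs, "<s>", "</s>", beam_width=2)
print(greedy_seq)  # ['<s>', 'a', 'x', '</s>']
print(beam_seq)    # ['<s>', 'b', 'z', '</s>']
```

With a beam width of 1 the search commits to "a" because it is the likeliest first token, ending with total probability 0.3. Keeping two hypotheses lets it recover the sequence through "b", whose total probability is 0.36.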

Improving Efficiency

Given the increasing complexity and size of modern LMs, the paper highlights the importance of efficient model training and usage. Techniques such as knowledge distillation, pruning, and fast decoding methods are discussed as ways to reduce model size and inference latency with little loss in performance.
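
As one concrete example of these techniques, knowledge distillation trains a small student model to match the output distribution of a large teacher. The sketch below uses a commonly used formulation, KL divergence between temperature-softened distributions scaled by the squared temperature. That specific loss, the temperature value, and the example logits are assumptions for illustration, not details taken from the paper.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """T^2 * KL(teacher || student) on temperature-softened distributions."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


teacher = [4.0, 1.0, 0.5]   # made-up logits over a 3-token vocabulary
student = [2.0, 1.5, 0.5]
print(distillation_loss(student, teacher))  # positive: the distributions differ
print(distillation_loss(teacher, teacher))  # 0.0: the student matches the teacher
```

A higher temperature flattens both distributions, so the student also learns from the relative probabilities the teacher assigns to tokens it considers unlikely.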

Future Directions

The paper outlines several promising research directions:

- Integration of LMs and Knowledge Graphs (KGs): Combining the structured knowledge of KGs with the contextual understanding of LMs can enhance reasoning capabilities.

- Incremental Learning: Developing methods to update LMs with new information without retraining from scratch.

- Lightweight Models: Creating cost-effective and environmentally friendly models.

- Domain-Specific Models: Exploring the benefits of specialized models over universal LMs for specific domains.

- Interpretable Models: Enhancing the transparency and explainability of LMs to avoid issues like hallucination in text generation.

- Detection of Machine-Generated Text: Developing reliable methods to differentiate between human-written and machine-generated content.

In conclusion, the paper comprehensively covers the landscape of language models, providing valuable insights into their development, applications, and future prospects in NLP research.