Scaling Vision Transformers to 22 Billion Parameters: An Overview

The paper "Scaling Vision Transformers to 22 Billion Parameters" presents the development and evaluation of a Vision Transformer (ViT) model with 22 billion parameters, referred to as ViT-22B. This significant increase in the scale of vision models moves closer to the capacities seen in LLMs. The authors address the challenges and propose methods for efficient training and evaluation of such a massive model.

Model Architecture and Innovations

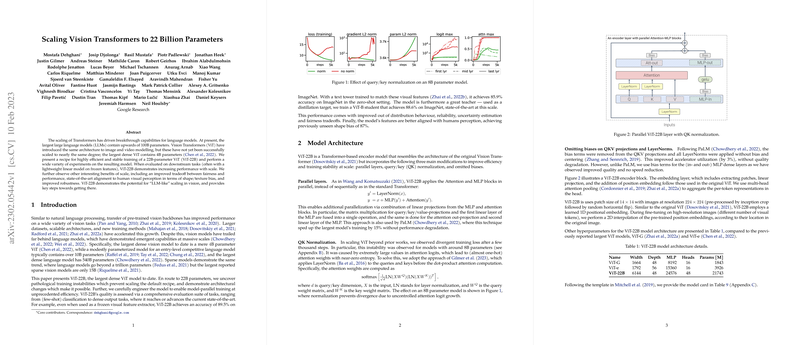

ViT-22B builds upon the standard Transformer architecture with notable modifications aimed at improving efficiency and training stability. Key modifications include:

- Parallel Layers: Inspired by techniques used in LLMs, the attention and MLP blocks are applied in parallel rather than sequentially, optimizing layer processing.

- Query/Key (QK) Normalization: To mitigate training instabilities observed in large-scale models, LayerNorm is applied to queries and keys prior to the attention computation, which stabilizes the attention weights and prevents loss divergence.

- Bias Removal: Biases are removed from the projections to enhance computational efficiency, a technique also utilized in other large-scale models like PaLM.

Training Infrastructure

The paper emphasizes the importance of infrastructure in handling the computational demands of such a large model. ViT-22B uses JAX and FLAX for implementation with a 2D logical chip mesh. Innovations like asynchronous parallel linear operations allow for overlapping communication and computation, leading to high matrix core utilization on custom hardware (TPU v4).

Experimental Evaluation

The authors present extensive benchmarks across several image classification tasks, zero-shot transfer learning, out-of-distribution testing, dense prediction tasks (like semantic segmentation and depth estimation), and video classification. Some key results include:

- ImageNet Performance: The ViT-22B model shows strong performance in linear probing (89.5% accuracy) and matches or surpasses the performance of smaller models, even when those are fully fine-tuned.

- Zero-shot Transfer: Using contrastive learning approaches, the model shows competitive results in zero-shot classification, indicating the versatility of learned representations.

- Robustness and OOD Generalization: ViT-22B demonstrates improved performance on challenging out-of-distribution datasets like ImageNet-C, and robustness to distribution shifts.

Implications and Future Directions

The findings suggest that scaling Vision Transformers to sizes previously reserved for LLMs can yield substantial improvements in performance and robustness, akin to the scaling benefits seen in LLMs. Furthermore, the authors observe favorable trends in fairness metrics and alignment with human perceptual biases, such as an increased shape bias.

Future research could explore the limits of scalability in vision models and their integration into multi-modal systems. The application of these models in real-world scenarios remains contingent on further insights into their robustness, interpretability, and ethical considerations.

Conclusion

This work on ViT-22B represents a significant step in bridging the gap between vision and LLM scaling. By successfully developing and evaluating such a large-scale vision model, the authors provide a foundation for further advancements in the field of computer vision, paving the way for even larger and more holistic AI systems.