Analyzing Leakage of Personally Identifiable Information in Language Models

The paper addresses the leakage of Personally Identifiable Information (PII) from language models (LMs). The authors introduce a rigorous framework for evaluating PII leakage through three attack vectors: extraction, reconstruction, and inference. The problem matters because LMs are routinely fine-tuned on domain-specific datasets that often contain sensitive information. The paper evaluates how well existing mitigations, namely dataset scrubbing and differential privacy, hold up against these attacks, and it identifies remaining vulnerabilities and directions for improvement.

Contributions and Methodology

The authors develop and formalize three types of PII leakage attacks:

- Extraction: The adversary uses only the LM's API to surface PII that the model has memorized from its training data.

- Reconstruction: This attack aims to reconstruct PII by querying the LM with a known prefix and suffix, thereby revealing masked identities.

- Inference: This is a more targeted attack. An adversary who knows some contextual information and holds a set of candidate PII aims to infer which candidate is the correct one.
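The inference attack above can be read as a ranking problem: fill the masked position with each candidate and ask the model which completed sentence it finds most likely. The following is a minimal sketch of that idea, not the authors' implementation. It substitutes a toy add-one-smoothed bigram model for the fine-tuned LM, and the names `train_bigram_counts`, `log_likelihood`, and `infer_pii`, as well as the corpus, are hypothetical.

```python
import math
from collections import Counter

def train_bigram_counts(corpus):
    """Count unigrams and bigrams over whitespace tokens (toy stand-in for an LM)."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in corpus:
        tokens = ["<s>"] + sentence.split()
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams

def log_likelihood(sentence, unigrams, bigrams, vocab_size):
    """Add-one smoothed bigram log-likelihood of a sentence."""
    tokens = ["<s>"] + sentence.split()
    total = 0.0
    for prev, cur in zip(tokens, tokens[1:]):
        total += math.log((bigrams[(prev, cur)] + 1) / (unigrams[prev] + vocab_size))
    return total

def infer_pii(prefix, suffix, candidates, score):
    """Rank candidates by the score of prefix + candidate + suffix, best first."""
    return sorted(candidates, key=lambda c: score(f"{prefix} {c} {suffix}"), reverse=True)

# Hypothetical "training data" that the toy model has memorized.
corpus = [
    "the contract was signed by alice yesterday",
    "the report was written by bob today",
    "the court met yesterday",
]
unigrams, bigrams = train_bigram_counts(corpus)
vocab_size = len(unigrams)

def score(text):
    return log_likelihood(text, unigrams, bigrams, vocab_size)

ranked = infer_pii("the contract was signed by", "yesterday", ["carol", "bob", "alice"], score)
print(ranked)
```

Here the candidate that actually co-occurred with the prefix and suffix in the toy corpus ranks first. A reconstruction attack has no candidate list to start from, so it would first need to produce its own candidates, for instance by sampling from the model; this sketch assumes the candidates are given, as in the inference setting.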

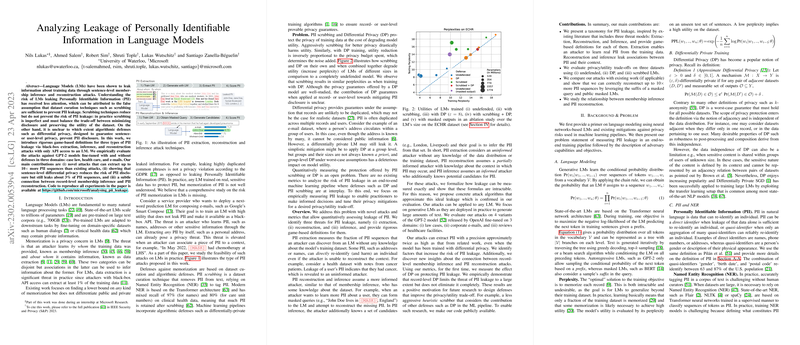

The paper evaluates these attacks on GPT-2 models fine-tuned in three domains (law, healthcare, and corporate emails), covering both undefended models and models defended with differential privacy and scrubbing.
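To make the scrubbing defense concrete, here is a minimal sketch of the general idea: detect PII spans in the training text and replace them with placeholder tokens before fine-tuning. It uses two hand-written regular expressions purely for illustration. The `PII_PATTERNS` table and the `scrub` function are hypothetical, and a realistic pipeline would more likely use a named-entity tagger than regexes.

```python
import re

# Hypothetical patterns for illustration only; they cover emails and
# phone-like digit runs, and nothing else.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def scrub(text):
    """Replace every match of each pattern with a [LABEL] placeholder."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

scrubbed = scrub("Alice Smith: jane.doe@example.com or 555-123-4567.")
print(scrubbed)
```

In this example the email address and phone number are masked, but the name "Alice Smith" passes through because no pattern covers it. That gap illustrates why a scrubbed dataset can still carry PII: whatever the detector misses stays in the training data.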

Key Findings

- Enhanced Attack Efficacy: The paper presents novel attacks that can extract up to 10 times more PII sequences than existing attacks. In particular, the attacks are more sensitive to PII that is exposed redundantly, and they extract a substantial amount of PII from models that lack adequate defenses.

- Partial Mitigation via Differential Privacy: Differential privacy provides some protection but is not fully effective, as approximately 3% of PII sequences still leak. This calls for further refinement in how it is applied, particularly in data-rich and sensitive contexts.

- Interplay Between Membership Inference and PII Reconstruction: The research establishes a nuanced relationship between membership inference capabilities and PII reconstruction success, reinforcing the argument that leakage concerns extend beyond mere membership guessing.
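Figures such as "10 times more PII sequences" or "about 3% of PII sequences" presuppose a way of scoring an extraction attack against the known training data. One plausible scoring, sketched below as an assumption and not as the paper's exact definition, compares the set of PII strings an attack produced with the set of PII strings that occur in the training set. The function name `extraction_metrics` and the example names are hypothetical.

```python
def extraction_metrics(extracted, training_pii):
    """Return (precision, recall) of extracted PII against training-set PII.

    precision: fraction of extracted strings that really occur in training data
    recall:    fraction of training-set PII strings that the attack recovered
    """
    extracted, training_pii = set(extracted), set(training_pii)
    hits = extracted & training_pii
    precision = len(hits) / len(extracted) if extracted else 0.0
    recall = len(hits) / len(training_pii) if training_pii else 0.0
    return precision, recall

# Hypothetical data: four PII strings in training, two strings produced by an attack.
training_pii = {"Alice Smith", "Bob Jones", "Carol White", "Dan Brown"}
extracted = ["Alice Smith", "Eve Black"]
precision, recall = extraction_metrics(extracted, training_pii)
print(precision, recall)
```

Under this scoring, a stronger attack raises recall (more training PII recovered), while a defense is effective to the extent that it pushes recall toward zero.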

Practical and Theoretical Implications

The paper provides actionable insights for deploying LMs in contexts with acute privacy concerns. The findings suggest that scrubbing and differential privacy reduce risk but do not eliminate PII leakage. Practitioners and researchers are encouraged to tune these defenses for a balanced trade-off between privacy and utility, for example by combining more intelligent scrubbing strategies with advanced privacy-preserving mechanisms.
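The privacy and utility trade-off mentioned above is easiest to see in the mechanics of differentially private training. The summary does not say which algorithm the paper uses, so the following is a sketch of one widely used approach, DP-SGD, under that assumption: each example's gradient is clipped to a maximum norm, the clipped gradients are summed, and Gaussian noise scaled to the clipping norm is added before the update. The function names and parameters are illustrative, and no privacy accounting is performed here.

```python
import math
import random

def clip(grad, max_norm):
    """Scale a gradient vector down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [g * scale for g in grad]

def dp_sgd_step(weights, per_example_grads, lr, max_norm, noise_multiplier, rng):
    """One DP-SGD-style update: clip per example, sum, add noise, average, step."""
    dim = len(weights)
    clipped = [clip(g, max_norm) for g in per_example_grads]
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    # Gaussian noise with standard deviation noise_multiplier * max_norm per coordinate.
    noisy = [s + rng.gauss(0.0, noise_multiplier * max_norm) for s in summed]
    batch_size = len(per_example_grads)
    return [w - lr * n / batch_size for w, n in zip(weights, noisy)]

rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4]]  # hypothetical per-example gradients
new_weights = dp_sgd_step([0.0, 0.0], grads, lr=1.0, max_norm=1.0,
                          noise_multiplier=1.0, rng=rng)
print(new_weights)
```

Raising `noise_multiplier` or lowering `max_norm` limits how much any single training example can influence the weights, which is what protects individual records, but it also degrades the gradient signal and therefore model utility.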

Future Directions

Future research could develop models that are explicitly optimized for privacy preservation without compromising performance. This involves designing models that disentangle the memorization of sensitive attributes from general language generation ability. Making differential privacy implementations more robust on real-world datasets with high redundancy and diverse contexts also remains a priority.

In summary, this paper highlights the inherent vulnerabilities and limitations of current defenses against PII leakage in LMs. It underscores the necessity for ongoing research and development of holistic and adaptive privacy-preserving strategies in machine learning pipelines. As LMs find greater utility in sensitive domains, these efforts will become increasingly crucial to safeguard individual privacy.