Abstract World Model in Reinforcement Learning

Reinforcement Learning (RL) has advanced considerably, but efficiently integrating prior knowledge into the learning process remains a challenge. One promising way to improve exploration efficiency is to use pretrained large language models (LLMs) as a source of guidance about how the environment works. This amounts to transferring knowledge from a pretrained LLM to an RL agent, giving the agent a kind of intuition about its environment.

LLMs and Reinforcement Learning

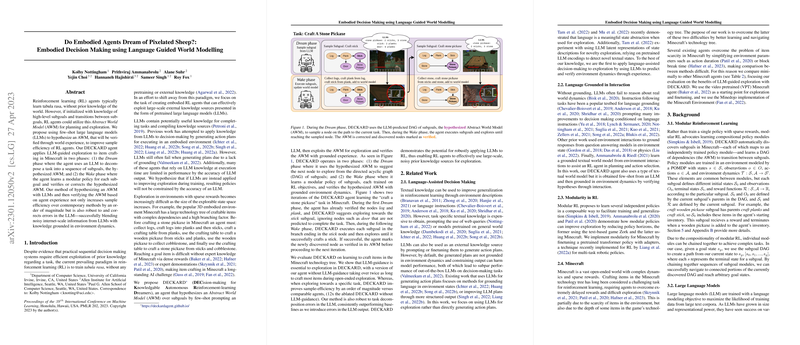

Drawing on the broad knowledge captured by LLMs, the research introduces an embodied agent called DECKARD (DECision-making for Knowledgable Autonomous Reinforcement-learning Dreamers), which uses language-guided world modelling. The method begins by prompting an LLM to construct an Abstract World Model (AWM): a hypothesized model of the environment's subgoals and the transitions between them. In essence, DECKARD first 'dreams up' a plan of action, then 'wakes up' to execute that plan and refine it through actual interaction with the environment.

This results in a two-phase process:

- The Dream Phase: DECKARD predicts a sequence of subgoals, creating the AWM using few-shot prompting with LLMs.

- The Wake Phase: The agent acts to achieve these subgoals, adjusting its policy based on environmental feedback and verifying or correcting the hypothesized AWM as it goes.
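The Dream Phase described above can be pictured as building a small dependency graph and reading a subgoal sequence off it. The following is only a minimal sketch under stated assumptions, not DECKARD's actual implementation: the `AbstractWorldModel` class, the `dream` function, and `toy_llm` (a hard-coded stand-in for a few-shot-prompted LLM) are all hypothetical names, and the item dependencies are illustrative toy data.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Set


@dataclass
class AbstractWorldModel:
    """Hypothesized subgoal graph: prerequisites[x] holds the subgoals needed before x."""
    prerequisites: Dict[str, Set[str]] = field(default_factory=dict)

    def add_hypothesis(self, subgoal: str, needs: List[str]) -> None:
        # Record the hypothesized dependencies and make sure each one is a node too.
        self.prerequisites.setdefault(subgoal, set()).update(needs)
        for dep in needs:
            self.prerequisites.setdefault(dep, set())

    def plan(self, goal: str) -> List[str]:
        """Order subgoals so each prerequisite precedes its dependent (assumes no cycles)."""
        order: List[str] = []
        seen: Set[str] = set()

        def visit(node: str) -> None:
            if node in seen:
                return
            seen.add(node)
            for dep in sorted(self.prerequisites.get(node, ())):
                visit(dep)
            order.append(node)  # appended only after all of its prerequisites

        visit(goal)
        return order


def toy_llm(subgoal: str) -> List[str]:
    """Hypothetical stand-in for an LLM query; the answers are hard-coded toy data."""
    answers = {
        "wooden_pickaxe": ["planks", "stick"],
        "stick": ["planks"],
        "planks": ["log"],
        "log": [],
    }
    return answers.get(subgoal, [])


def dream(goal: str, llm: Callable[[str], List[str]]) -> AbstractWorldModel:
    """Expand the goal backwards, asking the (stand-in) LLM what each subgoal needs."""
    awm = AbstractWorldModel()
    frontier, expanded = [goal], set()
    while frontier:
        subgoal = frontier.pop()
        if subgoal in expanded:
            continue
        expanded.add(subgoal)
        needs = llm(subgoal)
        awm.add_hypothesis(subgoal, needs)
        frontier.extend(needs)
    return awm


awm = dream("wooden_pickaxe", toy_llm)
subgoal_sequence = awm.plan("wooden_pickaxe")
print(subgoal_sequence)  # ['log', 'planks', 'stick', 'wooden_pickaxe']
```

In this sketch the graph is only a hypothesis: nothing in it has been checked against the environment yet, which is the job of the Wake Phase.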

Exploration and Learning Efficiency

This approach makes the agent's learning more efficient. Equipped with a preliminary map of potential objectives and their dependencies, the agent can head toward specific goals with purpose and foresight, which reduces unnecessary exploration. In experiments in Minecraft, a complex game environment, LLM-guided DECKARD showed an order-of-magnitude improvement in sample efficiency on certain tasks compared to counterparts without such guidance.

Robustness Against Imperfect Knowledge

A critical aspect of this system is its resilience to inaccurate or incomplete information from the LLM. By continuously grounding the language-based guidance in its own experience of the environment, the agent corrects and refines its world model. DECKARD therefore remains effective even when the LLM's initial guidance contains errors or omissions, which shows that a "noisy" knowledge source can still support practical decision-making.
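One way to picture this self-correction is as a reconciliation step: after the agent achieves a subgoal, the prerequisites it actually observed replace the ones the LLM hypothesized. The sketch below is an assumption-laden illustration, not the method from the research itself; the function name `wake_update`, the dictionary representation of the AWM, and the example items are all hypothetical.

```python
from typing import Dict, Iterable, Set, Tuple


def wake_update(
    hypothesized: Dict[str, Set[str]],
    subgoal: str,
    observed_prerequisites: Iterable[str],
) -> Tuple[Dict[str, Set[str]], Set[str], Set[str]]:
    """Replace the hypothesized prerequisites of `subgoal` with the observed ones.

    Returns the corrected model plus the prerequisites that were dropped
    (LLM errors) and the ones that were added (LLM omissions).
    """
    predicted = hypothesized.get(subgoal, set())
    observed = set(observed_prerequisites)

    # Copy so the original hypothesis is left untouched.
    corrected = {node: set(deps) for node, deps in hypothesized.items()}
    corrected[subgoal] = observed
    for dep in observed:
        corrected.setdefault(dep, set())  # newly discovered subgoals become nodes

    dropped = predicted - observed
    added = observed - predicted
    return corrected, dropped, added


# Toy data: the hypothesis wrongly lists "planks" and omits "stick".
hypothesis = {"stone_pickaxe": {"cobblestone", "planks"}, "cobblestone": set(), "planks": set()}
corrected, dropped, added = wake_update(hypothesis, "stone_pickaxe", ["cobblestone", "stick"])
print(dropped, added)  # {'planks'} {'stick'}
```

Overwriting the hypothesis with observations is the simplest possible policy; a fuller treatment might track which edges are verified and which are still unconfirmed.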

Conclusion

The research is a step toward more knowledgeable and efficient embodied agents. By combining the broad but imprecise knowledge of LLMs with the grounded, experiential learning of RL, DECKARD offers a promising model for building more autonomous and capable AI systems that start with a preconceived yet adaptable understanding of their operating domains. The framework holds potential not only for game environments like Minecraft but also for real-world applications where existing knowledge can be harnessed to improve decision-making.