Emergent Analogical Reasoning in LLMs

The paper "Emergent Analogical Reasoning in LLMs" investigates the capacity of LLMs, specifically the GPT-3 model (text-davinci-003 variant), to perform zero-shot analogical reasoning. A primary goal of this paper is to discern whether human-like cognitive capabilities, such as analogical reasoning, emerge in LLMs with sufficient training data. The research also evaluates the model in a comparative setting against human participants to ascertain its efficacy.

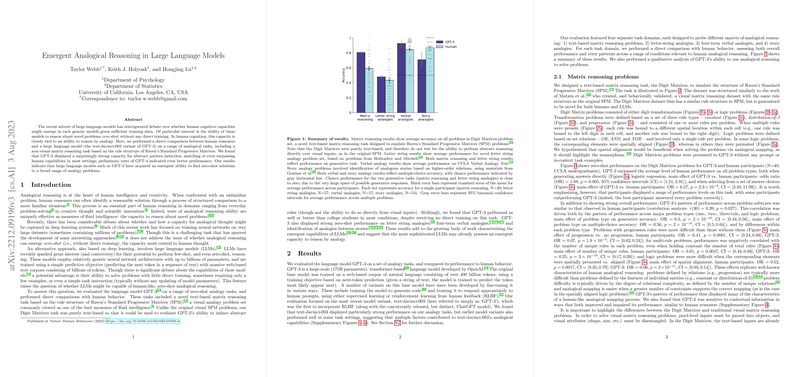

Summary of Findings

- Zero-Shot Reasoning and Analogies: The paper identifies that GPT-3 can solve analogy tasks without direct training on these tasks, operating in a zero-shot capacity. In many cases, its performance matched or outperformed human participants, suggesting an emergent ability for abstract pattern induction within LLMs.

- Task Domains and Results: The evaluation encompassed several analogy problem types:

- Digit Matrices: A text-based task inspired by Raven's Progressive Matrices. Results saw GPT-3 surpass human performance in generating and selecting analog responses.

- Letter String Analogies: These require flexible generalization abilities akin to human re-representation processes. GPT-3 displayed strong performance and a marked ability to handle tasks with increasing relational complexity.

- Four-Term Verbal Analogies: GPT-3 outperformed average humans in datasets spanning various relational categories, exhibiting sensitivity to semantic content similar to humans.

- Story Analogies: The model demonstrated an ability to discern higher-order relational analogies in narrative texts, though still somewhat exceeded by human performance, particularly in cross-domain analogies.

- Analogical Problem Solving: The research incorporated qualitative assessments using Duncker's radiation problem to demonstrate GPT-3's ability to apply analogical reasoning in problem-solving contexts. With prompting, GPT-3 successfully utilized analogical stories to propose solutions, albeit with limitations in non-textual, physical reasoning domains.

Implications and Future Directions

The emergence of zero-shot analogical reasoning in LLMs carries substantial theoretical and practical implications. Theoretically, it challenges long-held assumptions about the cognitive mechanisms necessary for abstract reasoning, traditionally believed to be outside the field of neural networks lacking explicit structural organization. Practically, these findings suggest potential applications in areas necessitating flexible reasoning and pattern recognition.

Despite these advancements, the limitations of LLMs in capturing the full spectrum of human analogical reasoning are evident. Areas such as long-term memory retrieval, integration of multimodal inputs, and physical world model understanding remain nascent challenges. Further advancements may involve:

- Enhancing model architectures to incorporate episodic memory-like mechanisms.

- Integrating visual and physical reasoning capabilities to enable more holistic problem-solving aptitudes.

The paper opens avenues for exploring how LLMs' inherent architectural features—self-attention, large-scale parameterization—might align with cognitive reasoning frameworks. Furthermore, it prompts inquiry into whether these emergent capabilities could aid in bridging the gap between human and artificial cognitive systems.

This research underscores a critical pivot in understanding AI's reasoning faculties, depicting a nuanced landscape where LLMs demonstrate capacities previously ascribed predominantly to human intelligence. However, discerning the qualitative aspects of reasoning and its application in broader cognitive contexts remains an ongoing pursuit.