Image Re-Identification Using Vision-Language Models: An Analysis of CLIP-ReID

The paper "CLIP-ReID: Exploiting Vision-Language Model for Image Re-Identification without Concrete Text Labels" applies vision-language models, specifically CLIP, to fine-grained image re-identification (ReID), a task in which labels are identity indexes with no explicit text descriptions. The aim is to improve ReID performance by making fuller use of CLIP's cross-modal capabilities, and the paper reports competitive results across several ReID benchmarks.

Key Contributions and Methodology

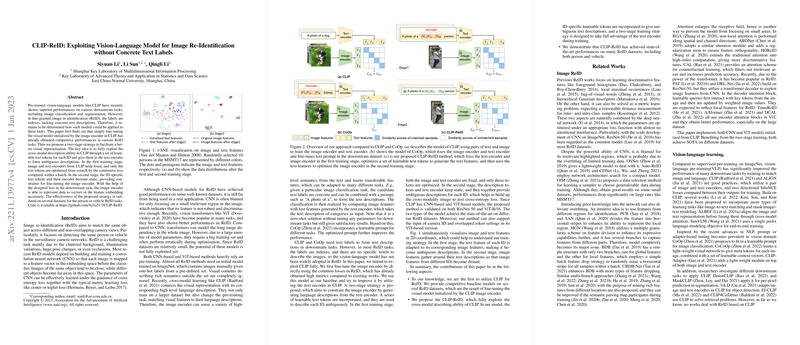

The paper first establishes a ReID baseline by fine-tuning the visual model initialized from CLIP's image encoder; this baseline alone already yields competitive ReID results. Building on it, the authors propose a two-stage training strategy designed to exploit CLIP's cross-modal capabilities more fully.

- Two-Stage Training Strategy:

  - Stage One: This phase trains only the textual side of the model. CLIP's image and text encoders are kept frozen while a set of learnable text tokens is optimized for each identity (ID); passed through the text encoder, these tokens yield an ambiguous text description of that ID. Training is guided by a contrastive loss computed over the samples in each batch.

  - Stage Two: The image encoder is fine-tuned. The ID-specific text tokens learned in stage one, and the text features computed from them, are kept fixed and act as a constraint that steers the image encoder toward better feature representations through the downstream loss.

- Exploitation of CLIP's Cross-Modal Attributes: Rather than relying on hand-written captions, the method learns an ambiguous text description for each identity through CLIP's text encoder, which makes cross-modal supervision usable in settings where text labels are unavailable. In doing so, it bridges the gap between vision-language pre-training and ReID, a task that is otherwise purely image-based.
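As a rough illustration of the stage-one objective described above, the following sketch computes a symmetric image-text contrastive loss over one batch in plain Python. It is not the authors' implementation: the function names, the temperature value of 0.07, and the choice to treat every same-identity image in the batch as a positive in the text-to-image direction are all assumptions made for this example. The feature vectors stand in for the outputs of the frozen encoders; in actual training, only the learnable text tokens that produce `text_feats` would receive gradients.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


def mean_log_prob(logits, positive_idx):
    """Mean log-softmax value over the positive entries of one row of logits."""
    m = max(logits)  # subtract the max for numerical stability
    log_denom = m + math.log(sum(math.exp(x - m) for x in logits))
    return sum(logits[j] - log_denom for j in positive_idx) / len(positive_idx)


def stage_one_loss(image_feats, text_feats, ids, temperature=0.07):
    """Symmetric contrastive loss over a batch (hypothetical helper).

    image_feats[i]: feature of image i from the frozen image encoder
    text_feats[i]:  feature of the learnable tokens for ids[i],
                    passed through the frozen text encoder
    ids[i]:         identity label of sample i
    """
    img = [normalize(v) for v in image_feats]
    txt = [normalize(v) for v in text_feats]
    n = len(ids)
    image_to_text = 0.0
    text_to_image = 0.0
    for i in range(n):
        # Image -> text: the text paired with sample i is the positive.
        logits = [dot(img[i], txt[j]) / temperature for j in range(n)]
        image_to_text -= mean_log_prob(logits, [i])
        # Text -> image: every image with the same identity is a positive
        # (an assumption of this sketch).
        logits = [dot(txt[i], img[j]) / temperature for j in range(n)]
        positives = [j for j in range(n) if ids[j] == ids[i]]
        text_to_image -= mean_log_prob(logits, positives)
    return (image_to_text + text_to_image) / n
```

When the text feature for each identity points in the same direction as that identity's image features, the loss approaches zero; when the pairing is swapped, it grows large. This is the signal that would pull the learnable tokens toward identity-specific descriptions.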

Experimental Results

The method was validated on person ReID datasets (MSMT17, Market-1501, DukeMTMC-reID, Occluded-Duke) and vehicle ReID datasets (VeRi-776, VehicleID), with reported state-of-the-art (SOTA) performance:

- On the challenging MSMT17 dataset, the approach surpassed existing methods with 63.0% mean Average Precision (mAP) and 84.4% Rank-1 accuracy using a CNN backbone. A ViT backbone raised these figures to 73.4% mAP and 88.7% Rank-1, a gain of roughly 10 mAP points that shows how much the method benefits from a vision transformer.

- On the vehicle ReID datasets (VeRi-776 and VehicleID), the method also performed strongly, indicating that it is not specific to person ReID.
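For readers unfamiliar with the two metrics quoted above, the sketch below shows how Rank-1 accuracy and mAP are typically computed from ranked retrieval results. It is a simplified illustration rather than the evaluation code used in the paper: the function names are hypothetical, each query is assumed to come with its gallery already sorted by similarity, and the camera-based filtering that standard ReID protocols usually apply is omitted.

```python
def average_precision(ranked_matches):
    """Average precision for one query.

    ranked_matches[k] is True when the gallery image at rank k + 1
    shares the query's identity.
    """
    hits = 0
    precision_sum = 0.0
    for rank, is_match in enumerate(ranked_matches, start=1):
        if is_match:
            hits += 1
            precision_sum += hits / rank  # precision at this recall point
    return precision_sum / hits if hits else 0.0


def evaluate(all_ranked_matches):
    """Return (mAP, Rank-1) over all queries that have at least one match."""
    valid = [r for r in all_ranked_matches if any(r)]
    mean_ap = sum(average_precision(r) for r in valid) / len(valid)
    rank1 = sum(1 for r in valid if r[0]) / len(valid)
    return mean_ap, rank1


# Two toy queries, each ranked against a three-image gallery.
queries = [
    [True, False, True],   # correct match at ranks 1 and 3
    [False, True, False],  # correct match only at rank 2
]
mean_ap, rank1 = evaluate(queries)
```

Rank-1 only asks whether the top result is correct, whereas mAP rewards placing every correct match near the top of the ranking. This is why mAP is usually the lower of the two numbers, as in the MSMT17 figures above.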

Implications and Future Directions

The research extends vision-language models into a setting historically dominated by single-modal approaches, showing that models like CLIP are useful beyond classification and segmentation. It suggests that learned cross-modal descriptions can improve image-centric tasks, which points to broader generalization and transferability for such models.

Moreover, the findings open avenues for further exploration in domains where data labels are scarce or abstract. Future work could refine the semantic abstraction layers, improve computational efficiency, and extend cross-modal learning to real-time systems in surveillance and autonomous navigation. The potential gains in robustness and adaptability make this a promising direction for continued research.

In summary, CLIP-ReID is a meaningful step in applying vision-language models to fine-grained ReID tasks: it achieves competitive results without requiring concrete text labels and has implications for cross-modal learning more broadly.