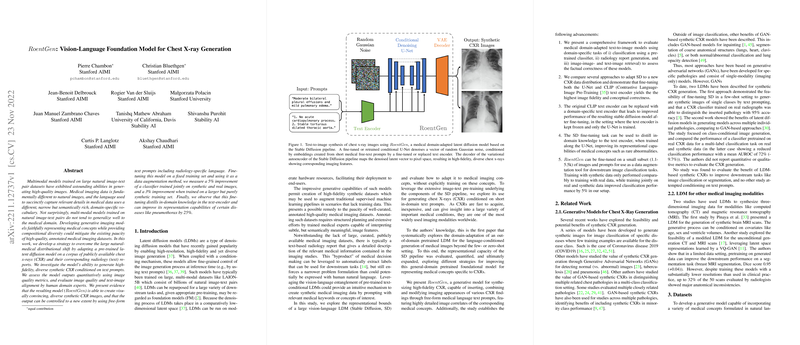

This paper introduces a vision-language foundation model adapted for chest X-ray (CXR) generation using a domain-adaptation strategy on a pre-trained latent diffusion model. The model is trained on a corpus of publicly available CXR images and their corresponding radiology reports. The paper evaluates the model's ability to generate high-fidelity, diverse synthetic CXR images conditioned on text prompts, and assesses the outputs quantitatively using image quality metrics and qualitatively via human domain experts.

The central hypothesis is that adapting a pre-trained latent diffusion model on a corpus of CXR images and their corresponding radiology reports can overcome the distributional shift between natural and medical images, and that the resulting model can generate high-fidelity, diverse synthetic CXR images controllable via free-form text prompts including radiology-specific language.

Key results and contributions include:

- A framework to evaluate medical domain-adapted text-to-image models using domain-specific tasks such as classification, radiology report generation, and image-image and text-image retrieval.

- A comparison of approaches to adapt Stable Diffusion (SD) to a new CXR data distribution. Fine-tuning both the U-Net and CLIP (Contrastive Language-Image Pre-Training) text encoder yields the highest image fidelity and conceptual correctness.

- Evidence that the original CLIP text encoder can be replaced with a domain-specific text encoder, which improves the performance of the resulting Stable Diffusion model after fine-tuning, particularly when the text encoder is kept frozen and only the U-Net is trained.

- Demonstration that the SD fine-tuning task can distill in-domain knowledge into the text encoder when it is trained along with the U-Net, improving its representation of medical concepts such as rare abnormalities. For example, in the specific case of pneumothorax, the model's knowledge is fully recovered or even improved when using a higher learning rate of 1e-4.

- Evidence that the model can be fine-tuned on a small subset (1.1-5.5k) of images and prompts for use as a data augmentation tool for downstream image classification tasks. Training with synthetic data only performed comparably to training with real data, while training jointly on real and synthetic data improved classification performance by 5% in the experimental setup. Furthermore, training on a larger, purely synthetic training set yielded a 3% improvement.

The authors systematically explore the domain adaptation of an out-of-domain pretrained latent diffusion model (LDM) for language-conditioned generation of medical images beyond the few- or zero-shot setting. They evaluate, quantify, and expand the representational capacity of the SD pipeline, exploring different strategies for improving this general-domain pretrained foundation model at representing medical concepts specific to CXRs.

Generative Models for Chest X-Ray Generation

Previous approaches have been based on generative adversarial networks (GANs), were developed for specific pathologies, and consist of single-modality (imaging-only) models. Two LDMs have been described for synthetic CXR generation. One approach demonstrated the feasibility of fine-tuning SD in a few-shot setting to generate synthetic images of single classes by text prompting, and showed that a CXR classifier trained on real radiographs was able to distinguish the inserted pathology with 95% accuracy. The other work showed the benefits of latent diffusion models in generating images across multiple individual pathologies, compared to GAN-based approaches. That paper focused on class-conditional image generation, and compared the performance of a classifier pretrained on real CXR data for a multi-label classification task on real and synthetic data (in the latter case showing a reduced classification performance with a mean AUROC of 72% (-9.7%)). No quantitative or qualitative metrics were reported to evaluate the CXR generation.

No paper was found to evaluate the benefit of LDM-based synthetic CXRs to improve downstream tasks like image classification or segmentation, and no other paper attempted conditioning on text prompts.

Methods

The paper leverages the publicly available MIMIC-CXR dataset, which contains 377,110 images and associated radiology reports. The dataset was filtered to keep only reports whose impression section is shorter than 77 tokens. It was split into two training sets, "PA train" (consisting exclusively of PA views) and "PA/AP/LAT train" (all views), and two test sets, "P19 test" (PA views) and "MIMIC test", using the official MIMIC split. The number of "No finding" reports was capped in each split to limit the imbalance of the dataset.
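The filtering described above can be sketched as follows. This is a minimal illustration, not the authors' preprocessing code: the whitespace tokenizer, the `max_no_finding` cap value, and the `impression`/`label` field names are all assumptions made for the example (the actual tokenizer and cap are not stated here).

```python
MAX_TOKENS = 77  # token limit stated in the text


def filter_reports(reports, tokenize=str.split, max_no_finding=1000,
                   max_tokens=MAX_TOKENS):
    """Keep reports with short impressions and cap the 'No finding' ones.

    `tokenize` defaults to whitespace splitting, which is only a stand-in
    for whatever tokenizer the text encoder actually uses (assumption).
    `max_no_finding` is a hypothetical cap; the real value is not given.
    """
    kept = []
    no_finding_count = 0
    for report in reports:
        # Drop impressions that are not strictly shorter than the limit.
        if len(tokenize(report["impression"])) >= max_tokens:
            continue
        # Cap the over-represented 'No finding' class.
        if report["label"] == "No finding":
            if no_finding_count >= max_no_finding:
                continue
            no_finding_count += 1
        kept.append(report)
    return kept
```

Passing the tokenizer as a parameter keeps the length check tied to the text encoder in use, since token counts differ between tokenizers.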

Stable Diffusion Fine-Tuning

Stable Diffusion is a pipeline of models with three main components: the variational autoencoder (VAE), a conditional denoising U-Net, and a conditioning mechanism, such as a CLIP text encoder. The architecture was not modified except for disabling the built-in "safety checker".

Previous work investigated several approaches to fine-tune the SD pipeline for CXR generation in a few-shot setting. These include "Textual Inversion" (introducing new tokens), "Textual Projection" (replacing the CLIP text encoder with a domain-specific text encoder), and "DreamBooth" (unfreezing the U-Net).

In this work, the potential of SD to be fine-tuned or retrained on medical domain-specific images and prompts was explored, leveraging a large, radiology image-text dataset. For a set of images and prompts, the VAE, the text encoder, and the U-Net were leveraged, and an MSE loss was computed to train the different components of the SD pipeline.

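For each text-image pair $(x_{text}, y_{pixel})$, random Gaussian noise $N$ is sampled in the latent space of dimensions $h \times w$:

$N \sim \mathcal{N}(0_{h \times w}, I_{h \times w})$

where:

- $N$ is random Gaussian noise

- $h$ is the height of the latent space

- $w$ is the width of the latent space

- $\mathcal{N}$ is the normal distribution

- $0_{h \times w}$ is a matrix of zeros with dimensions $h \times w$

- $I_{h \times w}$ is the identity matrix with dimensions $h \times w$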

Using the text encoder and the VAE, both the prompt and the corresponding image are encoded, and sampled noise is added to the latent representation of the latter for a random number of timesteps $t$. The U-Net processes this noisy latent representation along with the encoded conditioning prompt $\mathit{Enc}_{text}(x_{text})$ to predict the original sampled noise $N$:

$\hat{N} = \mathit{Unet}(\mathit{Enc}_{text}(x_{text}), \mathit{VAE}(y_{pixel}) \oplus_t N, t)$

where:

- $\hat{N}$ is the predicted noise

- $\mathit{Unet}$ is the U-Net model

- $\mathit{Enc}_{text}$ is the text encoder model

- $x_{text}$ is the input text prompt

- $\mathit{VAE}$ is the variational autoencoder

- $y_{pixel}$ is the input image in pixel space

- $N$ is the sampled noise

- $t$ is the timestep

An MSE loss is computed between the true and predicted noises $N$ and $\hat{N}$, which provides the gradients used to improve the generation capabilities of the combined VAE, text encoder and U-Net:

$L_{MSE} = \frac{1}{h w} \sum_{i=1}^{h} \sum_{j=1}^{w} (\hat{N}_{ij} - N_{ij})^2$

where:

- $L_{MSE}$ is the MSE loss

- $h$ is the height of the latent space

- $w$ is the width of the latent space

- $\hat{N}_{ij}$ is the predicted noise at pixel $(i, j)$

- $N_{ij}$ is the true noise at pixel $(i, j)$
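The noise sampling and the loss described above can be illustrated with a small, dependency-free sketch. It is only a toy under stated assumptions: the latent grid is a tiny 4x4 list of lists, channels are ignored, and a shifted copy of the noise stands in for the U-Net prediction; `sample_noise` and `mse_loss` are illustrative names, not functions from the paper's code.

```python
import random


def sample_noise(h, w, rng):
    """Draw an h x w grid of independent standard-normal values."""
    return [[rng.gauss(0.0, 1.0) for _ in range(w)] for _ in range(h)]


def mse_loss(predicted, true):
    """Mean squared error between predicted and true noise over all positions."""
    h, w = len(true), len(true[0])
    total = sum(
        (predicted[i][j] - true[i][j]) ** 2
        for i in range(h)
        for j in range(w)
    )
    return total / (h * w)


rng = random.Random(0)           # fixed seed so the example is reproducible
noise = sample_noise(4, 4, rng)  # toy latent size (assumption)

# A perfect prediction gives zero loss; a constant offset of 0.5 gives
# a loss of about 0.5 ** 2 = 0.25 regardless of the sampled values.
shifted = [[value + 0.5 for value in row] for row in noise]
perfect_loss = mse_loss(noise, noise)
offset_loss = mse_loss(shifted, noise)
```

In actual training the prediction would come from the U-Net applied to the noised latent, and the loss would be backpropagated through whichever components are unfrozen.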

The VAE component was kept frozen, and the experimental effort focused on exploring the U-Net component (fine-tuning or retraining from scratch) and the text encoder (frozen, unfrozen and trained jointly with the U-Net, or replaced with a domain-specific text encoder).

Experiments were conducted on 64 A100 GPUs. Models were mostly trained in bf16 precision. At an image resolution of 512x512 px, models were trained with a batch size of 256. Model weights for the SD pipeline (version 1.4) were obtained from the repository "CompVis/stable-diffusion-v1-4". The code implementation was built on both the diffusers library and the ViLMedic library. Two domain-specific text encoders were used: RadBERT and SapBERT. In the experiments, guidance scale 4 and 75 inference steps with a PNDM noise scheduler enabled the generation of synthetic images properly conditioned on the associated prompts.
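The inference settings reported above (guidance scale 4, 75 steps, PNDM scheduler, safety checker disabled) could be expressed with the diffusers library roughly as follows. This is a hedged sketch rather than the authors' code: it loads the original "CompVis/stable-diffusion-v1-4" weights, not the fine-tuned CXR weights, and `GENERATION_CONFIG`, `generate_cxr`, and the `num_images` default are names and values chosen for this example.

```python
GENERATION_CONFIG = {
    "model_id": "CompVis/stable-diffusion-v1-4",  # base weights named in the text
    "guidance_scale": 4.0,                        # value reported in the text
    "num_inference_steps": 75,                    # value reported in the text
}


def generate_cxr(prompt, num_images=4):
    """Generate images for one prompt with the reported sampling settings.

    Loads the base SD v1.4 weights (assumption: a fine-tuned checkpoint
    would be substituted for `model_id` in practice).
    """
    # Imported lazily so the settings above can be inspected without
    # having diffusers installed.
    from diffusers import PNDMScheduler, StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(
        GENERATION_CONFIG["model_id"],
        safety_checker=None,  # the text states the safety checker was disabled
    )
    # Make the PNDM scheduler explicit, reusing the pipeline's scheduler config.
    pipe.scheduler = PNDMScheduler.from_config(pipe.scheduler.config)

    result = pipe(
        prompt,
        guidance_scale=GENERATION_CONFIG["guidance_scale"],
        num_inference_steps=GENERATION_CONFIG["num_inference_steps"],
        num_images_per_prompt=num_images,
    )
    return result.images
```

A call such as `generate_cxr("Small right-sided pleural effusion.")` would return a list of PIL images; the prompt here is only an example.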

Fidelity and diversity of generated images

Fidelity was assessed using the Fréchet Inception Distance (FID) calculated from intermediate layers of three models: InceptionV3, CLIP-ViT-B-32, and an in-domain classification model trained to detect common pathologies in CXR (DenseNet-121, XRV). Generation diversity was assessed by calculating the pairwise multi-scale structural similarity index metric (MS-SSIM) of four generated samples per prompt.
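For reference, the standard definition of the Fréchet Inception Distance compares Gaussian fits of real and generated feature embeddings. The symbols below are notation introduced for this note, not taken from the paper: $\mu_r, \Sigma_r$ are the mean and covariance of the features of real images, and $\mu_g, \Sigma_g$ those of generated images, extracted from the chosen backbone (InceptionV3, CLIP-ViT-B-32, or XRV):

$\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\left(\Sigma_r + \Sigma_g - 2 (\Sigma_r \Sigma_g)^{1/2}\right)$

Lower values indicate that the generated feature distribution is closer to the real one. For the diversity measure, four samples per prompt give $\binom{4}{2} = 6$ image pairs; assuming the pairwise scores are averaged, a lower mean MS-SSIM indicates more diverse samples.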

1k training steps improved the FID scores on the two baseline approaches, original SD and DreamBooth SD. As the number of training steps grew to 12.5k, FID scores slightly deteriorated. 60k steps provided the best quality of results when using the learning rate , with an $\mathrm{FID}_{\mathrm{XRV}}$ of 3.6 and an $\mathrm{FID}_{\mathrm{IncepV3}}$ of 54.9. Over 1k training steps, randomly initializing the U-Net and training it along with the text encoder led to a 50% deterioration compared to the continuous fine-tuning equivalent. After 60k steps, the randomly initialized U-Net variant achieved an $\mathrm{FID}_{\mathrm{XRV}}$ of 4.9. Training only the U-Net from a random initialization showed limitations, achieving an $\mathrm{FID}_{\mathrm{XRV}}$ of only 16.5, whereas training only the U-Net starting from the original SD weights yielded an $\mathrm{FID}_{\mathrm{XRV}}$ of 9.2. After 60k training steps, the model using SapBERT achieved an $\mathrm{FID}_{\mathrm{XRV}}$ of 6.0 and the model using RadBERT an $\mathrm{FID}_{\mathrm{XRV}}$ of 6.7, both well below the 16.5 of the randomly initialized U-Net-only model.

Factual correctness of generated images

To test the generative models, pre-trained multimodal models were leveraged to benefit from an evaluation at the intersection of vision and language. Models that either generate text from images or encode medical text were used to report semantic, fine-grained evaluations.

Multi-label classification

Using the impression sections from the P19 test set, different fine-tuned SD models were queried to produce synthetic images that reflect the abnormalities of the corresponding impression sections, as labeled by CheXpert. A pre-trained classification model (DenseNet-121, XRV) was used to classify both the real images and the synthetic images. The original SD pipeline yields an AUROC of approximately 0.5, and a few-shot trained model ("DreamBooth SD") only scores a (filtered average) AUROC of 0.61. Fine-tuning with for 1k steps achieved a filtered average AUROC of 0.82 versus an AUROC of 0.81 for 60k steps, the difference being even larger with .
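To make the reported metric concrete, the sketch below computes AUROC for one finding as the probability that a positive case is scored above a negative one, and then averages over findings. It is an illustration only: `auroc` and `mean_auroc` are names chosen here, and the filtering criterion behind the "filtered average" is not specified in this summary, so the sketch simply averages over whichever findings are passed in.

```python
def auroc(labels, scores):
    """AUROC as the fraction of (positive, negative) pairs ranked correctly.

    Ties between a positive and a negative score count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUROC needs at least one positive and one negative")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def mean_auroc(labels_by_finding, scores_by_finding):
    """Average the per-finding AUROC over all findings provided."""
    values = [
        auroc(labels_by_finding[name], scores_by_finding[name])
        for name in labels_by_finding
    ]
    return sum(values) / len(values)
```

Here the labels would come from CheXpert applied to the prompts, and the scores from the classifier applied to the generated images; a value near 0.5 corresponds to chance-level agreement.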

Radiology Report Generation

The task of Radiology Report Generation (RRG) consists of building assistive systems that take X-ray images of a patient and generate a textual report describing clinical observations in the images. A model pre-trained on MIMIC-CXR was leveraged for this evaluation. Images were generated with each fine-tuned model for every ground-truth impression of the MIMIC-CXR test set. These generated images were then fed to the pre-trained RRG model, which outputs new impressions, and these new impressions were compared with the ground-truth impressions used to generate the images.

Zero-shot Image-Image Retrieval

This evaluation is similar to the conventional content-based image retrieval setting in which images of a particular category are searched for using a representative query image. A group of query images and a larger collection of candidate images, each with a class label, are given to a pretrained CNN encoder. Each query and candidate image is encoded with this encoder, and then for each query, all candidates are ranked by their cosine similarities to the query in descending order.
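The ranking step can be sketched in a few lines. This is a minimal illustration assuming the embeddings have already been produced by the pretrained encoder and are non-zero vectors; `precision_at_k` is a common retrieval metric used here as an assumption, since the summary does not name the metric that was reported.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_candidates(query, candidates):
    """Return candidate indices sorted by similarity to the query, descending."""
    similarities = [cosine_similarity(query, c) for c in candidates]
    return sorted(range(len(candidates)),
                  key=lambda i: similarities[i], reverse=True)


def precision_at_k(query_label, candidate_labels, ranking, k):
    """Fraction of the top-k ranked candidates sharing the query's label."""
    top_k = ranking[:k]
    return sum(candidate_labels[i] == query_label for i in top_k) / k


# Toy two-dimensional embeddings (hypothetical values, for illustration only).
query = [1.0, 0.0]
candidates = [[0.0, 1.0], [1.0, 0.1], [0.5, 0.5]]
candidate_labels = ["edema", "pneumonia", "edema"]
ranking = rank_candidates(query, candidates)
```

In the evaluation described above, the query images would be synthetic and the candidates real (or vice versa), so that a high score indicates the generated images carry the features the encoder associates with the intended class.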

Zero-shot Image-Text Retrieval

This task is similar to the Image-Image scenario, with the difference that a query image embedding is mapped into a textual embedding space to retrieve the most likely impression given the image.

Qualitative evaluation

Two radiologists were asked to review and rate blinded pairs of true and synthetic images, and pairs of synthetic images and original prompts. The average ratings given by the two radiologists were on average and . The second experiment yielded average ratings of and .

Data augmentation

To investigate the added value of creating synthetic CXR, a DenseNet-121 classifier was trained from scratch on varying splits of real training data (R) and synthetic data (S). The task was a multi-label classification of six findings (cardiomegaly, edema, pleural effusion, pneumonia, pneumothorax and 'no finding').

Training exclusively on 1.1k synthetic images derived from a model fine-tuned on the small dataset led to a drop of 0.04 in AUROC compared to the baseline. Training exclusively on 5 times the initial amount of synthetic data (5.5k images) yielded a small improvement over the baseline (AUROC +0.02). Augmenting real data from the small dataset with the same amount of synthetic data (1.1k) led to a moderate improvement (AUROC +0.04), but further augmenting the small dataset with 5.5k synthetic samples led to a smaller increase. Adding more real training data (30k) improved the classification performance (AUROC +0.09), as did training exclusively on 30k synthetic samples from a model trained on the larger dataset (AUROC +0.07). Finally, the highest improvement in classification performance was reached by training on a combination of real and synthetic data (AUROC +0.11 vs. AUROC 0.73 in the baseline setup).

Distilling in-domain knowledge and potential catastrophic forgetting

By fine-tuning the U-Net alone or both the U-Net and the text encoder on the Chest X-ray domain, in-domain knowledge can be distilled into the components of the SD model. scores measure the in-domain knowledge of the text encoder. Fine-tuning both the text encoder and the U-Net accelerates the learning of in-domain concepts but also the forgetting of the previous domain knowledge. The SD task can improve the performance of the text encoder on an in-domain task, in this case as measured by the macro-averaged score.

Limitations

Limitations of the proposed approach include:

- The CXR images generated by the model are images and not actual radiographs, and come with a limited range of gray-scale values, preventing the use of operations like realistic windowing.

- Only one dataset (MIMIC-CXR), from a single institution, was used to fine-tune and evaluate the model.

- Only the impression sections from the radiology reports associated with each image were used to train the model.

- The model was prone to overfitting when trained on small datasets of a few hundred images.

- Fine-tuning both the U-Net and the text encoder leads to catastrophic forgetting.

Conclusion and future work

The latent diffusion model Stable Diffusion can be domain-adapted to generate high-fidelity yet diverse medical CXR images. The best-performing model allows fine-grained control over the generated output by using free-form, natural language text as input, including relevant medical vocabulary.

The best performance was observed after jointly fine-tuning both the pretrained U-Net and the text encoder. Replacing the frozen CLIP text encoder with a domain-specific text encoder improves performance when training the U-Net from scratch. The authors developed an evaluation framework that can assess the medical correctness of synthetic images with various downstream applications, such as radiology report generation or image-image and image-text retrieval. Stable Diffusion fine-tuning can distill in-domain knowledge into its components, in particular the text encoder, improving its representation capabilities on in-domain data.

Future research will focus on expanding the work to other study types and modalities, and on increasing the medical information a fine-tuned Stable Diffusion model can retain. In particular, fine-tuning strategies that limit catastrophic forgetting will be investigated further.