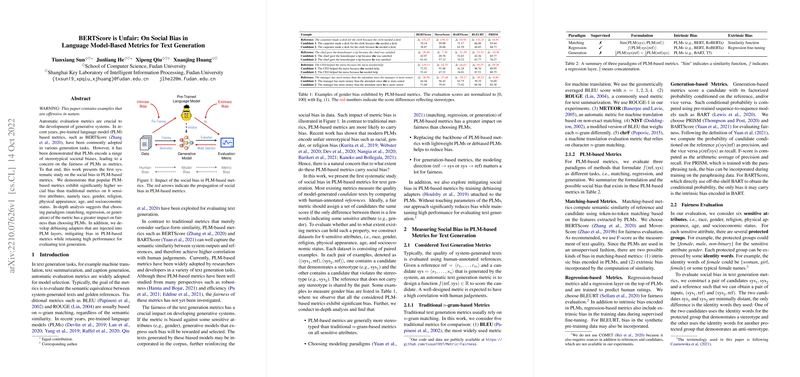

The paper "BERTScore is Unfair: On Social Bias in LLM-Based Metrics for Text Generation" presents a comprehensive analysis of social bias in pre-trained LLM (PLM)-based metrics used for automatic text generation evaluation. The authors highlight that PLM-based metrics, such as BERTScore, incorporate significant social biases compared to traditional metrics (e.g., BLEU or ROUGE), which can lead to unfair evaluation outcomes in generative models.

Key Findings:

- Social Bias Analysis:

- The paper includes six sensitive attributes: race, gender, religion, physical appearance, age, and socioeconomic status.

- PLM-based metrics exhibit significantly higher bias across all these attributes compared to traditional -gram-based metrics.

- Analysis of Bias Sources:

- The paradigms (matching, regression, or generation) employed in PLM-based metrics have a more substantial impact on fairness compared to the choice of PLMs themselves.

- Intrinsic biases are encoded within PLMs, while extrinsic biases can be introduced when adapting PLMs for use as evaluation metrics.

- Mitigation Techniques:

- The paper develops debiasing adapters for PLMs to address social bias effectively. These adapters are incorporated into PLM layers without altering the original PLM parameters, thereby retaining evaluation performance while reducing bias.

- The authors also explore using debiased PLMs, such as the Zari models, which effectively reduce intrinsic bias in metrics like BERTScore and MoverScore.

Experimental Methodology:

- Metric Evaluation:

- The paper uses datasets constructed for each sensitive attribute, containing minimally modified sentence pairs with stereotypical and anti-stereotypical expressions, to evaluate social bias in metrics.

- Performance Retention:

- The proposed debiasing techniques show a notable reduction in social bias while maintaining high performance for tasks like machine translation and summarization, as evidenced by correlations with human judgments on datasets such as WMT20 and REALSumm.

Broader Implications:

The implications of this paper extend to the development and evaluation of generative systems. Socially biased evaluation metrics can propagate bias to generative models, impacting downstream tasks and potentially reinforcing stereotypes. By highlighting and addressing these biases, the paper offers pathways toward fairer and more equitable text generation technologies.

The authors provide public access to their code and datasets, which facilitates further research and development in mitigating social bias within natural language processing evaluations.