Overview of SpeechUT: Bridging Speech and Text with Hidden-Unit for Encoder-Decoder Based Speech-Text Pre-training

The paper presents SpeechUT, a pre-training model that bridges the speech and text modalities using hidden-unit representations. It is pre-trained on unpaired speech and unpaired text data and targets encoder-decoder tasks such as automatic speech recognition (ASR) and speech translation (ST). Its central design choice is a shared unit encoder that connects the representations of a speech encoder and a text decoder, so that a single encoder-decoder model can benefit from both kinds of unpaired data during cross-modal pre-training.

Key Contributions

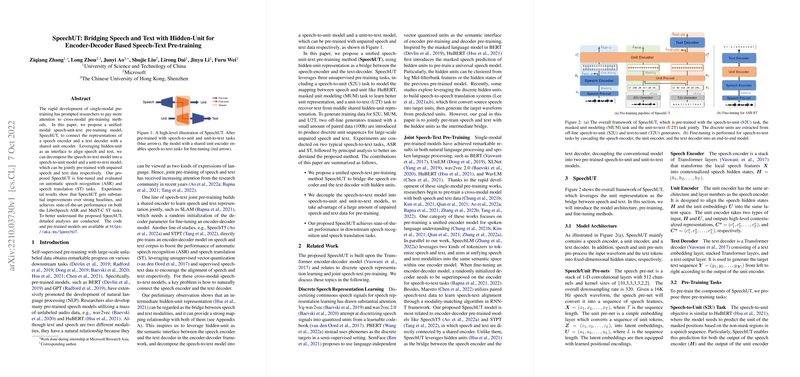

- Unified Speech-Unit-Text Pre-training Model: SpeechUT connects a speech encoder and a text decoder through a shared unit encoder. With hidden units as the interface, a speech-to-text model decomposes into a speech-to-unit (S2U) model and a unit-to-text (U2T) model, which are pre-trained jointly on unpaired speech and unpaired text data, respectively.

- Integration of Hidden Units: The approach uses discrete hidden units, obtained by clustering the features of a self-supervised speech model such as HuBERT, to align speech and text, providing a semantic interface between the two modalities.

- State-of-the-Art Performance: The model demonstrates significant improvements over existing baselines, achieving state-of-the-art results on the LibriSpeech ASR and MuST-C ST tasks.
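Hidden units of this kind are typically obtained by running k-means over frame-level features of a self-supervised model such as HuBERT and using each frame's cluster index as its unit. The sketch below illustrates only that mapping, on toy 2-D vectors standing in for HuBERT features; the helper name `kmeans_units`, the cluster count, and the seeded initialization are illustrative assumptions, not SpeechUT's actual configuration.

```python
import random
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def squared_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(frame: Sequence[float], centroids: List[Vector]) -> int:
    """Index of the centroid closest to `frame`; this index is the frame's hidden unit."""
    return min(range(len(centroids)), key=lambda k: squared_distance(frame, centroids[k]))


def kmeans_units(frames: List[Vector], k: int, iters: int = 20, seed: int = 0) -> List[int]:
    """Hypothetical helper: cluster frame features with plain k-means (Lloyd's
    algorithm) and return one discrete unit per frame."""
    rng = random.Random(seed)
    centroids: List[Vector] = rng.sample(frames, k)  # initialize from k distinct frames
    for _ in range(iters):
        # Assignment step: map every frame to its nearest centroid.
        assignments = [nearest(f, centroids) for f in frames]
        # Update step: move each centroid to the mean of the frames assigned to it.
        for c in range(k):
            members = [f for f, a in zip(frames, assignments) if a == c]
            if members:  # keep the previous centroid if a cluster is empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return [nearest(f, centroids) for f in frames]
```

For example, `kmeans_units([(0.0, 0.1), (0.1, 0.0), (5.0, 5.1), (5.1, 5.0), (0.05, 0.05)], k=2)` assigns the three frames near the origin one unit and the two frames near (5, 5) the other; which cluster receives index 0 is arbitrary. Real pipelines cluster high-dimensional features with many more clusters, but the frame-to-cluster-index mapping is the same idea.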

Technical Insights

- Pre-training Tasks: SpeechUT performs multi-task learning with three principal tasks:

  - Speech-to-Unit (S2U): Similar to HuBERT's masked prediction, this task predicts the unit categories of masked speech frames.

  - Unit-to-Text (U2T): A sequence-to-sequence task that reconstructs text from its unit representation, where the units are produced from unpaired text by a text-to-unit (T2U) generator.

  - Masked Unit Modeling (MUM): Inspired by BERT, this task predicts masked unit tokens to improve unit representation learning.

- Embedding Mixing Mechanism: This technique randomly replaces a portion of the speech features with the embeddings of their aligned units, strengthening the alignment between speech and unit representations.

- Pre-training and Fine-tuning: The model is pre-trained with a combination of speech, unit, and text data. For ASR and ST tasks, all modules, including the text decoder, are fine-tuned without introducing new parameters.
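Both S2U and MUM are masked-prediction objectives: part of the input sequence is masked, and the loss is computed only at the masked positions. The following is a minimal, stdlib-only sketch under stated assumptions: spans are started at each position with an independent probability (a simplification of HuBERT-style span masking), and `span_mask` and `masked_prediction_loss` are hypothetical helper names, not SpeechUT code.

```python
import math
import random
from typing import List, Sequence


def span_mask(length: int, start_prob: float, span: int, rng: random.Random) -> List[bool]:
    """Start a mask span at each position with probability `start_prob` and mask
    `span` consecutive positions from each start (spans may overlap)."""
    mask = [False] * length
    for start in range(length):
        if rng.random() < start_prob:
            for t in range(start, min(start + span, length)):
                mask[t] = True
    return mask


def masked_prediction_loss(
    logits: Sequence[Sequence[float]],
    targets: Sequence[int],
    mask: Sequence[bool],
) -> float:
    """Mean cross-entropy over masked positions only; unmasked positions are ignored."""
    losses = []
    for row, target, is_masked in zip(logits, targets, mask):
        if not is_masked:
            continue  # unmasked positions contribute nothing to the loss
        peak = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_norm = peak + math.log(sum(math.exp(x - peak) for x in row))
        losses.append(log_norm - row[target])  # -log softmax(row)[target]
    return sum(losses) / len(losses) if losses else 0.0
```

In the S2U case the masked inputs would be speech frames and the targets their hidden units; in the MUM case the masked inputs would be unit tokens replaced by a mask token, with the original units as targets.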
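Embedding mixing can be sketched as a per-frame coin flip: with some probability, a speech feature is swapped for the embedding of the unit aligned to it. This sketch assumes one unit per speech frame and independent per-frame replacement; the function `mix_embeddings`, the toy embedding table, and the `mix_prob` parameter are illustrative assumptions rather than the paper's exact procedure or hyperparameters.

```python
import random
from typing import List, Sequence

Vector = List[float]


def mix_embeddings(
    speech_feats: Sequence[Vector],
    units: Sequence[int],
    unit_table: Sequence[Vector],
    mix_prob: float,
    rng: random.Random,
) -> List[Vector]:
    """Hypothetical helper: replace each speech frame, with probability `mix_prob`,
    by the embedding of its aligned unit. Assumes frame-level alignment."""
    if len(speech_feats) != len(units):
        raise ValueError("speech features and units must be frame-aligned")
    mixed: List[Vector] = []
    for feat, unit in zip(speech_feats, units):
        if rng.random() < mix_prob:
            mixed.append(list(unit_table[unit]))  # swap in the unit embedding
        else:
            mixed.append(list(feat))  # keep the original speech feature
    return mixed
```

Because mixed sequences contain both speech features and unit embeddings at matching positions, the shared encoder is pushed to treat the two as interchangeable, which is the alignment effect the mechanism aims for.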

Experimental Results

SpeechUT performs strongly across benchmarks. On LibriSpeech ASR, it outperforms both encoder-only models such as wav2vec 2.0 and encoder-decoder models such as SpeechT5. On ST, it attains higher BLEU scores than recent work such as STPT, even with less pre-training data.

Implications and Future Directions

The successful integration of hidden units as a bridge between speech and text opens avenues for more efficient and scalable pre-training methods. The decoupled pre-training strategy could inspire future research in unified multi-modal learning. Potential areas of exploration include multilingual extensions and refining the T2U generator to eliminate dependency on paired ASR data.

This paper positions SpeechUT as a strong foundation for speech-related AI applications and underscores the value of intermediate representations for cross-modal alignment.