Overview of ERNIE-ViL 2.0: Multi-view Contrastive Learning for Image-Text Pre-training

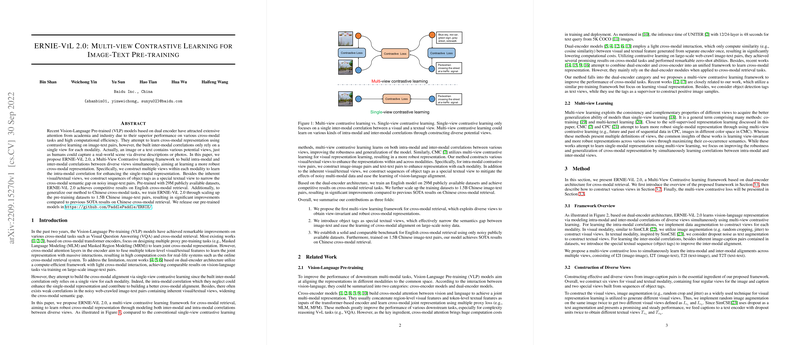

The paper "ERNIE-ViL 2.0: Multi-view Contrastive Learning for Image-Text Pre-training" presents a Vision-Language Pre-training (VLP) approach that makes cross-modal representations more robust through multi-view contrastive learning. It targets a limitation of existing dual-encoder VLP models. These models typically rely on single-view contrastive learning between one image view and one text view, so they miss intra-modal correlations that could improve the alignment between the image and text modalities.

Methodology

ERNIE-ViL 2.0 introduces a framework that learns intra-modal and inter-modal correlations simultaneously across multiple views. It does so by:

- Constructing multiple views within each modality to learn intra-modal correlations, thus enhancing individual modal representations.

- Incorporating object tags as special textual views to narrow semantic gaps in noisy image-text pairs, improving cross-modal alignment.
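
The view construction described in the list above can be illustrated with a small sketch. It assumes a hypothetical view set of two augmented image views, the caption, and a tag view. It also assumes that detector output arrives as `(label, score)` pairs, and that the tag view is simply the confident labels joined into a string. The names `VIEW_MODALITY`, `tags_to_text`, and `view_pairs` and the thresholds are illustrative only. Enumerating every pair of views is also an assumption; the paper may use a specific subset of view pairs.

```python
from itertools import combinations

# Hypothetical view set: two augmented image views, the caption, and a tag view.
# The names and the choice of views are illustrative assumptions, not the paper's config.
VIEW_MODALITY = {
    "image_aug1": "image",
    "image_aug2": "image",
    "caption": "text",
    "tags": "text",
}


def tags_to_text(detections, min_score=0.5, max_tags=10):
    """Build a textual 'tag view' from detector output [(label, score), ...].

    Keeps labels with score >= min_score, highest score first, dropping
    duplicates. min_score and max_tags are assumed values.
    """
    kept = []
    for label, score in sorted(detections, key=lambda d: d[1], reverse=True):
        # Scores are sorted in descending order, so once one falls below the
        # threshold (or the tag budget is used up) no later label qualifies.
        if score < min_score or len(kept) >= max_tags:
            break
        if label not in kept:
            kept.append(label)
    return " ".join(kept)


def view_pairs(view_modality):
    """List every unordered pair of views, tagged intra- or inter-modal."""
    pairs = []
    for a, b in combinations(view_modality, 2):
        kind = "intra" if view_modality[a] == view_modality[b] else "inter"
        pairs.append((a, b, kind))
    return pairs
```

For example, `tags_to_text([("dog", 0.9), ("frisbee", 0.8), ("dog", 0.7), ("grass", 0.3)])` returns `"dog frisbee"`. With the four views above, `view_pairs` yields six pairs. Two of them are intra-modal (image-image and caption-tags) and four are inter-modal (image-text).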

The framework is built on a dual-encoder architecture and pre-trained on large-scale datasets of 29 million English and 1.5 billion Chinese image-text pairs. The core of the approach is a multi-view contrastive loss, which learns from the correlations of different view pairs simultaneously in a scalable, flexible manner.
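
The multi-view contrastive loss can be sketched in plain Python. This sketch assumes that each view pair contributes a symmetric InfoNCE term, meaning a cross-entropy over in-batch cosine similarities in both directions as in CLIP-style training. It also assumes the per-pair terms are summed with a default weight of 1.0. The function names, the temperature of 0.07, and the optional `weights` argument are illustrative assumptions, not the paper's exact formulation. Embeddings within a view are aligned by index, so sample `i` in one view is the positive for sample `i` in the other.

```python
import math


def l2_normalize(v):
    """Scale v to unit length (all-zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


def info_nce(queries, keys, temperature=0.07):
    """One-directional InfoNCE: query i's positive is key i; every other key
    in the batch acts as a negative. Returns the mean loss over the batch."""
    q = [l2_normalize(v) for v in queries]
    k = [l2_normalize(v) for v in keys]
    total = 0.0
    for i, qi in enumerate(q):
        logits = [sum(a * b for a, b in zip(qi, kj)) / temperature for kj in k]
        m = max(logits)  # subtract the max for a numerically stable log-sum-exp
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        total += log_denom - logits[i]  # -log softmax probability of the positive
    return total / len(q)


def multi_view_contrastive_loss(views, pairs, temperature=0.07, weights=None):
    """Sum symmetric InfoNCE terms over the chosen view pairs.

    views:   dict mapping a view name to a list of embeddings (aligned by index).
    pairs:   list of (view_a, view_b) names, intra- or inter-modal.
    weights: optional {(view_a, view_b): weight}; defaults to 1.0 (an assumption).
    """
    weights = weights or {}
    loss = 0.0
    for a, b in pairs:
        symmetric = 0.5 * (info_nce(views[a], views[b], temperature)
                           + info_nce(views[b], views[a], temperature))
        loss += weights.get((a, b), 1.0) * symmetric
    return loss
```

Treating each view pair as an independent term lets new views be added just by listing extra pairs. A production version would compute the similarity matrix with batched tensor operations instead of Python loops.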

Experimental Results

The research demonstrates significant improvements in cross-modal retrieval. In English, the model achieved competitive performance on benchmarks such as Flickr30K and MSCOCO while using substantially less pre-training data than previous methods such as ALIGN. In Chinese, scaling pre-training to 1.5 billion pairs significantly outperformed previous state-of-the-art results, particularly in zero-shot retrieval on the AIC-ICC and COCO-CN datasets.

Implications and Future Directions

The paper's contributions matter most for efficient VLP models. By showing that multi-view contrastive learning can produce robust cross-modal representations, it points toward better use of multiple views of the same data. Practically, ERNIE-ViL 2.0 retains the computational efficiency of a dual encoder without sacrificing performance, which is crucial for real-world applications such as online retrieval systems.
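
The efficiency argument for dual encoders can be made concrete with a minimal retrieval sketch. The image and text encoders embed their inputs independently, so gallery embeddings can be computed once offline. A query then costs one encoder pass plus a similarity scan. The `EmbeddingIndex` class is hypothetical, the encoders are not shown, and the short vectors stand in for real model outputs. Large systems would typically replace the linear scan with an approximate nearest-neighbor index.

```python
import heapq
import math


def normalize(v):
    """Scale v to unit length so dot products equal cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v)) or 1.0
    return [x / norm for x in v]


class EmbeddingIndex:
    """Hypothetical gallery of precomputed embeddings (e.g. image embeddings)."""

    def __init__(self):
        self.ids = []
        self.vectors = []

    def add(self, item_id, embedding):
        # Done offline, once per gallery item; no query is needed here.
        self.ids.append(item_id)
        self.vectors.append(normalize(embedding))

    def search(self, query_embedding, k=5):
        """Return the top-k (item_id, cosine similarity) pairs for a query."""
        q = normalize(query_embedding)
        scored = [
            (item_id, sum(a * b for a, b in zip(q, v)))
            for item_id, v in zip(self.ids, self.vectors)
        ]
        return heapq.nlargest(k, scored, key=lambda pair: pair[1])
```

A cross-encoder, by contrast, has to run the full model on every (query, candidate) pair at query time. This is why the dual-encoder design suits latency-sensitive retrieval.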

The work also bears on cross-modal semantic understanding more broadly. It suggests that better construction of data views and better alignment can help models cope with the noise and variability of large datasets.

Future research could explore integrating such multi-view learning frameworks within cross-encoder architectures, potentially enhancing performance in more complex vision-language reasoning tasks. Additionally, expanding this approach to other languages and less curated datasets could further validate its versatility and applicability across different domains and data qualities.

In conclusion, ERNIE-ViL 2.0 is a significant contribution to VLP, demonstrating the potential of multi-view contrastive frameworks for efficient and scalable cross-modal learning.