The paper "Layer or Representation Space: What makes BERT-based Evaluation Metrics Robust?" investigates the robustness of BERTScore, a popular embedding-based metric for evaluating text generation, under domain divergence and noisy inputs. It challenges the common practice of evaluating embedding-based metrics only on standard datasets, which resemble the data used to pretrain the embeddings and therefore reveal little about how well the metrics generalize to other domains.

Key Insights and Contributions

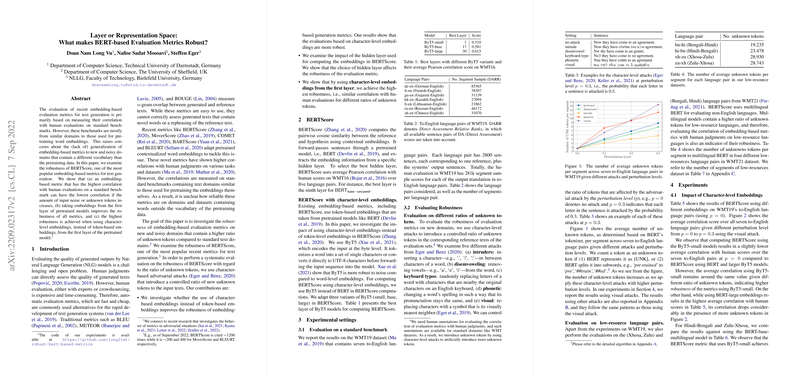

- Variable Correlation with Noise Levels: The paper shows that BERTScore correlates highly with human evaluations on standard datasets, but that this correlation can drop significantly when the input contains noise or a high ratio of unknown tokens.

- Layer Selection and Robustness: The paper demonstrates that taking embeddings from the first layer of a pretrained model improves robustness across different metrics. Robustness is assessed through adversarial attacks that introduce varying levels of unknown tokens into the input texts.

- Character-level Embeddings: The research finds that utilizing character-level embeddings, rather than traditional token-level embeddings, results in more robust evaluation metrics. Specifically, ByT5, a byte-level model, when used in BERTScore computations, showed improved robustness against noise.
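For readers unfamiliar with how BERTScore turns embeddings into a score, the greedy matching at its core can be sketched as follows. This is a minimal illustration, assuming token embeddings are already available as plain lists of floats and omitting the optional IDF weighting and baseline rescaling; the names `cosine` and `greedy_match_f1` are hypothetical and not taken from the paper or from any library.

```python
import math
from typing import Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def greedy_match_f1(cand: Sequence[Vector], ref: Sequence[Vector]) -> float:
    """BERTScore-style F1 without IDF weighting (a simplifying assumption).

    Precision: each candidate token is matched to its most similar reference token.
    Recall: each reference token is matched to its most similar candidate token.
    """
    if not cand or not ref:
        return 0.0
    precision = sum(max(cosine(c, r) for r in ref) for c in cand) / len(cand)
    recall = sum(max(cosine(r, c) for c in cand) for r in ref) / len(ref)
    total = precision + recall
    return 2 * precision * recall / total if total > 0 else 0.0


# Toy 2-dimensional "embeddings": the candidate covers only one of two reference tokens.
candidate = [[1.0, 0.0]]
reference = [[1.0, 0.0], [0.0, 1.0]]
print(greedy_match_f1(candidate, reference))
```

Because the score depends only on the token vectors, swapping the layer or the tokenization granularity (subword versus byte level) changes the inputs to this function but not the matching itself.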

Experimental Setup

- Datasets and Attacks: The experiments were conducted using WMT19 data, which comprises multiple language pairs. Robustness was assessed by introducing character-level adversarial attacks, such as inserting intrusive characters, disemvoweling, keyboard typos, phonetic spelling changes, and visual perturbations.

- Correlation Metrics: The paper utilized Kendall's rank correlation to quantify the alignment of BERTScore with human judgment across different noise levels. ByT5 embeddings were particularly noted for maintaining consistent performance across varying noise levels, suggesting greater robustness.
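The evaluation loop described above (perturb the input at a chosen noise level, rescore, then correlate with human judgments) can be illustrated with a small sketch. The two perturbation functions are simplified stand-ins for the disemvoweling and intrusive-character attacks; their names, the probability parameter `p`, and the choice of `-` as the intruder are assumptions made for illustration, not the paper's implementation. The correlation shown is plain Kendall tau-a; the exact variant used in the paper may differ.

```python
import random
from typing import Sequence


def disemvowel(text: str, p: float, rng: random.Random) -> str:
    """Drop each vowel with probability p (p acts as the noise level)."""
    return "".join(
        ch for ch in text if not (ch.lower() in "aeiou" and rng.random() < p)
    )


def insert_intruders(text: str, p: float, rng: random.Random, intruder: str = "-") -> str:
    """Insert an intruder character between adjacent alphanumerics with probability p."""
    out = []
    for i, ch in enumerate(text):
        out.append(ch)
        nxt = text[i + 1] if i + 1 < len(text) else ""
        if ch.isalnum() and nxt.isalnum() and rng.random() < p:
            out.append(intruder)
    return "".join(out)


def kendall_tau(x: Sequence[float], y: Sequence[float]) -> float:
    """Kendall tau-a: (concordant - discordant) pairs over all pairs."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    score = 0
    for i in range(n):
        for j in range(i + 1, n):
            s = (x[i] - x[j]) * (y[i] - y[j])
            score += (s > 0) - (s < 0)  # +1 concordant, -1 discordant, 0 tie
    return score / (n * (n - 1) / 2)


rng = random.Random(0)
print(disemvowel("robust metric", 1.0, rng))      # every vowel removed
print(insert_intruders("robust", 1.0, rng))       # intruder in every gap

# Hypothetical metric scores and human ratings for three segments.
print(kendall_tau([0.91, 0.55, 0.12], [3, 2, 1]))
```

In a full experiment, one would compute the metric on perturbed inputs for each value of `p` and track how the correlation with human ratings changes as `p` grows.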

Model and Methodology Impact

- Impact of Layer Choice: The paper highlights the sensitivity of BERTScore’s performance to the choice of hidden layer from which embeddings are extracted. Using embeddings from the first layer proved beneficial in maintaining robustness against adversarial inputs.

- Efficiency and Applicability: Using first-layer embeddings also speeds up evaluation, which matters for real-time applications, and offers an efficient way to use large models like ByT5 for robust text evaluation.
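As a rough illustration of what choosing a layer means in practice, the sketch below extracts token representations from a given hidden layer using the Hugging Face `transformers` library. It is a sketch under stated assumptions rather than the authors' code: the model name `bert-base-uncased`, the helper names `select_layer` and `embed_tokens`, and the reading of "first layer" as index 1 (index 0 being the embedding output) are all choices made here for illustration.

```python
from typing import Sequence


def select_layer(hidden_states: Sequence, layer: int = 1):
    """Pick one layer's output from a sequence of per-layer hidden states.

    With `output_hidden_states=True`, index 0 is the embedding output and
    index k is the output of the k-th Transformer layer.
    """
    if not 0 <= layer < len(hidden_states):
        raise IndexError(f"layer {layer} not in range 0..{len(hidden_states) - 1}")
    return hidden_states[layer]


def embed_tokens(text: str, model_name: str = "bert-base-uncased", layer: int = 1):
    """Return a (tokens, dim) tensor of representations from the chosen layer."""
    # Imported lazily so select_layer stays usable without these packages.
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()
    inputs = tokenizer(text, return_tensors="pt")
    with torch.no_grad():
        outputs = model(**inputs, output_hidden_states=True)
    # hidden_states is a tuple of (batch, tokens, dim) tensors; drop the batch axis.
    return select_layer(outputs.hidden_states, layer)[0]
```

Note that this sketch still runs the full forward pass and only reads off an early layer; realizing the speed-up mentioned above would require stopping computation after the chosen layer. An encoder-decoder model such as ByT5 would also need its encoder loaded separately rather than through `AutoModel` as shown here.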

Future Directions

The paper suggests extending this analysis beyond spelling variation to other robustness factors, such as factuality, fluency, and grammatical issues. The authors also intend to explore pixel-based text representations as a way to make text evaluation metrics more robust.

In summary, this research addresses the substantial challenges in evaluating the robustness of BERT-based metrics across diverse and noisy domains, emphasizing layer selection and granularity of embeddings as vital components for enhancing reliability. The findings prompt a reevaluation of existing metrics and foster the development of more generally applicable and robust text evaluation methodologies.