An Analysis of "Prompt-to-Prompt Image Editing with Cross Attention Control"

The paper "Prompt-to-Prompt Image Editing with Cross Attention Control" by Amir Hertz et al. offers a detailed exploration into the domain of text-driven image editing using large-scale text-conditioned image generation models. This research investigates the potential of leveraging cross-attention mechanisms within diffusion models to enable intuitive and effective image manipulation strictly via textual inputs.

Key Contributions

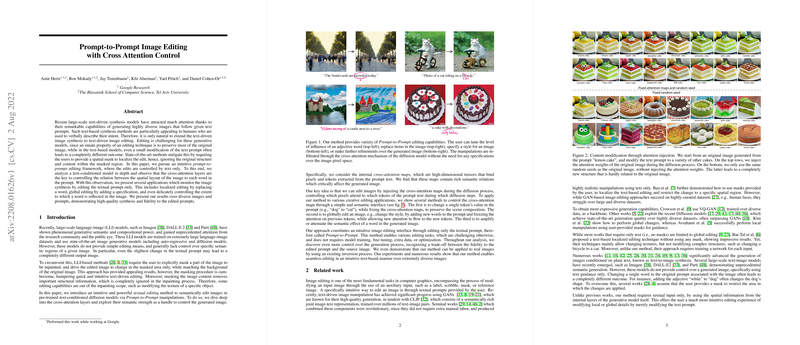

The core contribution of the paper lies in its introduction of an intuitive editing framework that exploits the cross-attention layers in diffusion models for Prompt-to-Prompt image editing. This technique allows users to manipulate generated images by merely modifying the text prompt. The framework facilitates several editing tasks without the need for spatial masks or additional data, which differentiates it from prior methods.

Methodology

A significant discovery outlined in the paper is that the cross-attention layers in text-to-image diffusion models hold semantic relationships between text tokens and spatial regions of an image. By modifying these attention maps, implicit control over image generation can be achieved.

Key Methodological Steps:

- Cross-Attention Analysis: The paper explores the cross-attention layers within the diffusion model, emphasizing their role in connecting image pixels to text tokens. The authors identify that these layers are crucial for maintaining the image structure in response to textual changes.

- Prompt-to-Prompt Framework: The proposed method involves generating two images simultaneously using the original and modified text prompts, and then injecting the cross-attention maps from the original generation into the modified prompt generation.

- Editing Applications:

- Word Swap: Modifying specific words in the text prompt and controlling the extent of structural preservation.

- Adding Phrases: Extending the text prompt with new attributes while preserving existing semantics and geometry.

- Attention Re-weighting: Adjusting the influence of specific words in the text prompt to control their impact on the generated image.

Results and Implications

Numerical and Qualitative Analysis:

The method is extensively evaluated over various scenarios, showcasing its versatility in handling both localized and global edits. The results include:

- Word Swap: Demonstrates the preservation of image structure while replacing elements (e.g., replacing "bicycle" with "car").

- Phrase Addition: Successfully adds new attributes (e.g., "snowy" added to a "mountain scene") while maintaining the context and structure.

- Attention Re-weighting: Provides fine-grained control over image attributes, such as the extent of "fluffiness" in a "fluffy red ball."

Empirical Findings:

The empirical results validate that cross-attention control can significantly enhance the fidelity of modified images to their original counterparts. Further, these manipulations are achieved without retraining or fine-tuning the underlying diffusion model.

Practical and Theoretical Implications

The introduction of the Prompt-to-Prompt framework has several practical and theoretical implications:

- Practical Usability: This method simplifies the user interaction model for image editing, reducing the barrier for non-expert users to perform complex edits.

- Theoretical Insights: It sheds light on the importance of cross-attention mechanisms in diffusion models and their potential for fine-tuned control in generated outputs.

- Model Agnostics: The approach is potentially extensible to other text-to-image models, indicating a broad applicability across different architectures and datasets.

Future Directions

Several future research avenues are suggested by this work:

- Refinement of Inversion Methods: Improving the accuracy of real image inversion to minimize distortions and enhance editability.

- Higher-Resolution Attention Maps: Incorporating cross-attention mechanisms in higher-resolution layers to enable more precise localized edits.

- Extended Control Mechanisms: Exploring further enhancements in semantic control, such as spatial manipulations and object movements within images.

Conclusion

The paper "Prompt-to-Prompt Image Editing with Cross Attention Control" stands as a significant contribution to the field of text-driven image manipulation. By harnessing the potential of cross-attention maps within diffusion models, the authors present a method that offers intuitive and flexible editing capabilities, paving the way for more accessible and sophisticated image generation tools. The implications of this research extend beyond the current scope, offering a foundational framework that can inspire further innovations in generative models and their applications.