Essay on "Inner Monologue: Embodied Reasoning through Planning with Language Models"

The paper "Inner Monologue: Embodied Reasoning through Planning with Language Models" by Huang et al. studies how large language models (LLMs) can serve as high-level planners for robotic control. Its focus is on applying the reasoning abilities that LLMs acquire during pre-training to embodied agents, so that those agents can complete complex, multi-step tasks by acting in their environment and responding to the feedback it provides.

Overview

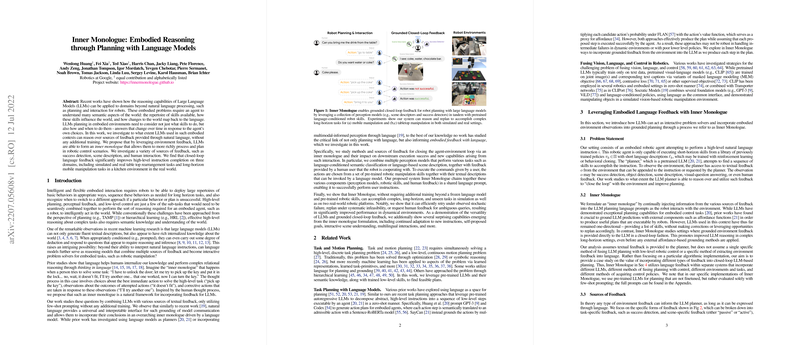

The paper addresses the problem of applying LLMs in embodied settings, where a robot acts in a changing environment and a plan written entirely in advance can fail. The key idea is to feed environment feedback back to the LLM as natural language, so that its prompt accumulates an "inner monologue" of actions, observations, and outcomes. With this running context, the LLM can revise its plan in response to several feedback types, such as success detection, scene descriptions, and human interaction, without any additional training.

The approach is evaluated in three domains: simulated tabletop rearrangement, real-world tabletop rearrangement, and real-world mobile manipulation in a kitchen. Across these domains, providing feedback such as scene descriptions and success detection substantially raises task completion rates compared with baselines that plan without feedback.

Methodology

The approach connects a pre-trained LLM planner to several feedback sources to form a closed loop. Language serves both as the interface for issuing plans and as the channel through which the planner learns what happened during execution, which lets it adapt mid-task. The feedback types studied are:

- Success Detection: Binary feedback about the completion of specific robotic actions.

- Scene Descriptions: Updates on the scene state that inform the LLM about current task progress.

- Human Feedback: Direct interaction with humans, allowing the LLM to ask questions and incorporate guidance.
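The closed loop described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: `llm`, `execute`, `success`, `describe_scene`, and `ask_human` are hypothetical callables standing in for the language model, the robot's low-level skills, a success detector, a scene describer, and a human, and the `Human:`/`Scene:`/`Robot:`/`Success:` line format is an illustrative choice rather than the paper's exact prompt format. Each feedback type simply becomes another line of text appended to the prompt before the next planning step.

```python
from typing import Callable, List


def inner_monologue(
    instruction: str,
    llm: Callable[[str], str],           # hypothetical: prompt text -> next step
    execute: Callable[[str], None],      # hypothetical: runs one low-level skill
    success: Callable[[str], bool],      # hypothetical success detector
    describe_scene: Callable[[], str],   # hypothetical scene describer
    ask_human: Callable[[str], str] = lambda q: "I don't know.",  # hypothetical human
    max_steps: int = 10,
) -> List[str]:
    """Plan one step at a time, appending textual feedback after each step."""
    transcript = [f"Human: {instruction}", f"Scene: {describe_scene()}"]
    for _ in range(max_steps):
        # The frozen LLM continues the transcript with its next step.
        step = llm("\n".join(transcript) + "\nRobot:").strip()
        transcript.append(f"Robot: {step}")
        if step == "done":
            break
        if step.endswith("?"):
            # Human feedback: the planner asked a question instead of acting.
            transcript.append(f"Human: {ask_human(step)}")
            continue
        execute(step)
        # Success detection and scene description, both rendered as text.
        transcript.append(f"Success: {success(step)}")
        transcript.append(f"Scene: {describe_scene()}")
    return transcript


# Usage with scripted stand-ins for the LLM, robot, and human.
steps = iter([
    "which bowl should I use?",
    "pick up the red block and place it in the blue bowl",
    "done",
])
log = inner_monologue(
    "put the red block in a bowl",
    llm=lambda prompt: next(steps),
    execute=lambda step: None,
    success=lambda step: True,
    describe_scene=lambda: "red block, blue bowl, green bowl",
    ask_human=lambda q: "the blue bowl",
)
print("\n".join(log))
```

Because the planner only ever sees text, adding a new feedback source means adding one more kind of line to the transcript; the planning loop itself does not change.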

The method relies on few-shot prompting of pre-trained LLMs, so it can be applied across different robot embodiments without retraining or fine-tuning the language model.
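Because the LLM is only prompted, adapting it to a new robot or task domain amounts to changing the few-shot examples in its prompt. The sketch below assembles such a prompt from worked episodes; the exemplar structure, the line prefixes, and the `build_prompt` helper are hypothetical illustrations, not the paper's actual prompts.

```python
from typing import List, Tuple

# Hypothetical exemplar: (instruction, lines of a worked episode with feedback).
Exemplar = Tuple[str, List[str]]


def build_prompt(exemplars: List[Exemplar], instruction: str, scene: str) -> str:
    """Place worked episodes ahead of the new task so that a frozen LLM can
    imitate their format when it continues the text after 'Robot:'."""
    episodes = ["\n".join([f"Human: {task}", *lines]) for task, lines in exemplars]
    episodes.append("\n".join([f"Human: {instruction}", f"Scene: {scene}", "Robot:"]))
    return "\n\n".join(episodes)


# One illustrative tabletop episode that retries a step after a reported failure.
tabletop_examples: List[Exemplar] = [
    ("stack the blue block on the red block", [
        "Scene: blue block, red block",
        "Robot: pick up the blue block and place it on the red block",
        "Success: False",
        "Robot: pick up the blue block and place it on the red block",
        "Success: True",
        "Robot: done",
    ]),
]

prompt = build_prompt(tabletop_examples, "put the green block in the bowl", "green block, bowl")
print(prompt)
```

Switching to a different robot would mean supplying a different exemplar list that uses that robot's skills; the model weights stay the same.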

Experimental Results

The experiments cover three environments:

- Simulated Tabletop Rearrangement: Here, Inner Monologue outperforms a multi-task CLIPort baseline, with success rates improving substantially when both object and scene feedback are integrated.

- Real-World Tabletop Rearrangement: In a real-world setting, the system adapts to noisy detections and suboptimal conditions, achieving a 90% success rate in tasks like block stacking and object sorting when combining object recognition and success feedback.

- Real-World Kitchen Mobile Manipulation: The method is also applied to a mobile manipulator in a real kitchen. There, Inner Monologue handles adversarial disturbances more robustly by replanning after failures, which improves success rates over the SayCan baseline.
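A toy simulation can illustrate why success feedback helps under disturbances. In the sketch below, a hypothetical `ToyKitchen` environment makes the first few skill executions fail, standing in for an adversary knocking objects away. The open-loop runner executes a fixed plan once, while the closed-loop runner reads the success signal and re-issues a failed skill. Re-issuing the same skill is a deliberate simplification (in Inner Monologue the LLM itself decides the next step from the feedback), the skill names are illustrative, and the example shows the mechanism rather than the paper's numbers.

```python
from typing import List


class ToyKitchen:
    """Hypothetical environment: the first `disturbances` skill executions
    are disrupted, as if an adversary knocked the object away."""

    def __init__(self, disturbances: int) -> None:
        self.remaining = disturbances
        self.completed: List[str] = []

    def execute(self, skill: str) -> bool:
        if self.remaining > 0:
            self.remaining -= 1
            return False  # success detector reports failure
        self.completed.append(skill)
        return True


def open_loop(env: ToyKitchen, plan: List[str]) -> bool:
    # Execute the fixed plan once, ignoring every outcome.
    for skill in plan:
        env.execute(skill)
    return env.completed == plan


def closed_loop(env: ToyKitchen, plan: List[str], max_retries: int = 3) -> bool:
    # Use success feedback: re-issue a failed skill up to max_retries times.
    for skill in plan:
        for _ in range(max_retries + 1):
            if env.execute(skill):
                break
        else:
            return False  # every attempt at this skill failed
    return env.completed == plan


plan = ["find a sponge", "pick up the sponge", "bring it to the user"]
print(open_loop(ToyKitchen(disturbances=1), plan))    # False
print(closed_loop(ToyKitchen(disturbances=1), plan))  # True
```

A single disruption is enough to break the open-loop run, while the closed-loop run recovers as long as the number of disruptions stays within its retry budget.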

Discussion and Implications

The results indicate that feeding environment feedback to the LLM substantially improves LLM-based robot planning. Beyond higher task success rates, the closed-loop setup also elicits emergent behaviors, such as adapting to new instructions issued mid-task, proposing alternative goals when the original goal is infeasible, and interacting in languages other than English.

More broadly, the paper suggests that pre-trained LLMs can act as the reasoning component of robots that operate in complex environments, provided their inputs are grounded in feedback from those environments. Because the LLM needs no additional training, the results also highlight how much can be achieved with the flexibility of pre-trained models alone.

Future work could automate feedback entirely, using stronger vision and scene-understanding models to reduce reliance on human-provided feedback. Expanding the range of task domains and developing better ways to combine multiple feedback sources could further improve LLM-based planning for robots.

Overall, this work is a significant step toward adaptable robotic systems that reason about and interact with their environment through a running, language-based monologue, loosely analogous to the way people think through a task while carrying it out.