Bridging the Gap between Object and Image-level Representations for Open-Vocabulary Detection

The paper entitled "Bridging the Gap between Object and Image-level Representations for Open-Vocabulary Detection" addresses a critical challenge in open-vocabulary detection (OVD). The authors propose a novel approach that enhances the generalization capability of OVD models to detect novel objects, utilizing a synergy of object-centric alignment and pseudo-labeling techniques.

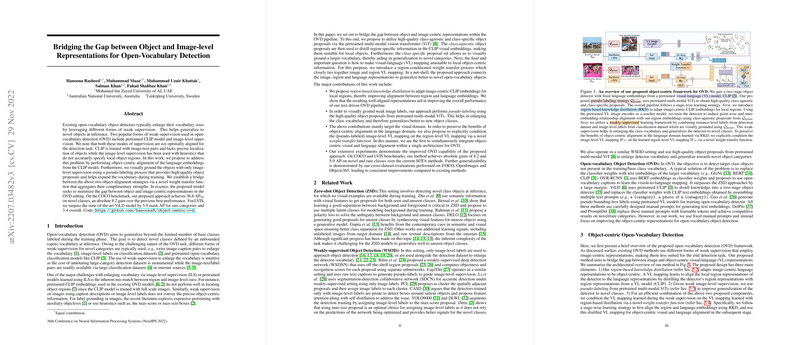

The core problem with existing OVD models is their reliance on image-centric representations, primarily through the use of pretrained models like CLIP and image-level supervision. These approaches, although beneficial in enlarging vocabulary, do not effectively localize objects or align image and object-level cues. By identifying this gap, the authors propose two key mechanisms: a region-based knowledge distillation (RKD) strategy and a pseudo-labeling process using a multi-modal vision transformer (ViT), enhancing the object-centric capabilities of the detection models.

Proposed Methodology

- Region-based Knowledge Distillation (RKD): The RKD approach adapts the image-centric CLIP embeddings to be more object-focused. Utilizing high-quality class-agnostic proposals obtained from pretrained multi-modal ViTs, this method distills knowledge from these proposals, ensuring better alignment between region and language embeddings. This strategy is particularly effective as it provides local, region-specific features that enhance the detection performance over novel classes.

- Pseudo-labeling for Image-level Supervision (ILS): To generalize the detector to novel categories without exhaustive annotation, the paper introduces an ILS approach using pseudo-labels. Through a pseudo-labeling process, high-quality class-specific proposals are generated, enabling the model to be trained on larger vocabularies while maintaining effective localization. This mechanism significantly enhances the detector’s vocabulary and its ability to generalize to unseen classes.

- Weight Transfer Function: The innovative weight transfer function bridges the two aforementioned strategies. By conditioning the language-to-visual mapping of the ILS on the object-centric alignment obtained from the RKD, this function ensures an integrated representation that optimally leverages the advantages of both approaches. This encapsulates the paper's central contribution, establishing a novel framework that effectively connects image, region, and language representations within the OVD pipeline.

Results and Implications

The empirical results demonstrate substantial improvements, with the proposed framework achieving notable gains on benchmark datasets such as COCO and LVIS. Specifically, the model achieves a noteworthy 36.6 AP on novel classes of COCO, marking an 8.2 gain over previous methods, and thereby setting a new state-of-the-art for OVD tasks. Further evaluations show analogous improvements in cross-dataset evaluations over OpenImages and Objects365, highlighting the robustness and generalizability of the proposed model.

Conclusion and Future Prospects

This research makes significant strides in addressing the shortcomings of existing OVD systems by advancing object-centric alignment methodologies and integrating image and region-level representations. The proposed framework sets a new standard for OVD approaches, offering promising directions for future research. Potential avenues include exploring more sophisticated pseudo-labeling strategies and intricate weight transfer functions that can further enhance the adaptability and efficiency of OVD systems.

The implications of this work extend beyond traditional object detection tasks, laying a foundation for more intuitive and capable vision-language interactions in AI systems. Future research might delve into optimizing the balance between image-level and object-level cues, reducing computational overhead without compromising performance, and expanding open-vocabulary detection to encompass a broader spectrum of real-world applications.