Scaling Autoregressive Models for Content-Rich Text-to-Image Generation

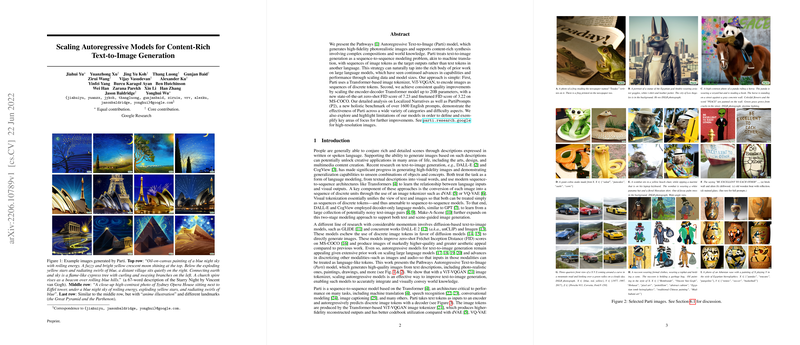

The paper "Scaling Autoregressive Models for Content-Rich Text-to-Image Generation" presents a method for generating high-fidelity, photorealistic images from textual descriptions using autoregressive models. The approach, called the Pathways Autoregressive Text-to-Image (Parti) model, treats text-to-image generation as a sequence-to-sequence modeling problem, similar to machine translation in natural language processing, except that the output is a sequence of image tokens rather than text tokens in another language.

Methodology Overview

The proposed model operates in two stages:

- Image Tokenization: Utilizing a Transformer-based image tokenizer, ViT-VQGAN, images are first encoded as sequences of discrete tokens. This tokenizer improves upon previous models like dVAE and VQ-VAE by producing higher fidelity outputs and better codebook utilization.

- Autoregressive Modeling: An encoder-decoder Transformer model is trained to predict sequences of image tokens from sequences of text tokens. The model builds on the architectures and scaling practices of large language models and is scaled up to 20 billion parameters.
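The two stages above can be illustrated with a toy sketch. This is not the paper's implementation: the codebook, the patch vectors, and the `toy_logits` function are hypothetical stand-ins for a trained ViT-VQGAN and a trained encoder-decoder Transformer, and greedy decoding is used here only to keep the example deterministic.

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]


def quantize(patches: List[Vector], codebook: List[Vector]) -> List[int]:
    """Stage 1 (toy): map each patch embedding to the index of its nearest codebook entry."""
    def sq_dist(a: Vector, b: Vector) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    return [
        min(range(len(codebook)), key=lambda k: sq_dist(patch, codebook[k]))
        for patch in patches
    ]


def greedy_decode(
    text_tokens: List[int],
    next_token_logits: Callable[[List[int], List[int]], List[float]],
    num_image_tokens: int,
) -> List[int]:
    """Stage 2 (toy): emit image tokens one at a time, each conditioned on the
    text tokens and on all previously emitted image tokens."""
    image_tokens: List[int] = []
    for _ in range(num_image_tokens):
        logits = next_token_logits(text_tokens, image_tokens)
        # Greedy choice: take the highest-scoring token (a simplification).
        image_tokens.append(max(range(len(logits)), key=logits.__getitem__))
    return image_tokens


def toy_logits(text_tokens: List[int], image_tokens: List[int]) -> List[float]:
    """Hypothetical stand-in for a trained Transformer over a 4-token image vocabulary."""
    vocab_size = 4
    target = (sum(text_tokens) + len(image_tokens)) % vocab_size
    return [1.0 if k == target else 0.0 for k in range(vocab_size)]


# Stage 1: four 2-D "patch embeddings" quantized against a 4-entry codebook.
codebook = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
patches = [(0.1, 0.2), (0.9, 0.1), (0.2, 0.8), (0.95, 0.9)]
tokens = quantize(patches, codebook)

# Stage 2: generate four image tokens from a two-token "text prompt".
generated = greedy_decode([2, 3], toy_logits, num_image_tokens=4)

print(tokens)     # [0, 1, 2, 3]
print(generated)  # [1, 2, 3, 0]
```

In a real system the generated token sequence would be passed back through the tokenizer's decoder to reconstruct pixels; that step is omitted here.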

Key Results and Features

- State-of-the-Art Performance: The 20B-parameter Parti model achieves a zero-shot FID score of 7.23 and a finetuned FID score of 3.22 on the MS-COCO dataset, surpassing existing autoregressive models like Make-A-Scene and recent diffusion models such as DALL-E 2 and Imagen.

- Versatile Benchmarking: The model's efficacy is evaluated not only on the MS-COCO dataset but also on the more challenging Localized Narratives dataset. Additionally, the authors introduce the PartiPrompts benchmark containing over 1600 prompts across diverse categories to holistically assess model performance.

- Improvement with Scale: The research demonstrates that scaling the model size from 350M to 20B parameters consistently enhances text-image alignment and overall image quality.

- Robust Evaluative Framework: Human evaluations compare the 20B-parameter Parti model with a strong retrieval baseline and with XMC-GAN, affirming the model’s superiority in both image realism and alignment with text descriptions.
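For readers unfamiliar with the metric, the FID scores quoted above are Fréchet Inception Distances, where lower is better. The standard definition is reproduced below for reference. The symbols are notation chosen here, not taken from the paper: $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ denote the mean and covariance of Inception-network features for real and generated images, respectively.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2} \right)
```

The score is zero when the two feature distributions have identical means and covariances. "Zero-shot" here means the model is evaluated on MS-COCO without being trained on it, while "finetuned" means it was further trained on MS-COCO data before evaluation.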

Practical and Theoretical Considerations

Practical Implications

The Parti model has implications for fields like digital art, content creation, and design. Its capacity to generate detailed and contextually rich images from complex textual prompts could lower the time and technical barriers that non-experts face in these areas. It could also serve as a tool for enhancing educational materials and supplying creative industries with novel visual content.

Theoretical Implications

From a theoretical perspective, this work underscores the versatile applicability of transformer-based models beyond traditional NLP tasks, extending their utility to complex multimodal generation tasks. The research delineates how scaling models significantly improves performance, an insight that could inform future work on the scalability of multimodal models.

Future Prospects

Several avenues for future development are suggested:

- Integration with Diffusion Models: While autoregressive models show promising results, combining them with diffusion models could further improve image generation quality by leveraging the strengths of both modeling approaches.

- Bias and Safety: Extensive work is needed to understand and mitigate biases inherent in training data, ensuring ethical deployment of the technology.

- Enhanced Control and Precision: Current limitations in handling negation, complex spatial relations, and precise stylistic control could be addressed, potentially through fine-tuning strategies or additional constraints during sampling.

Conclusion

The "Scaling Autoregressive Models for Content-Rich Text-to-Image Generation" paper advances the state of the art in text-to-image generation, demonstrating the capabilities of large-scale autoregressive models. Its performance analysis, benchmarking on MS-COCO, Localized Narratives, and PartiPrompts, and clear account of open problems give a useful framework for further research and application. The paper's contributions pave the way for more sophisticated and ethically sound applications of text-to-image generation technologies.