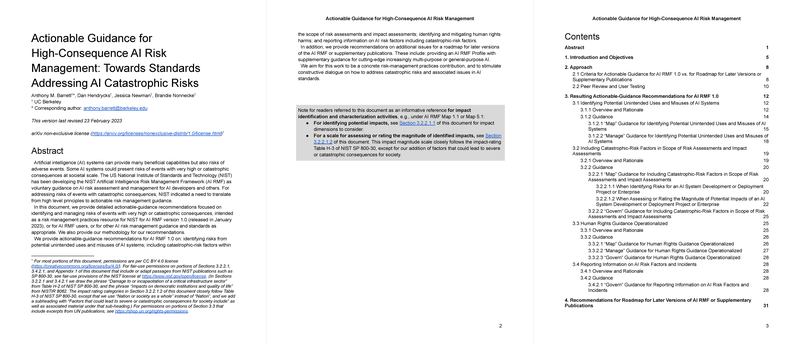

Actionable Guidance for High-Consequence AI Risk Management: Towards Standards Addressing AI Catastrophic Risks

This paper, by Barrett, Hendrycks, Newman, and Nonnecke, addresses the need for rigorous standards and guidelines to manage catastrophic risks from advanced AI systems. To translate high-level risk management principles into actionable guidance, the authors offer specific recommendations for the National Institute of Standards and Technology's (NIST) Artificial Intelligence Risk Management Framework (AI RMF).

Overview

AI systems offer transformative potential but also pose risks of catastrophic, societal-scale events. Recognizing this, NIST began developing the AI RMF, a set of voluntary guidelines for assessing and managing AI risks. However, the framework offered too little detail on high-impact risks, which motivated the authors to propose actionable guidance.

Key Contributions

- Risk Identification and Management: The paper advocates for systematic processes to foresee potential unintended uses and misuses of AI systems. It highlights the necessity of integrating human rights considerations into AI development and proposes methodologies for impact identification and assessment that account for catastrophic risk factors.

- Scope and Impact Assessments: With a focus on early identification, the authors recommend strategies for including catastrophic-risk factors within risk and impact assessments. They emphasize evaluating long-term impacts and strengthening risk management practices to address systemic risks and novel "Black Swan" events, which are often overlooked.

- AI RMF 1.0 Recommendations: The authors give detailed recommendations for NIST's AI RMF 1.0, including criteria for risk assessment at different stages of AI development, and stress the importance of compatibility with the AI lifecycle and with enterprise risk management, as well as integration with existing standards.

- Future Directions: The authors suggest creating an AI RMF Profile dedicated to multi-purpose or general-purpose AI systems, such as foundation models like GPT-3 or DALL-E, which pose unique risks because of their broad applicability and emergent properties.

Methodological Approach

The paper takes a proactive approach to drafting guidance, prioritizing tractable components with immediate relevance. The recommendations are framed so that they can be readily incorporated into existing enterprise risk management processes and aligned with other international standards (e.g., ISO/IEC, IEEE).

Implications and Future Directions

The authors stress the importance of addressing potential cascading failures of advanced AI systems, which could spread across multiple sectors. By aligning AI system objectives with human values and integrating rigorous risk management protocols, the paper aims to mitigate catastrophic risks while still fostering innovation. In the longer term, the authors hope these guidelines will inform legislative mandates and industry practices and promote safer AI deployment.

The proposed roadmap for the AI RMF includes more extensive governance mechanisms and the characterization of AI systems' generality and potential for recursive improvement, all aimed at navigating the transformative impacts of emerging AI technologies and curtailing their risks.

In conclusion, the paper offers a detailed guide to managing AI risks, combining practical guidance with a strategic vision for future risk management frameworks. It calls for broader discussion of AI governance and underscores the urgency of developing robust, scalable solutions for mitigating AI risk in high-consequence scenarios.