Analyzing BridgeTower: Enhancements in Vision-Language Representation Learning

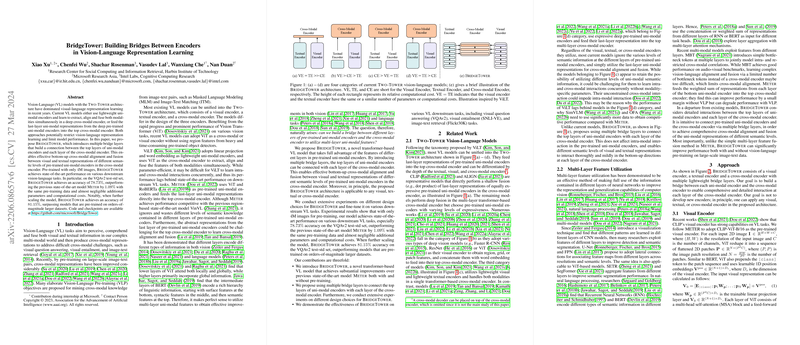

The research paper "BridgeTower: Building Bridges Between Encoders in Vision-Language Representation Learning" presents an approach to vision-language (VL) representation learning that addresses limitations of existing Two-Tower VL models. The authors introduce BridgeTower, a design that connects the top layers of the uni-modal encoders with each layer of the cross-modal encoder through multiple bridge layers. The aim is to improve cross-modal alignment and fusion through bottom-up interactions between the different semantic levels of the pre-trained uni-modal encoders.

Existing Challenges in Vision-Language Models

Conventional VL models predominantly adopt a Two-Tower architecture: separate visual and textual encoders followed by a cross-modal encoder. These models generally fall into two categories. The first uses lightweight uni-modal encoders and leaves alignment and fusion to be learned simultaneously in the cross-modal encoder. The second uses deep pre-trained uni-modal encoders and feeds their outputs into a cross-modal encoder placed on top. Both have limitations that impair VL representation learning. Models in the second category use only the last-layer outputs for cross-modal interaction, overlooking the semantic knowledge embedded in the other layers of the pre-trained uni-modal encoders. In the first category, learning intra-modal and cross-modal interactions at the same time can hinder both, as in models with lightweight uni-modal encoders such as ViLT.

The BridgeTower Approach

BridgeTower introduces multiple bridge layers that connect the top layers of the visual and textual encoders to each layer of the cross-modal encoder. Rather than receiving only the final uni-modal output, every cross-modal layer receives the representation from a corresponding top uni-modal layer. This enables bottom-up alignment and fusion of representations from different semantic levels of the visual and textual encoders inside the cross-modal encoder.
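The wiring described above can be illustrated with a small sketch. It is a simplified illustration under stated assumptions, not the authors' implementation: it assumes the bridge combines its two inputs by element-wise addition followed by layer normalization (one of several possible bridge designs), it follows a single modality stream, and it uses plain callables as stand-ins for real cross-modal layers, which would also attend to the other modality. The names `layer_norm`, `bridge`, and `run_bridged_encoder` are hypothetical.

```python
import math
from typing import Callable, List

Vector = List[float]
Sequence = List[Vector]  # one vector per text token or image patch


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """Normalize a vector to zero mean and unit variance (no learned scale or shift)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def bridge(cross_modal: Sequence, uni_modal: Sequence) -> Sequence:
    """Assumed bridge design: position-wise add, then layer normalization."""
    return [
        layer_norm([c + u for c, u in zip(c_vec, u_vec)])
        for c_vec, u_vec in zip(cross_modal, uni_modal)
    ]


def run_bridged_encoder(
    uni_modal_top_layers: List[Sequence],
    cross_layers: List[Callable[[Sequence], Sequence]],
) -> Sequence:
    """Feed each cross-modal layer a mix of the previous cross-modal output
    and the output of one of the top uni-modal layers, ordered bottom-up."""
    if len(uni_modal_top_layers) != len(cross_layers):
        raise ValueError("need one uni-modal layer output per cross-modal layer")
    # Assumption: the first cross-modal layer sees the uni-modal output directly.
    hidden = uni_modal_top_layers[0]
    for i, layer in enumerate(cross_layers):
        if i > 0:
            hidden = bridge(hidden, uni_modal_top_layers[i])
        hidden = layer(hidden)
    return hidden


# Toy usage: three top uni-modal layer outputs, two tokens, hidden size four.
tops = [
    [[0.1 * (k + 1) * (j + 1) + t for j in range(4)] for t in range(2)]
    for k in range(3)
]
identity_layers = [lambda seq: seq for _ in range(3)]  # stand-ins for real layers
fused = run_bridged_encoder(tops, identity_layers)
```

A conventional Two-Tower model of the second kind corresponds to passing only the last uni-modal output into the first cross-modal layer and never calling `bridge`. The sketch makes the difference visible: each later cross-modal layer is given an additional uni-modal input from a different depth.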

The paper evaluates several bridge-layer designs and a range of transformer-based architectures on downstream tasks to validate the approach. Pre-trained on only four million images, BridgeTower achieved state-of-the-art performance, outperforming models such as METER that were pre-trained at a similar scale. For instance, on the VQAv2 test-std set, BridgeTower reached 78.73% accuracy, surpassing METER by 1.09 percentage points with almost no additional parameters or computational cost.

Practical and Theoretical Implications

From a practical standpoint, BridgeTower's architecture is flexible and can be applied to any transformer-based model, whether uni-modal or multi-modal. Because it adds few parameters and little computation, it scales without compromising efficiency. Theoretically, BridgeTower challenges the standard practice of relying solely on last-layer encoder outputs in representation learning. It encourages multi-layer interaction in cross-modal learning, which may open new avenues for VL representation designs.

Future Directions

Looking ahead, the bridge-layer approach could be further optimized and extended to larger datasets and to other tasks such as vision-language generation or uni-modal tasks, potentially serving as a foundation for more diverse and complex multi-modal learning problems. Exploring different pre-training objectives, including tasks that focus on image and text structure simultaneously, could also improve knowledge extraction and the usability of the learned representations.

In conclusion, BridgeTower improves vision-language models by integrating the multi-layered semantic knowledge of pre-trained encoders. Further research in this direction could yield additional gains in understanding and using multi-modal data efficiently.