Injecting Domain Adaptation with Learning-to-hash for Effective and Efficient Zero-shot Dense Retrieval

This paper addresses a central challenge in neural information retrieval: retrieving both efficiently and effectively across diverse, unseen domains. Dense retrieval models overcome the lexical gap but need large amounts of memory to index vast document corpora. The paper studies how to compress dense representations with learning-to-hash (LTH) techniques, focusing on the zero-shot retrieval setting.

Overview and Methods

Dense retrieval models, while effective, often face scalability issues because dense indexes require a large amount of memory to store. LTH techniques such as BPR and JPQ address this by converting dense embeddings into compact codes (binary hash codes in BPR, product-quantization codes in JPQ), which reduces memory consumption significantly. However, previous work has focused primarily on in-domain retrieval and has not evaluated these techniques comprehensively across multiple domains.
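
To make the memory argument concrete, the following is a minimal sketch of binary hashing, not the BPR or JPQ implementation. It assumes a simple sign threshold as the hash function (BPR learns its hash function during training), stores each code as a Python integer, and ranks documents by Hamming distance. The function names `binarize`, `hamming`, `search`, and `index_bytes` are hypothetical and chosen for illustration.

```python
def binarize(vec):
    """Map a dense vector to a bit code: bit i is set when vec[i] > 0.

    Assumption: a plain sign threshold stands in for a learned hash function.
    """
    code = 0
    for i, x in enumerate(vec):
        if x > 0:
            code |= 1 << i
    return code


def hamming(a, b):
    """Count the bit positions in which two codes differ."""
    return bin(a ^ b).count("1")


def search(query_vec, doc_codes, k=3):
    """Return the indices of the k documents nearest to the query in Hamming space."""
    q = binarize(query_vec)
    ranked = sorted(range(len(doc_codes)), key=lambda i: hamming(q, doc_codes[i]))
    return ranked[:k]


def index_bytes(num_docs, dim, bits_per_dim):
    """Raw index size in bytes, ignoring any container overhead."""
    return num_docs * dim * bits_per_dim // 8


if __name__ == "__main__":
    docs = [[1.0, 1.0, 1.0], [-1.0, -1.0, -1.0], [1.0, -1.0, 1.0]]
    codes = [binarize(d) for d in docs]
    print(search([1.0, 1.0, 0.5], codes, k=2))
    # float32 stores 32 bits per dimension, a binary code stores 1 bit
    print(index_bytes(1000, 768, 32) / index_bytes(1000, 768, 1))
```

Under these assumptions, replacing 32-bit floats with one bit per dimension shrinks the raw index by a factor of 32 regardless of the embedding dimension. Systems of this kind typically use the compact codes to generate candidates quickly and may rerank the candidates afterwards.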

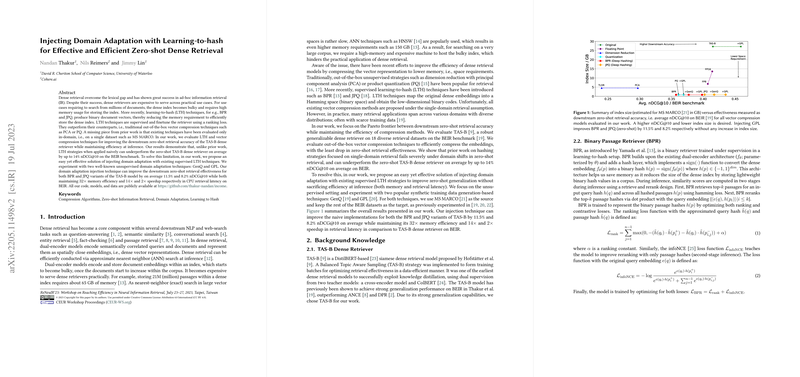

This paper evaluates the downstream zero-shot retrieval performance of the TAS-B dense retriever complemented by LTH strategies on the BEIR benchmark. Notably, the paper identifies a performance gap when LTH techniques are applied naively, observing up to a 14% drop in nDCG@10 compared to the TAS-B dense retriever. To address this shortfall, the authors propose incorporating domain adaptation techniques into the LTH framework.
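
Since every result in this summary is reported in nDCG@10, a short sketch of the metric may help. It assumes the linear-gain formulation (gain equal to the relevance label); some toolkits use an exponential gain instead, so values can differ for graded labels. The names `dcg_at_k`, `ndcg_at_k`, and `qrels` are illustrative and not taken from the paper.

```python
import math


def dcg_at_k(relevances, k=10):
    """Discounted cumulative gain over the top-k relevance labels (linear gain)."""
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))


def ndcg_at_k(ranked_doc_ids, qrels, k=10):
    """nDCG@k for one query.

    ranked_doc_ids: document ids in the order the system returned them.
    qrels: dict mapping document id to its relevance label (missing ids count as 0).
    """
    gains = [qrels.get(doc_id, 0) for doc_id in ranked_doc_ids]
    ideal = sorted(qrels.values(), reverse=True)
    idcg = dcg_at_k(ideal, k)
    return dcg_at_k(gains, k) / idcg if idcg > 0 else 0.0


if __name__ == "__main__":
    qrels = {"d1": 1, "d2": 1}
    print(ndcg_at_k(["d1", "d2", "d3"], qrels))  # ideal ordering
    print(ndcg_at_k(["d3", "d1", "d2"], qrels))  # relevant documents pushed down one rank
```

A benchmark score is the mean of this per-query value over all queries in a dataset, and a benchmark-wide figure averages again over datasets.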

Two unsupervised domain adaptation strategies, GenQ and GPL, are explored. Integrating them with existing LTH techniques improves retrieval effectiveness: injecting domain adaptation raises nDCG@10 by 11.5% for BPR and by 8.2% for JPQ, while preserving the substantial memory savings and retrieval-latency speedups that LTH provides.
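
As a rough illustration of the training signal behind GPL, the sketch below computes a margin-MSE loss: the retriever's score margin between a positive and a negative passage is pushed toward a margin supplied by a teacher model for the same synthetic query. This is a simplified sketch under stated assumptions, not the authors' training code. Embeddings are plain Python lists, scores are dot products, the teacher margins are given as inputs, and the names `dot` and `margin_mse` are hypothetical.

```python
def dot(a, b):
    """Dot-product similarity between two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def margin_mse(query_vecs, pos_vecs, neg_vecs, teacher_margins):
    """Mean squared error between student and teacher score margins.

    Each position i describes one training triple:
      query_vecs[i], pos_vecs[i], neg_vecs[i] are student embeddings;
      teacher_margins[i] is the teacher's score(query, pos) - score(query, neg).
    """
    errors = []
    for q, p, n, target in zip(query_vecs, pos_vecs, neg_vecs, teacher_margins):
        student_margin = dot(q, p) - dot(q, n)
        errors.append((student_margin - target) ** 2)
    return sum(errors) / len(errors)


if __name__ == "__main__":
    queries = [[1.0, 0.0]]
    positives = [[2.0, 0.0]]
    negatives = [[0.5, 0.0]]
    # The student margin is 2.0 - 0.5 = 1.5; a teacher margin of 2.5 leaves a squared error of 1.0
    print(margin_mse(queries, positives, negatives, [2.5]))
```

In an actual training loop this loss would be minimized by gradient descent over the encoder parameters; the sketch only shows how the loss value is formed from the triples.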

Experimental Results

The experimental results underscore the limitations of applying LTH strategies without domain adaptation. On average, BPR underperformed the zero-shot TAS-B retriever by 5.8 nDCG@10 points when domain adaptation was not used. With domain adaptation, both BPR and JPQ improved substantially in retrieval accuracy, effectively closing the gap with the zero-shot baseline.

Notably, JPQ combined with GPL did more than recover the lost accuracy: it surpassed the original zero-shot TAS-B retriever by 2.0 nDCG@10 points on the BEIR benchmark. This indicates that domain adaptation can make LTH strategies robust and applicable beyond the in-domain scenarios in which they are commonly evaluated.

Practical and Theoretical Implications

The paper's findings highlight the role of domain adaptation in deploying efficient and accurate retrieval systems across varied domains. Injecting domain adaptation into LTH strategies makes it feasible to achieve high retrieval quality without giving up memory efficiency. This matters for real-world applications, where memory and compute resources are often constrained.

Theoretically, the paper suggests avenues for future research on deeper integration of domain adaptation and LTH methods, with the goal of generalizing across heterogeneous domains while remaining efficient.

Conclusion

This paper shows that zero-shot dense retrieval can be improved by combining unsupervised domain adaptation with LTH. Because the combination improves retrieval accuracy while retaining the resource efficiency of compressed indexes, it offers a promising direction for neural information retrieval. Future work may explore more robust models and additional compression algorithms to further improve performance across diverse retrieval tasks.