- The paper introduces a novel Brownian Bridge diffusion process that enables direct, bidirectional image translation without relying on conditional generation.

- It demonstrates improved efficiency and image quality, outperforming models such as CycleGAN and LDM on FID and LPIPS.

- The approach is robust across varied datasets, suggesting promising applications in semantic synthesis and style translation.

Analysis of "BBDM: Image-to-image Translation with Brownian Bridge Diffusion Models"

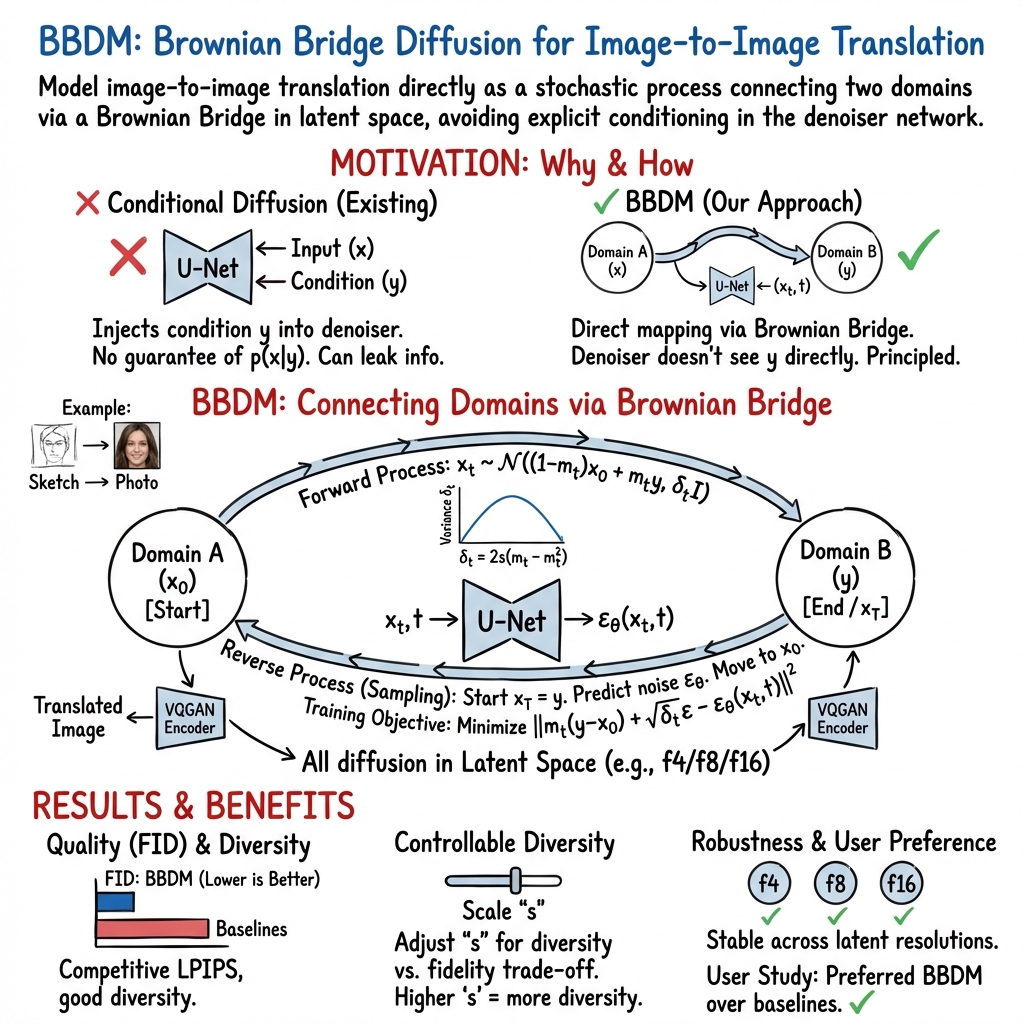

The paper presents a new method for image-to-image translation based on Brownian Bridge Diffusion Models (BBDM). Unlike conventional diffusion-based models, it uses a stochastic Brownian bridge process to form a bidirectional mapping between image domains, which avoids the pitfalls associated with conditional generation.

Contribution and Methodology

The authors propose an image-to-image translation framework that, unlike existing diffusion models, applies a Brownian bridge process to the translation task. Conventional diffusion models treat the problem as conditional generation, which leads to significant challenges caused by the gap between domains. The primary contribution of BBDM is a direct translation mechanism that needs no auxiliary conditional generation and uses a Brownian bridge as its backbone. The authors claim that this is the first use of Brownian bridge processes in this context.
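For reference, the textbook Brownian bridge pinned at $x_0$ at time $0$ and at $x_T$ at time $T$ has a Gaussian marginal whose mean interpolates linearly between the two endpoints and whose variance vanishes at both ends. This is the standard continuous-time definition, given here only to illustrate what "conditioned on both endpoints" means; the paper's discretization and variance schedule may differ.

$$
x_t \mid x_0, x_T \;\sim\; \mathcal{N}\!\left(\left(1 - \tfrac{t}{T}\right) x_0 + \tfrac{t}{T}\, x_T,\;\; \tfrac{t\,(T - t)}{T}\, I\right), \qquad 0 \le t \le T.
$$

In the translation setting, $x_0$ would play the role of the target-domain image (or latent) and $x_T$ the source-domain one, so the process starts and ends on actual data rather than on pure noise.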

Key contributions include:

- Introduction of a Brownian Bridge diffusion process to model the translation between image domains, moving away from traditional conditional generation models.

- Presentation of a methodology that learns translations directly through a bidirectional diffusion process, reducing reliance on conditional information.

- Demonstration of the model's efficacy across various image-to-image translation tasks, supported by quantitative and qualitative performance metrics.

The Brownian bridge process operates in the VQGAN latent space, where it defines a stochastic transformation conditioned on both the initial and final states. The paper details the mathematical foundation of this approach, contrasts it with existing models such as LDM, and describes an accelerated sampling process that preserves image quality while reducing inference time.
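The idea of a process conditioned on both endpoints can be sketched in a few lines. The following is a minimal illustration, not the authors' implementation: it assumes a linear schedule `m_t = t / T` and a variance `delta_t = 2 * s * (m_t - m_t**2)`, and the names `bridge_schedule`, `sample_bridge`, and the scale `s` are hypothetical. Latents are represented as flat lists of floats for simplicity.

```python
import math
import random


def bridge_schedule(t, T, s=1.0):
    """Return (m_t, delta_t) for step t of a T-step bridge.

    Assumed schedule (not taken from this summary): m_t = t / T and
    delta_t = 2 * s * (m_t - m_t**2), so the variance is zero at both
    endpoints and largest at t = T / 2.
    """
    m_t = t / T
    delta_t = 2.0 * s * (m_t - m_t ** 2)
    return m_t, delta_t


def sample_bridge(x0, y, t, T, s=1.0, rng=random):
    """Draw x_t ~ N((1 - m_t) * x0 + m_t * y, delta_t * I), elementwise.

    x0 is the target-domain latent, y the source-domain latent.
    """
    m_t, delta_t = bridge_schedule(t, T, s)
    std = math.sqrt(delta_t)
    return [
        (1.0 - m_t) * a + m_t * b + std * rng.gauss(0.0, 1.0)
        for a, b in zip(x0, y)
    ]
```

Under these assumptions, `sample_bridge(x0, y, 0, T)` returns `x0` exactly and `sample_bridge(x0, y, T, T)` returns `y` exactly, because the noise term vanishes at both ends. A standard diffusion forward process, by contrast, ends in pure Gaussian noise.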

Results and Implications

The experiments show the efficacy of BBDM across multiple tasks, such as semantic image synthesis and style translation, with improvements in FID and LPIPS over existing models such as Pix2Pix, CycleGAN, and LDM. The authors also report consistently high stability and diversity in the generated outputs, which they attribute to the stochastic nature of the Brownian bridge.
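To make the FID metric more concrete: it is the Fréchet distance between two Gaussians fitted to feature vectors of real and generated images. The sketch below is a deliberately simplified version that assumes diagonal covariances, in which case the distance reduces to a sum of squared differences of means and standard deviations. Real FID uses Inception features and full covariance matrices, so this is not a drop-in replacement, and the function names are hypothetical.

```python
import math


def mean_and_std(samples):
    """Per-dimension mean and population standard deviation."""
    n = len(samples)
    dims = range(len(samples[0]))
    mean = [sum(row[d] for row in samples) / n for d in dims]
    std = [
        math.sqrt(sum((row[d] - mean[d]) ** 2 for row in samples) / n)
        for d in dims
    ]
    return mean, std


def frechet_distance_diag(feats_a, feats_b):
    """Squared Frechet distance between two diagonal Gaussians.

    With diagonal covariances the general formula
    ||mu_a - mu_b||^2 + Tr(S_a + S_b - 2 (S_a S_b)^(1/2))
    simplifies to sum((mu_a - mu_b)^2) + sum((sd_a - sd_b)^2).
    """
    mu_a, sd_a = mean_and_std(feats_a)
    mu_b, sd_b = mean_and_std(feats_b)
    mean_term = sum((a - b) ** 2 for a, b in zip(mu_a, mu_b))
    cov_term = sum((a - b) ** 2 for a, b in zip(sd_a, sd_b))
    return mean_term + cov_term
```

Lower values mean the two feature distributions are closer; two identical feature sets give a distance of zero.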

Comparative analyses indicate that BBDM outperforms several state-of-the-art image translation models in both quality and computational efficiency. Because it depends less on manipulating conditional information, BBDM appears particularly robust to domain variation, as shown by evaluations on diverse datasets such as CelebAMask-HQ, edges2shoes, and edges2handbags.

Speculation on Future Developments

The implications of this research are twofold. First, it offers a compelling alternative to the current paradigms in image-to-image translation. Second, it opens pathways for further research into stochastic processes such as Brownian bridges in generative tasks. The approach could plausibly be extended beyond image synthesis to multi-modal tasks such as text-to-image translation, subject to further optimization and exploration of the parameter space, particularly the parameters that control sampling diversity.

Furthermore, the improved generalization capabilities suggested by the cross-domain performance of BBDM warrant examination in diverse real-world applications, potentially enabling more nuanced and controllable image translation mechanisms without extensive retraining.

In conclusion, the Brownian Bridge Diffusion Model represents a significant advance in translating images across domains: it reduces reliance on conditional generation and provides a more flexible and effective framework for high-quality image translation. The robustness and performance benefits demonstrated by BBDM indicate promising avenues for theoretical exploration and practical deployment in AI-driven image synthesis and beyond.