Insights into LLMs Learning from Explanations in Context

The paper "Can language models learn from explanations in context?" investigates whether explanations improve the few-shot learning capabilities of large language models (LMs). Now that large LMs can learn tasks in context, there is significant interest in understanding and improving how they adapt to new tasks. This research examines whether augmenting few-shot prompts with explanations of the example answers improves performance on a diverse set of challenging language tasks.

Methodology and Experiments

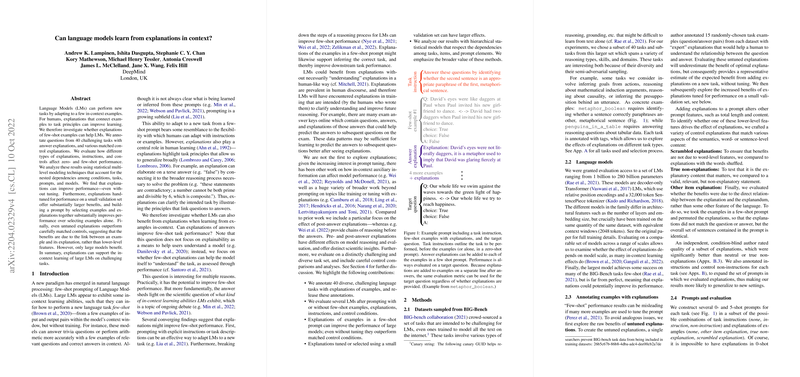

The authors evaluate the efficacy of explanations by annotating 40 tasks from the BIG-Bench benchmark with explanations for example answers. These tasks span a variety of skills and are chosen to be substantially challenging: the paper focuses on tasks where the largest model in a suite of LMs, all trained on the same data, performs sub-optimally in a standard few-shot setup. Performance is evaluated on models ranging from 1 billion to 280 billion parameters to determine whether model capacity influences the ability to benefit from explanations.

In constructing prompts, the authors compare true explanations against control explanations to isolate the effect of explanatory content. Controls include scrambled explanations and content-matched non-explanations, so that any performance gain can be attributed to the explanatory link between an example and its answer rather than to confounds such as word count or semantic density. The paper also compares the impact of task instructions with that of explanations.
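The prompt conditions described above can be illustrated with a minimal sketch. The `Q:`/`A:`/`Explanation:` layout, the `Example` class, and the function names are assumptions made for illustration and are not the paper's actual templates; only the no-explanation, explanation, and scrambled conditions are shown.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Example:
    # Hypothetical container for one annotated few-shot example.
    question: str
    answer: str
    explanation: str


def scramble_words(text: str, rng: random.Random) -> str:
    """Control: keep the same words but shuffle their order."""
    words = text.split()
    rng.shuffle(words)
    return " ".join(words)


def build_prompt(
    examples: List[Example],
    query: str,
    condition: str = "explanation",
    instruction: Optional[str] = None,
    seed: int = 0,
) -> str:
    """Assemble a few-shot prompt under one condition.

    condition is "none" (plain few-shot), "explanation" (true explanation
    after each answer), or "scrambled" (word-shuffled explanation).
    """
    rng = random.Random(seed)
    parts = [instruction] if instruction else []
    for ex in examples:
        block = f"Q: {ex.question}\nA: {ex.answer}"
        if condition == "explanation":
            block += f"\nExplanation: {ex.explanation}"
        elif condition == "scrambled":
            block += f"\nExplanation: {scramble_words(ex.explanation, rng)}"
        elif condition != "none":
            raise ValueError(f"unknown condition: {condition}")
        parts.append(block)
    # The query is left open for the model to complete.
    parts.append(f"Q: {query}\nA:")
    return "\n\n".join(parts)
```

Because the scrambled prompt contains exactly the same words as the explanation prompt, a performance gap between the two cannot be explained by added length or vocabulary alone, which is the purpose of the control.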

Key Findings

- Explanations Enhance Large Models: The paper finds that explanations meaningfully improve the largest model's few-shot performance. Smaller models show no such benefit, suggesting that the ability to use explanations effectively emerges only at large scale, consistent with other abilities that appear in LMs only as model size grows.

- Distinct Role of Explanations: Explanations are more beneficial than task instructions alone. This indicates that describing the task through instructions is less effective than linking examples to task principles via explanations. Furthermore, explanations generally outperform the carefully constructed control conditions, confirming that their value lies in explaining the example rather than in superficial characteristics such as added text or semantic variety.

- Tuning Amplifies Explanation Benefits: Selecting explanations based on performance on a small validation set significantly boosts model performance. The practical benefit of explanations therefore depends on the quality and relevance of the explanatory content, and a small amount of tuning can improve it considerably.
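The tuning step in the last finding can be sketched as a simple selection loop: score each candidate few-shot prompt on a small validation set and keep the best. This is a hypothetical illustration, not the paper's procedure. `model_fn` stands in for any function that maps a prompt string to the model's answer string, and the exact-match scoring and `Q:`/`A:` format are assumptions.

```python
from typing import Callable, Sequence, Tuple


def validation_accuracy(
    prompt_prefix: str,
    validation: Sequence[Tuple[str, str]],
    model_fn: Callable[[str], str],
) -> float:
    """Exact-match accuracy of one few-shot prefix on (question, answer) pairs."""
    if not validation:
        raise ValueError("validation set must not be empty")
    correct = 0
    for question, answer in validation:
        prediction = model_fn(f"{prompt_prefix}Q: {question}\nA:")
        correct += prediction.strip() == answer
    return correct / len(validation)


def select_best_prompt(
    candidates: Sequence[str],
    validation: Sequence[Tuple[str, str]],
    model_fn: Callable[[str], str],
) -> Tuple[float, str]:
    """Return (accuracy, prefix) for the best candidate; ties keep the earliest."""
    scored = [(validation_accuracy(c, validation, model_fn), c) for c in candidates]
    return max(scored, key=lambda pair: pair[0])
```

Each candidate prefix would contain the same few-shot examples paired with a different set of explanations. Since the selected prompt is fitted to the validation items, its performance should be reported on separate held-out items.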

Theoretical and Practical Implications

At the theoretical level, this research highlights the sophisticated in-context learning capabilities of large LMs. The ability to benefit from explanations suggests that these models can engage in some degree of higher-order reasoning and task abstraction. These insights contribute to ongoing discussions in the field about the mechanisms underlying few-shot learning.

Practically, the findings suggest that adding explanations to prompts is a promising way to improve LMs' adaptability to new tasks. This could be particularly relevant for applications where task-specific retraining is impractical or where real-time adaptability is crucial.

Future Directions

The research opens several avenues for future exploration. One is interactive explanation frameworks, in which models iteratively refine their understanding of a task through dialogue-like interactions. Another is combining explanations with other task decomposition strategies, such as pre-answer reasoning, in more complex reasoning settings, which could further clarify their distinct and combined benefits.

In conclusion, this paper establishes explanations as a potent mechanism for enhancing the few-shot learning performance of LLMs, providing both theoretical insights and practical implications for the future development and application of these AI systems.