Competition-Level Code Generation with AlphaCode

The paper "Competition-Level Code Generation with AlphaCode" introduces AlphaCode, a system that solves competitive programming problems through automatic code generation. The authors built AlphaCode for tasks that demand substantial algorithmic reasoning and robust natural language understanding, both of which competitive programming problems require.

Overview and Key Contributions

AlphaCode consists of several key components:

- Extensive and Clean Dataset: The authors compiled a dataset from competitive programming platforms and split it strictly by time, so that evaluation problems appear only after the training data was collected. This prevents leakage between the training and evaluation sets. The dataset includes both correct and incorrect submissions, giving the model a wider variety of examples to learn from.

- Transformer-Based Architecture: The models are large, efficient transformers, pre-trained on a vast corpus of code from GitHub and then fine-tuned on the curated competitive programming dataset. The architecture is tailored to handle both the complexity and the length of competitive programming problems and their solutions.

- Large-Scale Sampling and Filtering: AlphaCode generates up to millions of samples per problem, then combines example-based filtering with behavior clustering to narrow them down to a small set of candidate solutions for final evaluation.
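The filtering and clustering step can be illustrated with a minimal sketch. It is not the authors' implementation and rests on several assumptions: candidate programs are modeled as plain Python callables from an input string to an output string, the probe inputs used for clustering are supplied by hand, and the names `passes_examples`, `behavior_signature`, and `select_candidates` are hypothetical. Samples that fail the example tests from the problem statement are discarded, survivors are grouped by the outputs they produce on the probe inputs, and one program is taken from each group, largest group first.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Sequence, Tuple

# Assumption: a candidate "program" is a callable from input text to output text.
Program = Callable[[str], str]


def passes_examples(program: Program, examples: Sequence[Tuple[str, str]]) -> bool:
    """Return True if the program reproduces every example output."""
    for given_input, expected in examples:
        try:
            if program(given_input).strip() != expected.strip():
                return False
        except Exception:
            return False  # a crash counts as a failed example
    return True


def behavior_signature(program: Program, probe_inputs: Sequence[str]) -> Tuple[str, ...]:
    """Outputs on the probe inputs; programs with equal signatures share a cluster."""
    outputs = []
    for probe in probe_inputs:
        try:
            outputs.append(program(probe).strip())
        except Exception:
            outputs.append("<error>")
    return tuple(outputs)


def select_candidates(
    samples: Sequence[Program],
    examples: Sequence[Tuple[str, str]],
    probe_inputs: Sequence[str],
    max_submissions: int = 10,
) -> List[Program]:
    """Filter on the examples, cluster by behavior, pick one program per cluster."""
    survivors = [p for p in samples if passes_examples(p, examples)]
    clusters: Dict[Tuple[str, ...], List[Program]] = defaultdict(list)
    for program in survivors:
        clusters[behavior_signature(program, probe_inputs)].append(program)
    # Assumption: larger clusters are tried first.
    ordered = sorted(clusters.values(), key=len, reverse=True)
    return [cluster[0] for cluster in ordered[:max_submissions]]


# Toy candidates for a hypothetical "double the number" problem.
def double(s: str) -> str: return str(int(s) * 2)
def double_alt(s: str) -> str: return str(int(s) + int(s))
def square(s: str) -> str: return str(int(s) ** 2)
def plus_two(s: str) -> str: return str(int(s) + 2)
def triple(s: str) -> str: return str(int(s) * 3)

samples = [double, double_alt, square, plus_two, triple]
examples = [("2", "4")]    # the single example test from the statement
probe_inputs = ["3", "5"]  # extra inputs used only to compare behavior
chosen = select_candidates(samples, examples, probe_inputs, max_submissions=2)
```

In this toy run, `triple` fails the example test, and `double` and `double_alt` land in the same cluster because they agree on every probe input. The two submissions therefore go to programs that behave differently rather than to two equivalent ones.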

Strong Numerical Results

The performance of AlphaCode was evaluated both on synthetic benchmarks and in real competitive programming contests:

- Synthetic Benchmarks: On the CodeContests dataset, whose evaluation problems are unseen and split temporally from the training data, AlphaCode solved 34.2% of validation problems with up to one million samples per problem. On the test set, it solved 29.6% of problems with 100,000 samples per problem.

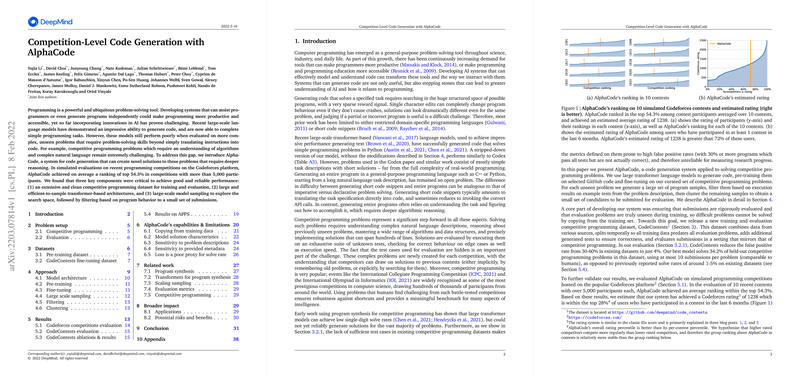

- Competitive Programming Contests: Evaluated on 10 recent Codeforces contests, AlphaCode achieved an average ranking within the top 54.3% of participants. Its estimated rating placed it ahead of 72% of users who had competed at least once in the preceding six months. Notably, it achieved these results while limited to 10 submissions per problem.
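The solve rates above count a problem as solved when at least one of a limited number of submissions is accepted. A minimal sketch of that arithmetic follows, assuming the verdicts for each problem are already available as an ordered list of booleans. The function name `solve_rate` and the toy data are hypothetical and are not results from the paper.

```python
from typing import Dict, List


def solve_rate(verdicts_by_problem: Dict[str, List[bool]], max_submissions: int = 10) -> float:
    """Fraction of problems with an accepted program among the first max_submissions."""
    if not verdicts_by_problem:
        return 0.0
    solved = sum(
        1
        for verdicts in verdicts_by_problem.values()
        if any(verdicts[:max_submissions])  # submissions past the limit do not count
    )
    return solved / len(verdicts_by_problem)


# Toy verdicts (True = accepted), in submission order.
toy_verdicts = {
    "problem_a": [False, True, False],
    "problem_b": [False, False],
    "problem_c": [False] * 10 + [True],  # first accepted program is the 11th
    "problem_d": [True],
}
rate = solve_rate(toy_verdicts)  # 2 of 4 problems solved within 10 submissions
```

Here `problem_c` does not count as solved because its only accepted program falls outside the 10-submission limit, which is why the submission cap matters when comparing reported solve rates.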

Implications and Future Directions

Practical Implications

- Productivity Tools: AlphaCode demonstrates the potential for productivity tools that automate both routine and complex coding tasks in software development. Tools that understand problem descriptions and generate sophisticated algorithms could help developers write correct, optimized code.

- Educational Aids: Such models can serve as educational tools, helping novice programmers learn through examples generated for various problem statements, thus bridging the gap between learning materials and practical problem-solving.

- Competitive Programming and Interviews: The system can act as a preparation tool for competitive programming and technical interviews, offering exposure to a wide range of problem types and difficulty levels.

Theoretical Implications

- Advances in Program Synthesis: The success of AlphaCode underscores the advancements in program synthesis using large-scale transformer models, highlighting the feasibility of generating non-trivial programs that require deeper reasoning than simple code completions.

- Evaluation Metrics: The authors emphasized the importance of robust evaluation metrics, including reducing the false positive rate in synthetic benchmarks and adhering to rigorous evaluation protocols that mirror the testing conditions of actual programming contests.
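The false positive rate mentioned above can be made concrete with a small sketch. The definition used here is an assumption chosen for illustration: among programs that pass the available tests, the share that is nevertheless incorrect. The paper's exact measurement procedure may differ, and the name `false_positive_rate` is hypothetical.

```python
from typing import List, Tuple


def false_positive_rate(outcomes: List[Tuple[bool, bool]]) -> float:
    """Share of test-passing programs that are actually incorrect.

    Each outcome is (passes_available_tests, is_actually_correct).
    """
    passing = [is_correct for passed, is_correct in outcomes if passed]
    if not passing:
        return 0.0
    wrong_but_passing = sum(1 for is_correct in passing if not is_correct)
    return wrong_but_passing / len(passing)


# Toy outcomes: four programs pass the tests, two of them are actually wrong.
toy_outcomes = [
    (True, True),
    (True, False),
    (True, True),
    (False, False),
    (True, False),
]
fp_rate = false_positive_rate(toy_outcomes)
```

A weak test suite inflates the measured solve rate in exactly this way, so adding tests that reject the wrong-but-passing programs lowers the false positive rate and makes benchmark results more trustworthy.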

Speculating on Future Developments

The research marks significant progress toward more general AI for coding tasks. Future developments may include:

- Enhanced Sampling Techniques: Improving the efficiency and diversity of large-scale sampling to further increase the solve rates while reducing computational overhead.

- Cross-Domain Adaptability: Extending the system to handle multi-domain tasks, enabling a broader range of applications beyond competitive programming.

- Interactivity and Real-Time Feedback: Integrating real-time feedback mechanisms whereby the model can adaptively refine its solutions based on immediate evaluations, mimicking a more interactive problem-solving process.

- Integrating Formal Verification: Combining code generation with formal verification methods to ensure that the generated solutions are not only syntactically correct but also meet formal correctness criteria, enhancing reliability and robustness.

In conclusion, AlphaCode represents a notable advancement in AI-driven code generation, demonstrating the potential to automate complex programming tasks. By combining extensive datasets, sophisticated models, and innovative sampling techniques, AlphaCode sets a benchmark for future research in AI and program synthesis. The system's implications span practical applications, educational tools, and theoretical insights, paving the way for continued progress in AI-assisted programming.