Improved Universal Sentence Embeddings with Prompt-based Contrastive Learning and Energy-based Learning

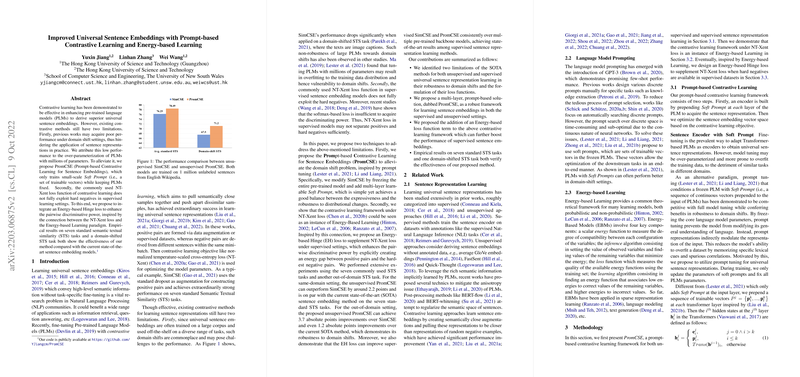

The paper "Improved Universal Sentence Embeddings with Prompt-based Contrastive Learning and Energy-based Learning" improves universal sentence embeddings by combining Prompt-based Contrastive Learning (PromCSE) with Energy-based Learning. It targets two limitations of existing contrastive learning methods built on pre-trained language models (PLMs): vulnerability to domain shift and inefficient use of hard negatives.

Robust universal sentence embeddings, which encode high-level semantic information reusable across tasks such as information retrieval and question answering, are a long-standing goal in NLP. Contrastive learning has been successful here, but two problems persist: performance degrades under domain shift, and in supervised training the standard NT-Xent loss makes suboptimal use of hard negatives.

PromCSE: Prompt-based Contrastive Learning Framework

PromCSE addresses domain shift with prompt tuning. It prepends a Soft Prompt, a sequence of trainable vectors, at every layer of the PLM's Transformer. The PLM's own parameters are frozen and only the soft prompts are trained. Because only this small set of prompt parameters changes, the model can adapt its representations without overwriting the general-purpose knowledge of the pre-trained encoder, which balances expressiveness against robustness. Training uses the NT-Xent loss, the standard contrastive objective, which pulls the representations of a positive pair together while pushing them away from the other (negative) sentences in the same batch.
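To make the NT-Xent objective concrete, here is a minimal pure-Python sketch of its in-batch form as popularized by SimCSE: for anchor i, `positives[i]` is the positive and every other `positives[j]` acts as a negative (in unsupervised SimCSE the positive is the same sentence encoded twice with different dropout masks). This is an illustration under assumptions, not PromCSE's code: the soft prompts and the PLM encoder are omitted (embeddings are given as plain lists), the function names are hypothetical, and the temperature default of 0.05 is SimCSE's common choice, which may differ from PromCSE's settings.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def nt_xent(anchors: List[Vector], positives: List[Vector], tau: float = 0.05) -> float:
    """Mean NT-Xent loss with in-batch negatives (hypothetical helper).

    anchors[i] and positives[i] form the positive pair; every positives[j]
    with j != i serves as a negative for anchors[i].
    Assumption: tau=0.05 follows SimCSE's common default.
    """
    if not anchors or len(anchors) != len(positives):
        raise ValueError("anchors and positives must be equal-sized and non-empty")
    total = 0.0
    for i, h in enumerate(anchors):
        # Temperature-scaled similarities to every candidate in the batch.
        logits = [cosine(h, p) / tau for p in positives]
        # Log-sum-exp with max subtraction for numerical stability.
        m = max(logits)
        log_denom = m + math.log(sum(math.exp(z - m) for z in logits))
        # -log softmax probability of the true positive.
        total += log_denom - logits[i]
    return total / len(anchors)
```

With well-aligned pairs the loss approaches zero; if the positives are swapped so that each anchor is most similar to a negative, the loss becomes large.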

On seven standard semantic textual similarity (STS) tasks, PromCSE outperforms prior state-of-the-art models such as SimCSE and DiffCSE. In the unsupervised setting, PromCSE-BERT scores roughly 2.2 points higher on average than unsupervised SimCSE-BERT, showing that the gains hold without any labeled training data.
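The STS scores quoted above are correlation-based. A common protocol (used by SimCSE, and assumed here for PromCSE) computes the cosine similarity of each sentence pair's embeddings and reports Spearman's rank correlation with the human gold scores, multiplied by 100. The sketch below implements only that scoring step in plain Python; the function names are hypothetical, and real evaluations usually run through a toolkit such as SentEval rather than code like this.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with this one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def sts_score(pairs: List[Tuple[Vector, Vector]], gold: Sequence[float]) -> float:
    """Spearman correlation (x100) between predicted cosine scores and gold labels."""
    predicted = [cosine(u, v) for u, v in pairs]
    # Spearman = Pearson correlation computed on the ranks.
    return 100 * pearson(average_ranks(predicted), average_ranks(gold))
```

Because Spearman's correlation depends only on rank order, any monotonic rescaling of the similarity scores leaves the STS score unchanged.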

Energy-based Learning and Energy-based Hinge Loss

The paper also connects contrastive learning to Energy-based Learning, showing that the NT-Xent loss can be interpreted as an instance of an Energy-based Learning loss function. Building on this view, it introduces an Energy-based Hinge (EH) loss that strengthens pair-wise discrimination by requiring each positive pair to have lower energy than the hardest negative by a margin. Supervised models trained with the EH loss achieve state-of-the-art results among supervised sentence representation learning methods.

Numerical Results and Comparative Performance

The experiments support both contributions. On the domain-shifted CxC-STS tasks, unsupervised PromCSE outperforms unsupervised SimCSE-BERT by 3.7 points, evidence of its robustness to domain shift. In supervised settings, adding the EH loss improves performance across multiple PLMs, indicating that it is useful whenever hard negatives are available. With strong results on both standard and domain-shifted STS benchmarks, PromCSE advances the state of the art in universal sentence representation.

Implications and Future Directions

This research advances both the understanding and the methodology of universal sentence embeddings. In practice, it shows that soft prompting improves the robustness of sentence representation models across domains, and that energy-based objectives such as the EH loss improve their discriminative power.

Future work could explore automatic methods for identifying or generating hard negatives, which would extend the benefits of hard-negative training to unsupervised settings, where labeled hard negatives are unavailable. Integrating these techniques into broader neural architectures and applying them to tasks beyond STS could further widen their applicability, benefiting NLP tasks that require nuanced semantic understanding and adaptation to diverse contexts.