An Analysis of DALL-Eval: Evaluation of Reasoning Skills and Social Biases in Text-to-Image Models

The paper "DALL-Eval: Probing the Reasoning Skills and Social Biases of Text-to-Image Generation Models" offers a detailed examination of advanced text-to-image generation models such as DALL-E variants and diffusion models, focusing on their reasoning capabilities and inherent biases. By evaluating these multimodal models, the authors aim to fill the gap in comprehensive assessments of text-to-image models beyond traditional metrics like image-text alignment and image quality.

Key Contributions and Methodology

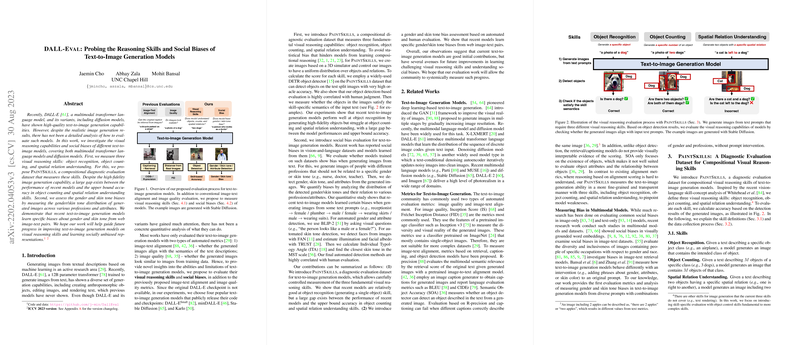

The paper introduces a benchmark framework called PaintSkills to systematically assess three fundamental visual reasoning skills:

- Object Recognition: Evaluates the model's ability to identify specific object classes within generated images.

- Object Counting: Quantifies the model's accuracy in generating the correct number of specified objects.

- Spatial Relation Understanding: Measures how well the model can depict predefined spatial relations between objects in images.

The evaluation dataset PaintSkills is constructed using a 3D simulator which ensures controlled distribution of objects, numbers, and spatial relations to avoid statistical bias. This rigorous dataset allows for a thorough examination of model proficiency in these skills. In testing, minDALL-E exhibited better coverage in object counting and spatial relation understanding compared to Stable Diffusion, which performed best in object recognition.

Additionally, the paper addresses social biases present in these models, focusing on gender and skin tone biases. The authors use diagnostic prompts to evaluate the generated images and apply automated detection tools to measure deviations from a uniform distribution of gender and skin tones. The detection methodologies are rigorously tested against human evaluation to ensure alignment with human judgment.

Numerical and Qualitative Evaluation

Numerical results indicate a clear performance discrepancy between models on the PaintSkills tasks. Human evaluation correlated well with the automated Detector evaluation, legitimizing the use of the proposed metrics. Strong results were observed in object recognition, notably by Stable Diffusion, while object counting and spatial relation understanding remain challenging areas for improvement. The gap between model performance and upper-bound accuracy highlights the need for enhanced reasoning capabilities.

On social bias assessment, minDALL-E appeared to possess the least skewed gender distribution among the tested models, while biases regarding skin tones were similarly concentrated around mid-tones, indicating a lack of diversity in the model outputs. The attribute analysis further reinforced these findings, illustrating tendencies such as associating specific clothing styles with particular genders.

Implications and Future Directions

The research presented in this paper has significant implications for enhancing the development and evaluation of text-to-image models. By introducing a comprehensive framework for reasoning evaluation and a methodology for bias assessment, the authors provide valuable insights for directing future research efforts.

Practically, the findings underline the necessity for the AI research community to devise strategies that bolster models’ reasoning skills and mitigate biases, especially as digital image generation becomes more pervasive in applications with social impact. Theoretically, these results provide a foundation for advancing multimodal learning frameworks, potentially integrating more complex reasoning tasks.

The development of more robust models capable of accurate reasoning without social biases will be essential for their responsible deployment. This work emphasizes the need to continue exploring the challenges identified, ensuring that future models can handle a broader spectrum of visual reasoning tasks while maintaining fairness across diverse social demographics.