Overview of "Are we really making much progress? Revisiting, benchmarking, and refining heterogeneous graph neural networks"

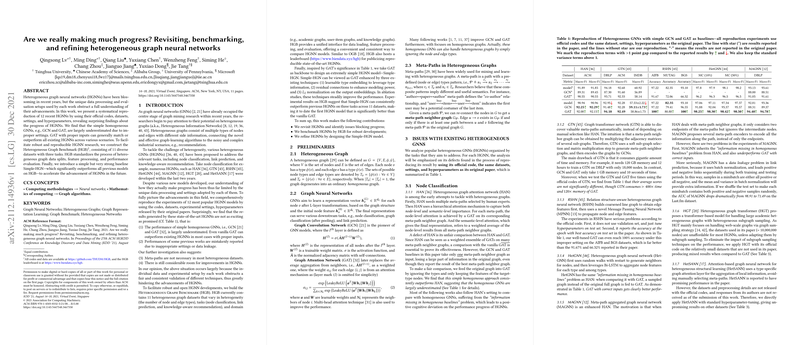

The paper "Are we really making much progress? Revisiting, benchmarking, and refining heterogeneous graph neural networks" presents a methodical evaluation and benchmarking of Heterogeneous Graph Neural Networks (HGNNs). It asks whether the progress reported for HGNNs holds up under scrutiny. To answer this, the authors systematically reproduce the experiments of 12 recent HGNN models using the official code, datasets, settings, and hyperparameters. The results are surprising: homogeneous Graph Neural Networks (GNNs), specifically GCN and GAT, turn out to have been largely underestimated. Building on these findings, the authors propose the Heterogeneous Graph Benchmark (HGB) to foster reproducibility in HGNN research and introduce a robust baseline model, Simple-HGN, which they report significantly outperforms existing models.

Key Insights from the Study

After carefully reproducing and scrutinizing existing models, the paper reports the following:

- Underestimation of Homogeneous GNNs: Simple homogeneous GNNs such as GCN and GAT, when used under proper settings, can match or even outperform current HGNNs across various benchmarks. This finding challenges the established view that heterogeneity inherently benefits performance.

- Data Leakage and Improper Settings: Some HGNNs report misleading results because of improper experimental settings, such as tuning on the test set, or because of data leakage.

- Meta-path Necessity Questioned: The research questions whether meta-paths are necessary for most heterogeneous datasets, considering the comparable outcomes from homogeneous GNNs.

- Considerable Improvement Room: The paper suggests ample opportunity for performance enhancements in HGNNs.
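To make the first point concrete, the following is a minimal sketch of one way a heterogeneous graph could be handed to a homogeneous GNN such as GCN or GAT: node and edge types are discarded and every node is mapped into a single global index space. The function name `to_homogeneous`, the dictionary layout, and the toy author/paper graph are illustrative assumptions, not the preprocessing used in the paper.

```python
def to_homogeneous(num_nodes_by_type, typed_edges):
    """Flatten a typed graph into one global node index space.

    num_nodes_by_type: dict mapping node type -> node count.
    typed_edges: dict mapping (src_type, relation, dst_type) to a list of
        (src_local_id, dst_local_id) pairs.
    Returns (offsets, edges, total), where offsets maps each node type to its
    first global id and edges is a list of (src_global, dst_global) pairs
    with all type information dropped.
    """
    offsets = {}
    total = 0
    for node_type, count in num_nodes_by_type.items():
        offsets[node_type] = total
        total += count

    edges = []
    for (src_type, _relation, dst_type), pairs in typed_edges.items():
        for src_local, dst_local in pairs:
            edges.append((offsets[src_type] + src_local,
                          offsets[dst_type] + dst_local))
    return offsets, edges, total


# Hypothetical toy graph: 2 authors, 3 papers, two relation types.
offsets, edges, total = to_homogeneous(
    {"author": 2, "paper": 3},
    {
        ("author", "writes", "paper"): [(0, 0), (1, 2)],
        ("paper", "cites", "paper"): [(0, 1)],
    },
)
print(edges)  # [(0, 2), (1, 4), (2, 3)]
```

The resulting edge list can be fed to any homogeneous GNN implementation. Node features of different types usually have different dimensions, so in practice they would first need to be projected into a shared space; that step is omitted here.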

Introduction of Heterogeneous Graph Benchmark (HGB)

To standardize heterogeneous graph research and facilitate consistent evaluation, the authors present HGB. The benchmark comprises 11 datasets from varied domains, covering three tasks (node classification, link prediction, and knowledge-aware recommendation), with standardized data splits, feature processing, and evaluation procedures. Following the example of the Open Graph Benchmark (OGB), it also hosts a public leaderboard for state-of-the-art HGNNs.

Proposed Baseline: Simple-HGN

As its final contribution, the paper introduces Simple-HGN, a simple yet effective baseline that the authors report significantly surpasses previous HGNNs on HGB. The architecture uses GAT as its backbone and adds three components: learnable edge-type embeddings, residual connections, and L2 normalization of the output embeddings.
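The three components can be illustrated with a small, dependency-free sketch. It is a simplification under stated assumptions, not the authors' implementation: the learned projection matrices, multi-head attention, and nonlinearities of a full GAT layer are left out, the attention vector is split into `a_src`, `a_dst`, and `a_edge` instead of being applied to a concatenation, and all function and parameter names are hypothetical.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def leaky_relu(x, slope=0.2):
    return x if x > 0 else slope * x


def type_aware_attention(h_i, neighbors, edge_types, type_emb,
                         a_src, a_dst, a_edge):
    """Softmax attention weights of node i over its neighbors.

    Each logit is the usual GAT source/target score plus a term that depends
    on a learnable embedding of the edge type (assumed form).
    """
    logits = [
        leaky_relu(dot(a_src, h_i) + dot(a_dst, h_j) + dot(a_edge, type_emb[t]))
        for h_j, t in zip(neighbors, edge_types)
    ]
    shift = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def layer_output(h_i, neighbors, weights):
    """Attention-weighted neighbor sum plus a residual connection to h_i."""
    aggregated = [
        sum(w * h_j[k] for w, h_j in zip(weights, neighbors))
        for k in range(len(h_i))
    ]
    return [a + x for a, x in zip(aggregated, h_i)]


def l2_normalize(vec, eps=1e-12):
    """Scale a vector to unit L2 norm (applied to the output embedding)."""
    norm = math.sqrt(dot(vec, vec))
    return [x / max(norm, eps) for x in vec]


# Two neighbors with identical features but different edge types.
h_i = [1.0, 0.0]
neighbors = [[0.0, 1.0], [0.0, 1.0]]
weights = type_aware_attention(
    h_i, neighbors, edge_types=[0, 1], type_emb=[[1.0], [-1.0]],
    a_src=[0.5, 0.5], a_dst=[0.5, 0.5], a_edge=[1.0],
)
embedding = l2_normalize(layer_output(h_i, neighbors, weights))
```

In this toy case the two neighbors carry the same features, so a plain GAT score would weight them equally; the edge-type term is what lets the attention tell them apart.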

Speculation and Future Considerations

This paper triggers important discussions about the current paradigms in applying graph neural networks to heterogeneous graphs and encourages the community to reevaluate unnecessary complexities in model architectures. It posits that effective leveraging of type information in GAT and straightforward enhancements could eliminate the need for advanced or more complex models that rely on meta-paths.

Implications and Future Work in AI

Practically, the findings point to potential cost savings and efficiency gains from revisiting and simplifying models for heterogeneous network tasks. Theoretically, the paper paves the way for further exploration of simpler and more interpretable GNN architectures that could compete with or even outperform complex alternatives. Future work could explore alternative methods for ensembling homogeneous GNNs or develop new type-aware attention mechanisms that better balance complexity and performance. The paper also argues for a benchmarking culture that drives reproducibility, so that true progress can be validated against standardized datasets and evaluation methods.