An Expert Overview of SLIP: Self-supervision Meets Language-Image Pre-training

In "SLIP: Self-supervision Meets Language-Image Pre-training," the authors combine self-supervised learning with the language supervision used in Contrastive Language-Image Pre-training (CLIP). The paper asks whether adding a self-supervised objective improves the visual representations learned from language supervision. To answer this, the authors introduce SLIP, a multi-task learning framework that trains a single image encoder with both a self-supervised objective and the CLIP objective.

Key Contributions and Methodology

At its core, SLIP extends CLIP by adding a self-supervised learning objective to its training process. The motivation is that the two forms of supervision have complementary strengths. Self-supervised learning produces strong representations without labeled data, which makes it practical to scale to large datasets. Language-image supervision, as in CLIP, aligns image embeddings with the embeddings of paired natural-language captions, and this alignment is what enables zero-shot transfer.
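The multi-task objective can be sketched as the sum of two contrastive losses. The SLIP paper adapts SimCLR for the self-supervised branch: two augmented views of each image pass through the shared image encoder, and an NT-Xent loss pulls the two views together, while the CLIP branch applies a symmetric InfoNCE loss to matched image-caption pairs. The sketch below is a minimal, dependency-free illustration under stated assumptions, not the authors' implementation: encoders, augmentations, and projection heads are omitted and embeddings are passed in directly; the fixed temperatures (0.07, which is CLIP's initial value for its learnable temperature, and 0.1 for the SimCLR branch) and the hypothetical `ssl_scale` weight defaulting to 1.0 are assumptions.

```python
import math
from typing import List

# Embeddings are plain lists of floats. In a real model they would come from
# the shared ViT image encoder, the text encoder, and projection heads.
Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0  # guard against zero vectors
    return [x / norm for x in v]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def cross_entropy(logits: List[float], target: int) -> float:
    """-log softmax(logits)[target], using the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target]


def clip_loss(img: List[Vector], txt: List[Vector], temperature: float = 0.07) -> float:
    """Symmetric InfoNCE: image k should match caption k, and caption k image k."""
    img = [l2_normalize(v) for v in img]
    txt = [l2_normalize(v) for v in txt]
    n = len(img)
    logits = [[dot(i, t) / temperature for t in txt] for i in img]
    image_to_text = sum(cross_entropy(logits[k], k) for k in range(n)) / n
    text_to_image = sum(
        cross_entropy([logits[r][k] for r in range(n)], k) for k in range(n)
    ) / n
    return (image_to_text + text_to_image) / 2


def simclr_loss(view1: List[Vector], view2: List[Vector], temperature: float = 0.1) -> float:
    """NT-Xent: each augmented view must pick out its partner among the other 2N - 1."""
    z = [l2_normalize(v) for v in view1 + view2]
    n = len(view1)
    total = 0.0
    for k in range(2 * n):
        partner = (k + n) % (2 * n)
        # Mask self-similarity with -inf so it contributes exp(-inf) = 0.
        logits = [-math.inf if j == k else dot(z[k], z[j]) / temperature
                  for j in range(2 * n)]
        total += cross_entropy(logits, partner)
    return total / (2 * n)


def slip_loss(img: List[Vector], txt: List[Vector],
              view1: List[Vector], view2: List[Vector],
              ssl_scale: float = 1.0) -> float:
    """Multi-task objective: CLIP loss plus a weighted self-supervised loss.

    Assumption: ssl_scale is a hypothetical name; 1.0 is an illustrative default.
    """
    return clip_loss(img, txt) + ssl_scale * simclr_loss(view1, view2)


images = [[1.0, 0.0], [0.0, 1.0]]
captions = [[0.9, 0.1], [0.1, 0.9]]
print(slip_loss(images, captions, images, images))
```

Setting `ssl_scale=0.0` recovers plain CLIP training, which makes the multi-task design explicit: the self-supervised loss is an additional term acting on the same image encoder, not a separate model.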

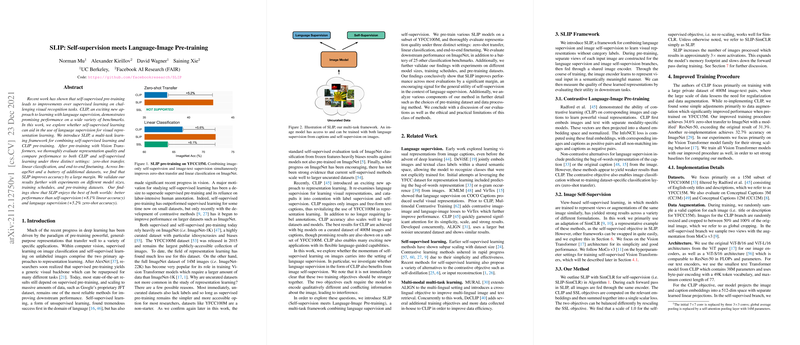

The authors use Vision Transformers (ViTs) as the image encoder and optimize the self-supervised and language-supervised objectives jointly during training. They evaluate SLIP in three settings: zero-shot transfer, linear classification, and end-to-end finetuning, and report substantial gains over both self-supervised baselines and CLIP.
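Zero-shot transfer, the first of these evaluation settings, can be illustrated with a short sketch. In CLIP-style evaluation, each class name is inserted into text prompts such as "a photo of a {label}.", the prompts are embedded by the text encoder, and an image is assigned to the class whose prompt embedding is most similar to the image embedding. Here the three-dimensional vectors are hypothetical stand-ins for encoder outputs, and averaging several prompt embeddings per class follows CLIP's prompt ensembling; the exact prompts SLIP uses are not reproduced.

```python
import math
from typing import Dict, List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0  # guard against zero vectors
    return [x / norm for x in v]


def class_prototype(prompt_embs: List[Vector]) -> Vector:
    """Average the normalized embeddings of several prompts for one class, then renormalize."""
    normed = [l2_normalize(e) for e in prompt_embs]
    dim = len(normed[0])
    mean = [sum(e[d] for e in normed) / len(normed) for d in range(dim)]
    return l2_normalize(mean)


def zero_shot_predict(image_emb: Vector, prompts_per_class: Dict[str, List[Vector]]) -> str:
    """Return the label whose prototype has the highest cosine similarity to the image."""
    img = l2_normalize(image_emb)
    scores = {
        label: sum(a * b for a, b in zip(img, class_prototype(embs)))
        for label, embs in prompts_per_class.items()
    }
    return max(scores, key=scores.get)


# Hypothetical prompt embeddings, e.g. for "a photo of a cat." and "a drawing of a cat."
prompts = {
    "cat": [[0.9, 0.1, 0.0], [1.0, 0.0, 0.1]],
    "dog": [[0.0, 1.0, 0.2], [0.1, 0.9, 0.0]],
}
print(zero_shot_predict([0.8, 0.2, 0.0], prompts))  # cat
```

No classifier is trained here: the class "weights" are produced entirely by the text side, which is why language supervision enables zero-shot transfer.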

Empirical Evaluations and Results

The empirical evaluations span ImageNet and a battery of additional benchmarks, including CIFAR. SLIP outperforms both of the approaches it combines: it improves linear accuracy by 8.1% over self-supervised learning alone and zero-shot accuracy by 5.2% over CLIP. Additional ablation studies indicate that the multi-task combination of self-supervision and language-image supervision is what drives the improved downstream performance across classification benchmarks.

Theoretical and Practical Implications

From a theoretical standpoint, SLIP's success suggests that different supervision paradigms can provide complementary signals and yield more general representations. It also points toward less reliance on curated datasets, since SLIP performs well when pre-trained on uncurated image-text data such as YFCC15M, a subset of YFCC100M. Practically, this could lead to visual models that require less manual curation and annotation, reducing costs and extending their applicability to broader datasets and domains.

Future Directions

The results suggest several avenues for future research. Although combining self-supervision with language supervision is shown to be effective, studying how SLIP scales to much larger datasets and other architectures would clarify its limits and potential. Another direction is to incorporate the SLIP approach into other multi-modal frameworks, for example models that handle audio or video alongside text and images.

Overall, SLIP is a significant advance for both self-supervised and language-supervised learning. By combining self-supervision with natural-language guidance, it points toward more efficient, scalable, and effective visual representation learning. As the field progresses, hybrid approaches that combine different learning paradigms may help produce more capable and versatile models.