Introduction

Advances in AI have led to the integration of AI systems into many sectors, where they support decision-making in fields such as healthcare, finance, and criminal justice. These systems, built on predictive models, are intended to augment human decisions. Yet even when AI systems predict well, deploying them raises important questions, particularly about how humans and AI interact during decision-making. Understanding this human-AI interplay is essential for developing AI that is both effective and trustworthy.

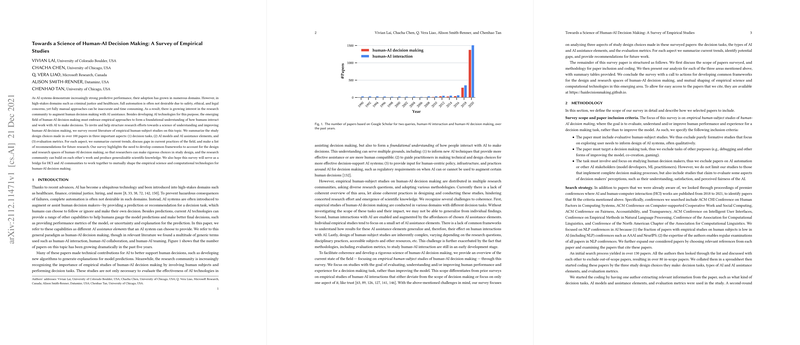

Decision Tasks in Human-AI Interaction

Empirical studies of human-AI decision-making have examined a wide array of tasks across many domains, ranging from recidivism prediction in law and medical diagnosis to financial assessments and recommendations for leisure activities. Each task has distinct characteristics, such as the level of risk involved, the expertise required, and the subjectivity of the decisions. Recognizing these characteristics is crucial for interpreting study results and understanding their limitations and generalizability.

Notably, many decision tasks come from high-stakes domains where errors can have serious consequences. However, these tasks are frequently studied using datasets and models that may not reflect real-world complexity. Moreover, most of the tasks examined fall under "AI for discovery," where AI helps uncover patterns and insights that humans may overlook, as opposed to tasks where AI simply emulates human capabilities.

AI Models and Assistance Elements

AI assistance in decision-making involves more than a bare prediction. It can also include information about the prediction, such as confidence scores, feature importance, and explanations. These elements aim to provide insight into the model's reasoning, increasing transparency and supporting human decision-makers.
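To make these assistance elements concrete, here is a minimal sketch, assuming a binary task and a linear (logistic) model whose weights are invented for illustration. It bundles a prediction, a confidence score (the probability assigned to the predicted class), and a simple feature-importance explanation (each feature's weight times its value, which is exact for a linear model). The names `Assistance`, `assist`, and `top_features`, and the loan-approval features, are hypothetical; real studies use many kinds of models and explanation methods.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Assistance:
    """Elements an interface might show alongside a prediction (illustrative)."""
    prediction: int                  # predicted class label (0 or 1)
    confidence: float                # probability assigned to the predicted class
    contributions: Dict[str, float]  # each feature's additive contribution to the logit


def assist(weights: Dict[str, float], bias: float, features: Dict[str, float]) -> Assistance:
    """Score one instance with a hypothetical linear (logistic) model."""
    # For a linear model, weight * value is each feature's share of the logit.
    contributions = {name: weights[name] * value for name, value in features.items()}
    logit = bias + sum(contributions.values())
    p_positive = 1.0 / (1.0 + math.exp(-logit))  # sigmoid maps the logit to a probability
    prediction = 1 if p_positive >= 0.5 else 0
    confidence = p_positive if prediction == 1 else 1.0 - p_positive
    return Assistance(prediction, confidence, contributions)


def top_features(assistance: Assistance, k: int = 2) -> List[str]:
    """Names of the k features with the largest absolute contribution."""
    ranked = sorted(assistance.contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return [name for name, _ in ranked[:k]]


if __name__ == "__main__":
    # Hypothetical weights and applicant features for a loan-approval task.
    weights = {"income": 0.8, "debt_ratio": -1.5, "late_payments": -0.6}
    applicant = {"income": 1.2, "debt_ratio": 0.4, "late_payments": 2.0}
    result = assist(weights, bias=0.2, features=applicant)
    print(result.prediction, round(result.confidence, 3), top_features(result))
    # prints: 0 0.655 ['late_payments', 'income']
```

Here the model leans toward rejection with moderate confidence, and the explanation surfaces late payments as the strongest factor; an interface could show all three elements or only some of them.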

Beyond the model's outputs, studies have examined user agency, interventions in users' cognitive processes, and the presentation of model documentation. These aspects strongly shape the user experience and are essential for effective human-AI collaboration. Although deep learning models have received significant attention, many studies still rely on simpler, traditional models because they are easier to interpret.

Evaluation Metrics for Human-AI Decision Making

The evaluation of AI-assisted decision-making spans various metrics, covering both performance on the decision task and subjective perceptions of the AI system. On the objective side, accuracy and efficiency (for example, time taken per decision) are the predominant measures of decision performance. On the subjective side, user trust, understanding of the AI, and satisfaction play integral roles in evaluating and refining AI-assisted decision-making systems.
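The objective measures can be computed directly from interaction logs. The sketch below assumes a hypothetical log format (`LoggedTrial`) that records each participant's final decision, the ground-truth label, and the time taken, and reports accuracy alongside mean and median decision time as efficiency measures. The field names and example data are illustrative assumptions.

```python
from dataclasses import dataclass
from statistics import mean, median
from typing import Dict, List


@dataclass
class LoggedTrial:
    final_decision: int  # participant's submitted answer
    ground_truth: int    # correct label for the instance
    seconds: float       # time from seeing the instance to submitting a decision


def accuracy(trials: List[LoggedTrial]) -> float:
    """Fraction of trials where the final decision matches the ground truth."""
    return sum(t.final_decision == t.ground_truth for t in trials) / len(trials)


def efficiency(trials: List[LoggedTrial]) -> Dict[str, float]:
    """Decision-time summaries; the median is less sensitive to a few very slow trials."""
    times = [t.seconds for t in trials]
    return {"mean_seconds": mean(times), "median_seconds": median(times)}


if __name__ == "__main__":
    # Made-up trials for illustration only.
    trials = [
        LoggedTrial(1, 1, 12.0),
        LoggedTrial(0, 1, 20.0),
        LoggedTrial(0, 0, 8.0),
        LoggedTrial(1, 1, 15.0),
    ]
    print(accuracy(trials), efficiency(trials))
    # prints: 0.75 {'mean_seconds': 13.75, 'median_seconds': 13.5}
```

Subjective measures such as trust and satisfaction usually come from questionnaires rather than logs, so they are not computed here.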

A recurring issue is the divergence between subjective and objective metrics, which can paint conflicting pictures of user trust and satisfaction. For instance, individuals may report high trust in an AI system yet rarely follow its recommendations, or express skepticism yet rely on it heavily. Establishing consistent, validated metrics is imperative for producing reliable and comparable research outcomes.
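One way to see the subjective-objective gap is to set self-reported trust beside behavioral reliance. The sketch below assumes a hypothetical two-stage protocol in which a participant answers, then sees the AI's recommendation, then gives a final answer. From these logs it computes an agreement fraction (how often the final answer matches the AI) and a switch fraction (how often the participant adopts the AI's answer after initially disagreeing), then compares agreement with a 1-7 Likert trust rating rescaled to [0, 1]. The `TwoStageTrial` format and the `trust_behavior_gap` comparison are illustrative assumptions, not a validated metric.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class TwoStageTrial:
    initial_decision: int   # participant's answer before seeing the AI
    ai_recommendation: int  # what the AI recommended
    final_decision: int     # participant's answer after seeing the AI


def agreement_fraction(trials: List[TwoStageTrial]) -> float:
    """Share of trials whose final decision matches the AI's recommendation."""
    return sum(t.final_decision == t.ai_recommendation for t in trials) / len(trials)


def switch_fraction(trials: List[TwoStageTrial]) -> Optional[float]:
    """Among trials where the participant initially disagreed with the AI,
    the share where they switched to the AI's recommendation."""
    disagreed = [t for t in trials if t.initial_decision != t.ai_recommendation]
    if not disagreed:
        return None  # undefined when the participant never disagreed
    return sum(t.final_decision == t.ai_recommendation for t in disagreed) / len(disagreed)


def trust_behavior_gap(likert_trust: int, trials: List[TwoStageTrial], scale_max: int = 7) -> float:
    """Self-reported trust rescaled to [0, 1] minus behavioral agreement.
    Positive: stated trust exceeds reliance; negative: the reverse."""
    stated = (likert_trust - 1) / (scale_max - 1)
    return stated - agreement_fraction(trials)


if __name__ == "__main__":
    # Made-up trials for one participant who rated trust 7 on a 1-7 scale.
    trials = [
        TwoStageTrial(0, 1, 0),
        TwoStageTrial(1, 1, 1),
        TwoStageTrial(0, 1, 0),
        TwoStageTrial(1, 0, 1),
    ]
    print(agreement_fraction(trials), switch_fraction(trials), trust_behavior_gap(7, trials))
    # prints: 0.25 0.0 0.75
```

In the example, a participant who gives the highest trust rating yet follows the AI in only a quarter of trials, and never switches after disagreeing, gets a large positive gap: stated trust exceeds behavioral reliance.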

Towards a Unified Approach

The path to a comprehensive science of human-AI decision-making requires bridging multiple research efforts and developing frameworks that capture the nuances of decision tasks and AI assistance elements. Fostering collaboration between the human-computer interaction and AI communities could lead to breakthroughs in creating AI systems that not only support but also align with human decision-making patterns.

Ultimately, the goal is to create AI systems that are not only proficient in their predictive capabilities but are also attuned to human needs, ethical considerations, and the complexities of real-world applications. This pursuit calls for ongoing research, a genuine understanding of human interactions with AI, and a commitment to an AI future that complements and enhances human decision-making.