Analysis of "Simple Local Attentions Remain Competitive for Long-Context Tasks"

The paper "Simple Local Attentions Remain Competitive for Long-Context Tasks" by Xiong et al. examines efficient attention mechanisms for the transformer architecture, specifically for tasks whose inputs exceed the sequence lengths that standard models handle. It compares several attention architectures under a practical pretrain-and-finetune framework, offering insight into how applicable and efficient these approaches are in real-world scenarios.

Methodological Approach

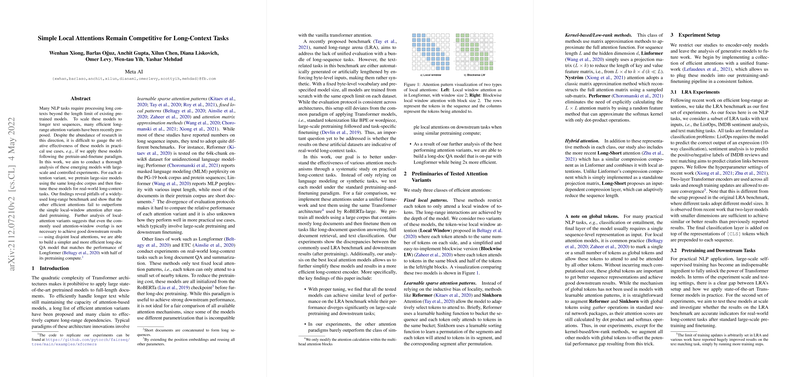

The paper focuses on three categories of efficient attention mechanisms: fixed local patterns, learnable sparse attention patterns, and kernel-based/low-rank methods. These classes are represented, respectively, by models such as Local Window Attention, Reformer, and Linformer. The authors implement the attention variants within a single consistent framework, which allows a controlled comparison.
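To make the first category concrete, the following is a minimal NumPy sketch of fixed local window attention. It is an illustration under stated assumptions, not the paper's implementation: the function names `local_window_mask` and `masked_attention`, the window size, and the toy dimensions are hypothetical. The dense mask is used only for readability; an efficient implementation would avoid materializing the full score matrix.

```python
import numpy as np

def local_window_mask(seq_len: int, window: int) -> np.ndarray:
    """Boolean mask: True where query i may attend to key j, i.e. |i - j| <= window."""
    idx = np.arange(seq_len)
    return np.abs(idx[:, None] - idx[None, :]) <= window

def masked_attention(q, k, v, mask):
    """Scaled dot-product attention with disallowed positions masked out."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = np.where(mask, scores, -np.inf)       # masked entries get zero weight
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v, weights

# Toy example (all sizes are assumptions chosen for illustration).
rng = np.random.default_rng(0)
seq_len, dim, window = 8, 4, 2
q, k, v = (rng.standard_normal((seq_len, dim)) for _ in range(3))

mask = local_window_mask(seq_len, window)
out, weights = masked_attention(q, k, v, mask)
```

Each token here attends to at most `2 * window + 1` positions, so the number of useful score entries grows linearly with sequence length rather than quadratically.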

To make the evaluation robust, the paper uses large-scale pretraining on a corpus of long documents and trains every model from scratch rather than starting from existing checkpoints such as RoBERTa. Training from scratch keeps differences in architectural parametrization from unfairly influencing the results, so the comparison reflects the attention mechanisms themselves.

Key Findings

The results of this paper indicate several noteworthy conclusions:

- Performance of Local Attentions: Simple local attentions, which restrict each token to attending to its immediate neighbors, were competitive on long-context downstream tasks. The paper shows that, after standard pretraining, more complex attention mechanisms did not substantially outperform these simpler approaches.

- Inadequacies of the Long-Range Arena (LRA) Benchmark: The authors reveal discrepancies between results obtained on the LRA benchmark and those from large-scale pretraining followed by downstream evaluation. LRA results do not reliably predict performance on practical tasks, a gap attributed to the benchmark's synthetic nature.

- Efficiency and Simplification: By analyzing attention-window overlaps, the authors show that local attention models can be made more efficient. Specifically, a disjoint local attention variant, in which attention windows do not overlap, performed comparably to Longformer on downstream tasks with significantly reduced computational demands.
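The disjoint variant mentioned in the last finding can be sketched as follows. This is a hedged illustration, not the authors' code: the names `disjoint_block_mask` and `blockwise_attention`, the block size, and the toy dimensions are assumptions, and Longformer-style global tokens are not modeled. The sketch splits the sequence into non-overlapping blocks and computes attention inside each block independently, so the full n × n score matrix is never formed.

```python
import numpy as np

def disjoint_block_mask(seq_len: int, block_size: int) -> np.ndarray:
    """Boolean mask: True where query i and key j fall in the same block."""
    block_id = np.arange(seq_len) // block_size
    return block_id[:, None] == block_id[None, :]

def blockwise_attention(q, k, v, block_size: int) -> np.ndarray:
    """Disjoint local attention, computed one non-overlapping block at a time."""
    seq_len, dim = q.shape
    out = np.empty_like(v)
    for start in range(0, seq_len, block_size):
        end = min(start + block_size, seq_len)
        # Scores only between tokens of the same block.
        s = q[start:end] @ k[start:end].T / np.sqrt(dim)
        s = s - s.max(axis=-1, keepdims=True)  # numerical stability
        w = np.exp(s)
        w = w / w.sum(axis=-1, keepdims=True)
        out[start:end] = w @ v[start:end]
    return out

# Toy example (all sizes are assumptions chosen for illustration).
rng = np.random.default_rng(0)
seq_len, dim, block_size = 16, 4, 4
q, k, v = (rng.standard_normal((seq_len, dim)) for _ in range(3))

out = blockwise_attention(q, k, v, block_size)
block_mask = disjoint_block_mask(seq_len, block_size)

# Fraction of the full score matrix that is actually computed.
fraction_computed = block_mask.sum() / seq_len**2
```

With these toy sizes, each of the 16 tokens scores against only the 4 tokens in its own block, so a quarter of the full attention matrix is computed; the fraction shrinks further as the sequence grows with the block size held fixed.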

Implications and Future Directions

These findings highlight the enduring efficacy of simpler local attention mechanisms and challenge the necessity of more complex architectures in certain contexts. They also reaffirm the importance of locality biases in natural language processing tasks.

Future research could explore the integration of these insights into generative models or investigate further optimizations in pretraining strategies, such as dynamic or staged context window scaling to enhance efficiency without sacrificing model performance.

Moreover, this work calls for the reconsideration of benchmark design to better reflect the nuances of real-world task performance, thereby guiding the development of more effective and practical long-range attention models. The potential divergence between synthetic benchmarks and actual downstream utility underscores the need for benchmarks that incorporate more realistic, contextually varied datasets.

In conclusion, this paper presents a compelling argument for re-evaluating the complexities introduced into transformer-based models and reaffirms the enduring value of simple, computationally efficient attention mechanisms in processing long-context textual data.