An Examination of VL-Adapter for Efficient Transfer Learning in Vision-and-Language Models

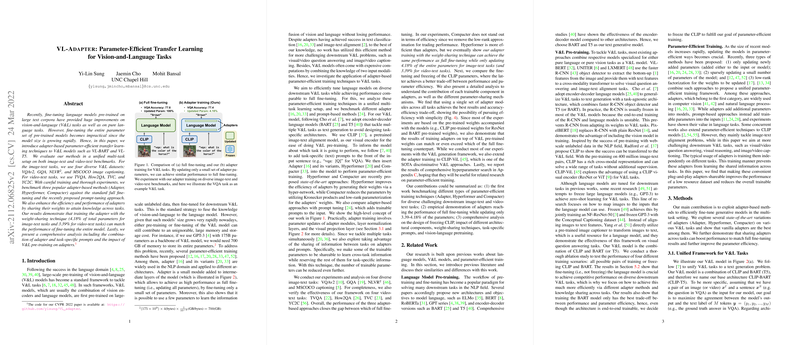

The paper introduces VL-Adapter, a parameter-efficient transfer learning framework for vision-and-language (V-L) models, specifically VL-BART and VL-T5. The motivation is that as these models grow, fine-tuning and storing a full copy of every parameter for each downstream task becomes increasingly impractical. The authors therefore investigate adapter-based methods, a prominent technique in NLP and, to a lesser extent, computer vision, as a way to address this challenge in V-L applications.

Methodology Overview

The authors use adapter-based methods to reduce the memory and storage burdens of large vision-and-language models. Three methods are evaluated: Adapter, Hyperformer, and Compacter. Each fine-tunes a model by updating only a small set of parameters while most of the pretrained weights stay frozen. Experiments are conducted in a unified multi-task learning setting that covers both image-text and video-text tasks. The image-text tasks span VQAv2, GQA, NLVR2, and MSCOCO captioning, while the video-text tasks include TVQA, How2QA, TVC, and YC2C.

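- Adapter: The most straightforward method. Small trainable bottleneck modules (a down-projection, a nonlinearity, and an up-projection, wrapped in a residual connection) are inserted into each layer of the model, and only these modules are fine-tuned.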

- Hyperformer: Utilizes hyper-networks to dynamically generate adapter weights conditioned on task and layer indices, allowing parameter sharing across tasks.

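- Compacter: Builds adapter weights as sums of Kronecker products between small matrices shared across layers and low-rank matrices, combining parameter sharing with low-rank parameterization to further reduce the number of trainable parameters.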
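To make the three mechanisms concrete, here is a minimal pure-Python sketch. It assumes a bottleneck adapter with a ReLU nonlinearity and no biases, a simplified linear hypernetwork standing in for Hyperformer, and a rank-1 PHM weight in the spirit of Compacter. The function names (`adapter_forward`, `hypernet_weights`, `phm_weight`, and the helpers) are hypothetical, the dimensions are toy values, and none of this is the authors' code.

```python
import random

rng = random.Random(0)


def rand_matrix(m, n, scale=0.1):
    """Random m x n matrix (list of rows), for illustration only."""
    return [[rng.uniform(-scale, scale) for _ in range(n)] for _ in range(m)]


def matvec(W, x):
    """Multiply a matrix W (list of rows) by a vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def adapter_forward(h, W_down, W_up):
    """Bottleneck adapter: h + W_up @ ReLU(W_down @ h).

    W_down is r x d (down-projection), W_up is d x r (up-projection).
    Biases are omitted to keep the sketch short.
    """
    z = [max(0.0, a) for a in matvec(W_down, h)]  # ReLU (assumed nonlinearity)
    return [hi + ui for hi, ui in zip(h, matvec(W_up, z))]  # residual connection


def hypernet_weights(task_emb, layer_emb, P, rows, cols):
    """Hypothetical, simplified Hyperformer-style hypernetwork: a linear map P
    turns the concatenated task and layer embeddings into a flattened
    rows x cols adapter weight matrix."""
    flat = matvec(P, task_emb + layer_emb)  # list concatenation, then projection
    return [flat[i * cols:(i + 1) * cols] for i in range(rows)]


def kron(A, B):
    """Kronecker product of A (m x n) and B (p x q), giving (m*p) x (n*q)."""
    return [[a * b for a in row_a for b in row_b] for row_a in A for row_b in B]


def outer(u, v):
    """Outer product u v^T, i.e. a rank-1 matrix."""
    return [[ui * vj for vj in v] for ui in u]


def phm_weight(shared, fast):
    """Compacter-style PHM weight: W = sum_i kron(shared[i], fast[i])."""
    W = kron(shared[0], fast[0])
    for A, B in zip(shared[1:], fast[1:]):
        K = kron(A, B)
        W = [[w + k for w, k in zip(rw, rk)] for rw, rk in zip(W, K)]
    return W


d, r, n = 4, 2, 2  # hidden size, bottleneck size, number of PHM terms (toy values)
h = [1.0, -0.5, 0.25, 2.0]  # one token's hidden state

# 1) Plain adapter with directly trained weights.
out = adapter_forward(h, rand_matrix(r, d), rand_matrix(d, r))

# 2) Hypernetwork: an (r*d) x 6 projection maps 3-d task + 3-d layer embeddings to W_down.
P = rand_matrix(r * d, 6)
W_down_hyper = hypernet_weights([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], P, r, d)
out_hyper = adapter_forward(h, W_down_hyper, rand_matrix(d, r))

# 3) PHM: n small n x n matrices (shared across layers in Compacter),
#    each paired with a rank-1 (r/n) x (d/n) matrix.
shared = [rand_matrix(n, n, scale=1.0) for _ in range(n)]
fast = [outer([rng.uniform(-1, 1) for _ in range(r // n)],
              [rng.uniform(-1, 1) for _ in range(d // n)]) for _ in range(n)]
W_down_phm = phm_weight(shared, fast)  # shape r x d
out_phm = adapter_forward(h, W_down_phm, rand_matrix(d, r))
```

Setting `W_up` to zeros turns the adapter into an identity map, so a near-identity initialization lets training start from the pretrained model's behavior; this is a common choice for adapters, not necessarily the paper's.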

Empirical Findings

The experimental results suggest that the Single Adapter configuration, in which one set of adapter weights is shared across all tasks, provides the best balance between performance and efficiency: it closely matches full fine-tuning while updating only a small fraction of the parameters (4.18% for image-text tasks and 3.39% for video-text tasks). Hyperformer and Compacter show mixed results. Although Hyperformer is parameter-efficient, it does not outperform the Single Adapter. Compacter, which relies on Kronecker products and low-rank factorization, performs less well, presumably because these constraints limit how effectively the adapters can fuse visual and textual information.
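The 4.18% and 3.39% figures come from the paper; the arithmetic below only illustrates why sharing one adapter across tasks keeps the trainable fraction small. It is a sketch under hypothetical settings (hidden size 768, bottleneck size 96, 48 adapter insertion sites, 4 tasks, a 220M-parameter frozen backbone), and it does not reproduce the paper's exact accounting, which may also count other trainable components.

```python
def adapter_params(d_model: int, bottleneck: int) -> int:
    """Weights and biases of one bottleneck adapter (down- plus up-projection)."""
    return 2 * d_model * bottleneck + d_model + bottleneck


def trainable_adapter_params(d_model: int, bottleneck: int, num_sites: int,
                             num_tasks: int, shared: bool) -> int:
    """Total adapter parameters: one set if shared across tasks, else one set per task."""
    per_set = num_sites * adapter_params(d_model, bottleneck)
    return per_set if shared else per_set * num_tasks


# Hypothetical configuration, not the paper's exact setup.
d_model, bottleneck, num_sites, num_tasks = 768, 96, 48, 4
backbone = 220_000_000  # hypothetical frozen backbone size

single = trainable_adapter_params(d_model, bottleneck, num_sites, num_tasks, shared=True)
multiple = trainable_adapter_params(d_model, bottleneck, num_sites, num_tasks, shared=False)

for name, count in [("single (shared)", single), ("multiple (per task)", multiple)]:
    fraction = count / (backbone + count)
    print(f"{name}: {count:,} trainable parameters, {fraction:.2%} of total")
```

Per-task adapters grow linearly with the number of tasks, whereas a shared adapter's cost stays constant. Hyperformer and Compacter attack the same cost from a different angle, by generating or factorizing the adapter weights instead of storing them directly.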

Theoretical and Practical Implications

The introduction of VL-Adapter has several implications in the fields of transfer learning and model optimization:

- Theoretical Implications: The strong results of a single adapter shared across tasks suggest that much of the knowledge needed for different V-L tasks can reside in shared parameters, reducing redundancy without sacrificing task-specific performance. This suggests that dedicating separate parameters to every task, as in per-task adapters, is not always necessary in multi-task setups.

- Practical Implications: VL-Adapter offers a viable strategy for fine-tuning in resource-constrained environments. Because only a small set of parameters is trained and stored per task, the findings are particularly relevant for applications that require frequent updates or deployment on devices with limited memory and storage.

Future Directions

Future developments could explore deeper integrations of adapter techniques with pre-training strategies to further enhance V-L model performance and efficiency. The exploration of adapter methods in other multimodal contexts beyond vision-and-language tasks may also yield valuable insights, potentially benefiting a broader range of AI applications.

In conclusion, by reducing the number of trainable parameters and the storage needed per task, VL-Adapter establishes a promising framework for balancing efficiency with performance, an important step toward aligning the capabilities of modern AI systems with practical deployment needs.