An Analytical Review of SwinBERT: End-to-End Transformers with Sparse Attention for Video Captioning

The paper "SwinBERT: End-to-End Transformers with Sparse Attention for Video Captioning" introduces an end-to-end Transformer architecture for video captioning. It departs from the conventional pipeline, which relies heavily on dense video features extracted offline, by taking raw video frames as direct input. This review covers the model's structure, its sparse attention mechanism, and the empirical results reported across multiple datasets.

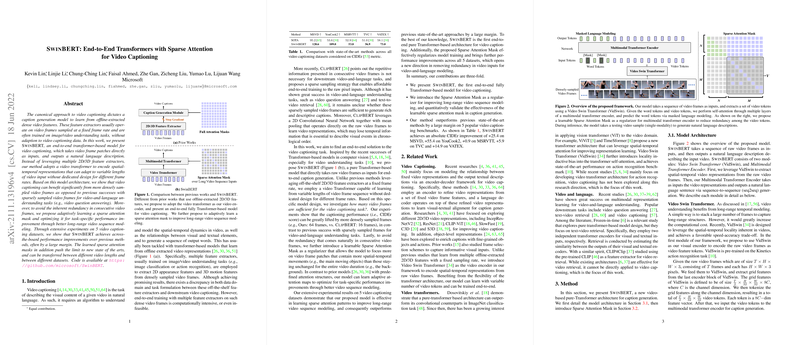

SwinBERT uses the Video Swin Transformer (VidSwin) to encode raw video frames into token representations, which are then passed to a multimodal Transformer encoder that generates the natural language caption. A core component of the paper is the learnable Sparse Attention Mask, which acts as a regularizer: it improves long-range video sequence modeling by reducing redundancy among video tokens and focusing attention on the salient ones. According to the paper, this design lets SwinBERT adapt to variable-length video inputs without dedicated designs for different frame rates.
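To make the mask layout concrete, here is a minimal sketch in plain Python, not the authors' implementation. It assumes a sequence-to-sequence style layout in which text tokens attend causally to earlier text and to all video tokens, video tokens do not attend to text, and the video-to-video block is a learnable matrix passed through a sigmoid. The names `build_attention_mask`, `num_text`, and `video_logits` are hypothetical and chosen for illustration only.

```python
import math
from typing import List, Sequence


def sigmoid(x: float) -> float:
    """Map an unconstrained logit to a soft mask value in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def build_attention_mask(
    num_text: int, video_logits: Sequence[Sequence[float]]
) -> List[List[float]]:
    """Build an (N + M) x (N + M) soft attention mask.

    Rows are query tokens, columns are key tokens. The first `num_text`
    positions are text tokens, the remaining M positions are video tokens.
    ASSUMPTION: this layout is illustrative, not taken from the paper's code.
    """
    num_video = len(video_logits)
    size = num_text + num_video
    mask = [[0.0] * size for _ in range(size)]
    for i in range(size):
        for j in range(size):
            query_is_text = i < num_text
            key_is_text = j < num_text
            if query_is_text and key_is_text:
                # Causal text-to-text attention: only current and earlier words.
                mask[i][j] = 1.0 if j <= i else 0.0
            elif query_is_text and not key_is_text:
                # Text tokens may attend to every video token.
                mask[i][j] = 1.0
            elif not query_is_text and not key_is_text:
                # Learnable video-to-video block, squashed into (0, 1).
                mask[i][j] = sigmoid(video_logits[i - num_text][j - num_text])
            # Video-to-text entries stay 0.0 (video does not see the caption).
    return mask
```

For example, `build_attention_mask(2, [[0.0, 0.0], [0.0, 0.0]])` yields a 4 x 4 mask whose video-to-video entries are all 0.5, since every logit is zero. During training, the logits would be updated by gradient descent alongside the rest of the model.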

The paper reports results on five datasets (MSVD, YouCook2, MSRVTT, VATEX, and TVC), on which SwinBERT outperforms existing methods. Specifically, the model achieves significant gains in CIDEr score on all five, which demonstrates the potential of end-to-end Transformers for video captioning. The improvement is especially pronounced on MSVD, at 25.4 CIDEr points over the previous state of the art. The learned sparse attention patterns also transfer across datasets and video lengths, which points to good adaptability and generalization across domains.

The introduction of the Sparse Attention Mask is noteworthy. It exploits the inherent redundancy of video data by learning to concentrate attention on the dynamic regions of the frame sequence rather than spreading it evenly over all video tokens. This adaptive allocation matters for Transformer architectures in general, because video inputs produce very long token sequences and attention is best spent where the content actually changes.
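One common way to encourage such a mask to become sparse is an L1-style penalty on its activated values, added to the captioning loss. The sketch below illustrates that idea under stated assumptions: the function name `sparsity_penalty` and the parameter `weight` (the regularization strength) are hypothetical, and the exact form of the regularizer should be checked against the paper.

```python
import math
from typing import Sequence


def sparsity_penalty(video_logits: Sequence[Sequence[float]], weight: float) -> float:
    """Return weight * sum of |sigmoid(logit)| over the video-to-video mask.

    ASSUMPTION: an L1 penalty on sigmoid-activated mask values; this is an
    illustrative regularizer, not a verified copy of the paper's loss.
    """
    total = 0.0
    for row in video_logits:
        for logit in row:
            activated = 1.0 / (1.0 + math.exp(-logit))
            total += abs(activated)
    return weight * total


# Pushing logits toward negative values shrinks the penalty, so minimizing
# it alongside the captioning loss favors masks with few active entries.
dense = sparsity_penalty([[2.0, 2.0], [2.0, 2.0]], weight=0.5)
sparse = sparsity_penalty([[2.0, -4.0], [-4.0, 2.0]], weight=0.5)
```

In this toy comparison `sparse` is smaller than `dense`, because two of the four mask entries have been driven close to zero.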

Looking ahead, SwinBERT could be combined with large-scale video-language pre-training to further exploit its capabilities. Given the ongoing advances in Transformer networks for NLP and computer vision, it is also plausible to integrate SwinBERT into broader multimodal learning frameworks, where text and video are modeled jointly for richer context.

In conclusion, SwinBERT is a capable end-to-end architecture for video captioning, and its use of sparse attention offers useful insight into efficient video-text alignment. There is still room to improve computational speed through more refined implementations of the sparse mask. Even so, SwinBERT sets a precedent for future work in which dynamic attention in Transformer networks drives improvements on multimodal understanding tasks.