Analysis of "ClipCap: CLIP Prefix for Image Captioning"

The paper "ClipCap: CLIP Prefix for Image Captioning" introduces a novel method for generating image captions that uses the pre-trained CLIP model as a semantic encoder of the image. A mapping network bridges the gap between CLIP's image embeddings and text generation by a large language model (LLM), GPT-2. The approach positions itself as an efficient, less resource-intensive alternative to traditional image captioning models.

Methodological Approach

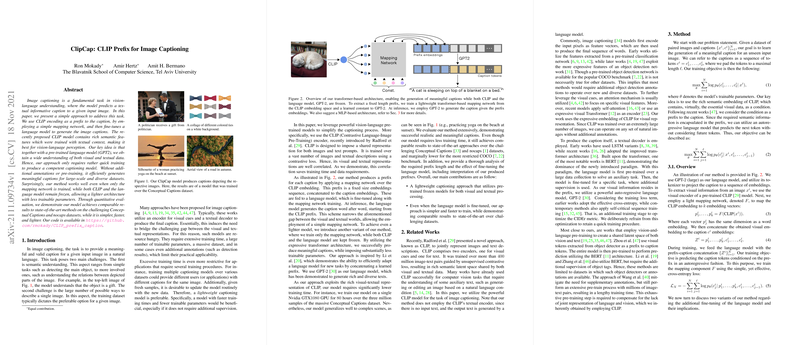

Central to the paper is CLIP, known for its robust joint representation of visual and textual data. The frozen CLIP image encoder first embeds the image; a mapping network then translates that embedding into a fixed-length prefix, a short sequence of vectors in GPT-2's token-embedding space that is prepended to the caption tokens. The mapping network can be a lightweight multi-layer perceptron (MLP) or a more expressive transformer-based architecture.
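The prefix mechanism can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the two-layer MLP with a tanh activation, the helper names (`init_mlp`, `map_to_prefix`, `build_lm_input`), and the random stand-ins for CLIP outputs and GPT-2 token embeddings are all hypothetical. Toy sizes keep it fast; in practice a CLIP ViT-B/32 image embedding has 512 dimensions and GPT-2 small uses 768-dimensional token embeddings.

```python
import numpy as np

def init_mlp(rng, in_dim, hidden_dim, out_dim):
    """Randomly initialise a two-layer MLP (hypothetical helper, not the paper's code)."""
    return {
        "W1": rng.normal(0.0, 0.02, size=(in_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "W2": rng.normal(0.0, 0.02, size=(hidden_dim, out_dim)),
        "b2": np.zeros(out_dim),
    }

def map_to_prefix(params, clip_emb, prefix_len, lm_dim):
    """Map CLIP embeddings (B, clip_dim) to prefixes (B, prefix_len, lm_dim)."""
    hidden = np.tanh(clip_emb @ params["W1"] + params["b1"])  # tanh activation (assumed)
    flat = hidden @ params["W2"] + params["b2"]               # (B, prefix_len * lm_dim)
    return flat.reshape(clip_emb.shape[0], prefix_len, lm_dim)

def build_lm_input(prefix, caption_token_embs):
    """Prepend the prefix to the caption's token embeddings along the sequence axis."""
    return np.concatenate([prefix, caption_token_embs], axis=1)

# Toy sizes so the example runs quickly (real values: clip_dim=512, lm_dim=768).
rng = np.random.default_rng(0)
clip_dim, lm_dim, prefix_len = 16, 8, 4
params = init_mlp(rng, clip_dim, (prefix_len * lm_dim) // 2, prefix_len * lm_dim)

clip_emb = rng.normal(size=(2, clip_dim))       # stand-in for frozen CLIP image embeddings
caption_embs = rng.normal(size=(2, 5, lm_dim))  # stand-in for GPT-2 caption token embeddings
prefix = map_to_prefix(params, clip_emb, prefix_len, lm_dim)
lm_input = build_lm_input(prefix, caption_embs)
print(prefix.shape, lm_input.shape)  # (2, 4, 8) (2, 9, 8)
```

The language model then sees the prefix as if it were the embeddings of a few extra "tokens" preceding the caption. A transformer-based mapping network would replace `map_to_prefix` but produce a tensor of the same shape.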

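Two configurations are explored: one that fine-tunes GPT-2 together with the mapping network, and one that keeps GPT-2 frozen and trains only the mapping network (CLIP stays frozen in both). The frozen configuration, which the paper pairs with the transformer mapping network, significantly reduces computational demands by restricting the number of trainable parameters, while still producing captions of satisfactory quality.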
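The effect of freezing GPT-2 on the trainable-parameter budget can be estimated with simple arithmetic. The sketch below is illustrative only: it assumes the MLP mapping network from a 512-dimensional CLIP embedding to a 10-vector prefix in a 768-dimensional space with a hidden width of half the output size, and uses an approximate 124M-parameter figure for GPT-2 small. The paper's frozen setting actually uses the transformer mapping network, whose parameter count differs; the MLP is used here only to keep the arithmetic simple.

```python
def mlp_param_count(in_dim, hidden_dim, out_dim):
    """Weights plus biases of a two-layer MLP."""
    return in_dim * hidden_dim + hidden_dim + hidden_dim * out_dim + out_dim

def trainable_params(mapping_params, lm_params, finetune_lm):
    """CLIP is frozen in both setups; only the language model's status changes."""
    return mapping_params + (lm_params if finetune_lm else 0)

CLIP_DIM, GPT2_DIM, PREFIX_LEN = 512, 768, 10  # assumed sizes
GPT2_SMALL_PARAMS = 124_000_000                 # approximate size of GPT-2 small (assumed)

mapping = mlp_param_count(CLIP_DIM, (GPT2_DIM * PREFIX_LEN) // 2, GPT2_DIM * PREFIX_LEN)
print(f"mapping network:  {mapping:,}")                                                # 31,468,800
print(f"frozen GPT-2:     {trainable_params(mapping, GPT2_SMALL_PARAMS, False):,}")    # 31,468,800
print(f"fine-tuned GPT-2: {trainable_params(mapping, GPT2_SMALL_PARAMS, True):,}")     # 155,468,800
```

Under these assumptions, freezing GPT-2 cuts the trainable parameters by roughly a factor of five, which is where the savings in memory and training time come from.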

Numerical Evaluation

The proposed method was benchmarked against state-of-the-art models such as Oscar and VLP on the Conceptual Captions, nocaps, and COCO datasets. The paper highlights substantial efficiency gains, particularly in training time: ClipCap requires significantly less compute, making it feasible to train on modest hardware. Helped by CLIP's strong visual representations, it achieves results comparable to existing solutions, although it slightly underperforms on some COCO metrics; unlike methods such as Oscar, it does not use additional object tags.

Implications and Future Directions

The results suggest that ClipCap is particularly well suited to datasets with a rich variety of visual concepts, benefiting from CLIP's large-scale pre-training. The architecture's simplicity offers practical advantages for applications that require rapid deployment of captioning models across diverse image corpora.

This work points to a potential shift in image captioning toward leveraging powerful pre-trained vision-language models, reducing reliance on large datasets and extensive training. This direction could lead to applications in real-time image processing, where adaptability and low latency are critical.

Future work might extend this prefix-based approach to tasks such as visual question answering or interactive AI systems, exploring how CLIP's representations can serve as a common interface between modalities in multimodal systems. Additionally, improving CLIP's object recognition or integrating additional contextual knowledge could further strengthen the model's ability to handle semantically denser tasks.

Overall, the ClipCap model exemplifies a method where simplicity, efficiency, and adaptability converge, underscoring the transformative potential of pre-trained transformers in the AI landscape.