Training Verifiers to Solve Math Word Problems

In their paper, Cobbe et al. (2021) address a persistent weakness of modern LLMs: their difficulty with multi-step mathematical reasoning. Despite rapid progress across many domains, math word problems expose this weakness sharply, because a single mistake in any step can derail an entire solution. To study the problem, the authors introduce GSM8K, a dataset of grade school math word problems designed for analyzing and improving LLMs' multi-step reasoning. They also propose training verifiers that judge the correctness of model-generated solutions.

Key Contributions

The paper makes several notable contributions:

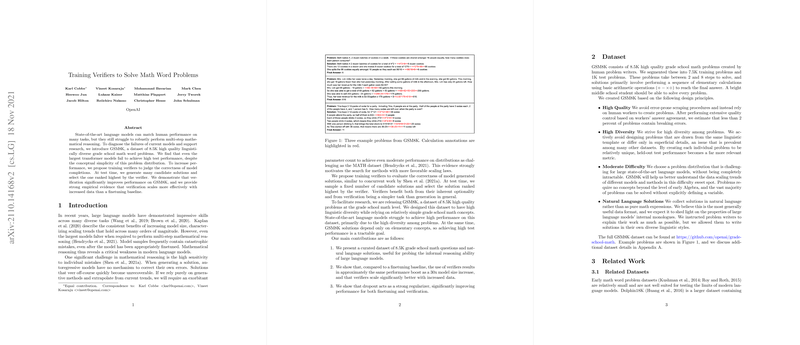

- GSM8K Dataset: The authors present GSM8K, a curated dataset of 8.5K grade school math word problems (7.5K for training, 1K for testing). Earlier datasets often rely on a small set of linguistic templates or suffer from weak quality control. GSM8K, by contrast, is linguistically diverse and carefully checked, which makes it a rigorous testbed for LLMs' multi-step reasoning.

- Verifiers for Solution Validation: The central proposal is to train verifiers, which are models that judge whether a solution generated by an LLM is correct. At test time, many candidate solutions are sampled and the one the verifier ranks highest is returned. This yields substantial gains over a finetuning baseline.

- Empirical Results: The paper shows that verification provides a performance boost roughly equivalent to a 30x increase in model size. It also scales better than finetuning as the amount of training data grows.

Dataset Characteristics

The GSM8K dataset was built around four design principles:

- High Quality: Problems were written by human problem writers and passed through quality checks. The authors estimate that fewer than 2% of problems contain breaking errors.

- High Diversity: Problems avoid repetitive templates and vary widely in wording, so a model cannot score well just by learning a handful of surface patterns.

- Moderate Difficulty: Problems take between 2 and 8 steps to solve and require only elementary arithmetic (+, -, ×, ÷). They challenge state-of-the-art LLMs while remaining within reach of a bright middle school student.

- Natural Language Solutions: Reference solutions are written in natural language rather than as bare mathematical expressions. This encourages models that explain their reasoning and makes their outputs easier to interpret.
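To make the solution format concrete, the sketch below parses a solution written in the style of the public GSM8K release. In that release, intermediate calculations carry calculator annotations of the form `<<48/2=24>>` and the final answer follows a `####` marker. This format comes from the released dataset files rather than from the text above. The helper names (`extract_final_answer`, `strip_annotations`, `check_annotations`) are hypothetical, and the Natalia solution is only an illustrative example in this style. Arithmetic is evaluated with a small `ast`-based evaluator instead of `eval`, so only the four elementary operations are accepted.

```python
import ast
import operator
import re
from typing import Optional

# Calculator annotations look like <<48/2=24>> (format of the public GSM8K files).
ANNOTATION = re.compile(r"<<([^=<>]+)=([^<>]+)>>")

# Only the four elementary operations are allowed.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _safe_eval(node: ast.AST) -> float:
    """Evaluate a parsed arithmetic expression, rejecting anything else."""
    if isinstance(node, ast.Expression):
        return _safe_eval(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_safe_eval(node.left), _safe_eval(node.right))
    if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
        return -_safe_eval(node.operand)
    raise ValueError(f"unsupported expression: {ast.dump(node)}")


def extract_final_answer(solution: str) -> Optional[str]:
    """Return the text after the last '####' marker, with thousands separators removed."""
    if "####" not in solution:
        return None
    return solution.split("####")[-1].strip().replace(",", "")


def strip_annotations(solution: str) -> str:
    """Drop calculator annotations, leaving only the natural-language solution."""
    return ANNOTATION.sub("", solution)


def check_annotations(solution: str) -> bool:
    """Recompute every <<expr=result>> and confirm the stated result."""
    for expr, result in ANNOTATION.findall(solution):
        try:
            value = _safe_eval(ast.parse(expr, mode="eval"))
            if abs(value - float(result)) > 1e-6:
                return False
        except (SyntaxError, ValueError, ZeroDivisionError):
            return False
    return True


solution = (
    "Natalia sold 48/2 = <<48/2=24>>24 clips in May.\n"
    "Natalia sold 48+24 = <<48+24=72>>72 clips altogether in April and May.\n"
    "#### 72"
)
print(extract_final_answer(solution))  # 72
print(check_annotations(solution))     # True
```

Comparing extracted final answers in this way is also how candidate solutions can be labeled correct or incorrect for verifier training.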

Methodology and Results

The methodology involves two primary steps: finetuning and verification.

Finetuning

Finetuning trains the generator with the standard language modeling (cross-entropy) objective on the training solutions. The authors find that scaling model size alone is not enough. Even the largest GPT-3 model (175B parameters) falls well short. Extrapolating the observed trend, they estimate that finetuning alone would require a model of roughly 10^16 parameters to reach an 80% solve rate on GSM8K. This motivates additional methods that catch the errors that accumulate during multi-step reasoning.
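As a minimal sketch of the finetuning objective, suppose the model's probability for each reference token is already available. The average cross-entropy is then the mean negative log-probability of those tokens. The `loss_mask` excludes the problem statement so that only solution tokens are scored. This masking is a common convention assumed here, not a detail stated above, and `finetune_loss` is a hypothetical name.

```python
import math
from typing import Sequence


def finetune_loss(target_token_probs: Sequence[float], loss_mask: Sequence[bool]) -> float:
    """Mean cross-entropy over the positions selected by loss_mask.

    target_token_probs[t] is the probability the model assigned to the
    reference token at position t. Masking out the problem tokens is an
    assumed convention for this sketch.
    """
    if len(target_token_probs) != len(loss_mask):
        raise ValueError("probabilities and mask must have the same length")
    terms = [-math.log(p) for p, keep in zip(target_token_probs, loss_mask) if keep]
    if not terms:
        raise ValueError("loss_mask selects no tokens")
    return sum(terms) / len(terms)


# Toy example: the first position is a problem token and is excluded.
probs = [0.9, 0.5, 0.25]
mask = [False, True, True]
print(finetune_loss(probs, mask))  # (ln 2 + ln 4) / 2 = 1.5 * ln 2, about 1.0397
```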

Verification

The authors train verifiers to judge generated solutions. The pipeline has three stages:

- Generating Multiple Solutions: A finetuned generator samples 100 candidate solutions for each training problem.

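- Verifier Training: Each candidate is labeled correct or incorrect solely by whether it reaches the correct final answer, and the verifier is trained to predict this label. Because only the final answer is checked, some solutions that reach the right answer through flawed reasoning are labeled correct.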

- Selection: At test time, the generator samples 100 candidate solutions per problem, the verifier scores each one, and the highest-ranked solution is returned.
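The labeling and selection stages above can be sketched as follows. The verifier is represented by a hypothetical callable `verifier_score(problem, solution_text)`, and the toy scores in the usage lines are made-up stand-ins for a trained model's outputs. `Candidate`, `label_candidates`, and `select_best` are illustrative names, not the paper's code.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Candidate:
    """One sampled solution and the final answer parsed from it (None if missing)."""
    text: str
    final_answer: Optional[str]


def label_candidates(candidates: List[Candidate], reference_answer: str) -> List[int]:
    """Verifier training labels: 1 if the final answer matches the reference, else 0.

    Only the final answer is checked, so a solution that reaches the right
    number through flawed reasoning is still labeled 1.
    """
    return [int(c.final_answer == reference_answer) for c in candidates]


def select_best(
    problem: str,
    candidates: List[Candidate],
    verifier_score: Callable[[str, str], float],
) -> Candidate:
    """Best-of-N selection: return the candidate the verifier scores highest."""
    if not candidates:
        raise ValueError("no candidates to rank")
    return max(candidates, key=lambda c: verifier_score(problem, c.text))


# Toy usage with a stand-in scorer (a real verifier would be a trained model).
cands = [Candidate("sol A", "72"), Candidate("sol B", "70"), Candidate("sol C", "72")]
print(label_candidates(cands, "72"))  # [1, 0, 1]
toy_scores = {"sol A": 0.31, "sol B": 0.12, "sol C": 0.87}
print(select_best("How many clips?", cands, lambda p, t: toy_scores[t]).text)  # sol C
```

The verifier only has to rank candidates, not produce a solution. This is why a moderately sized verifier can add value on top of a generator of the same size.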

Numerical Performance

Verification substantially improves performance:

- On the full GSM8K training set, verification at the 6B scale slightly outperforms a finetuned 175B model. This gain is comparable to a 30x increase in model size (175B / 6B is about 29).

- Token-level verifiers, which predict correctness after every token, outperform solution-level verifiers. Dropout regularization improves both finetuning and verification.
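The difference between the two verifier variants can be sketched under stated assumptions. A token-level verifier is trained to predict the solution's correctness label at every token, while a solution-level verifier receives a target only at the final token. The helper names are hypothetical. The squared-error loss is an assumed choice of objective for the scalar prediction, and dropout is omitted. The sketch ranks a finished solution by the prediction at its last token.

```python
from typing import List, Optional, Sequence


def verifier_targets(num_tokens: int, label: int, token_level: bool) -> List[Optional[float]]:
    """Per-position targets for a verifier's scalar value head (None = no loss).

    Token-level: every solution token is trained toward the solution's label.
    Solution-level: only the final token carries a target.
    """
    if num_tokens < 1:
        raise ValueError("need at least one token")
    if token_level:
        return [float(label)] * num_tokens
    return [None] * (num_tokens - 1) + [float(label)]


def masked_squared_error(
    predictions: Sequence[float], targets: Sequence[Optional[float]]
) -> float:
    """Mean squared error over positions that have a target (assumed loss form)."""
    pairs = [(p, t) for p, t in zip(predictions, targets) if t is not None]
    if not pairs:
        raise ValueError("no positions with targets")
    return sum((p - t) ** 2 for p, t in pairs) / len(pairs)


def ranking_score(predictions: Sequence[float]) -> float:
    """Score used to rank a finished solution: the value at its last token."""
    return predictions[-1]


preds = [0.4, 0.6, 0.9]  # hypothetical per-token verifier outputs
print(verifier_targets(3, 1, token_level=False))  # [None, None, 1.0]
print(ranking_score(preds))  # 0.9
```

Token-level targets give the verifier many more training signals per solution than a single final-token target does.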

Implications and Future Work

Verifiers offer a practical route to better LLM performance on tasks that demand precise, multi-step reasoning. The results suggest that model size is not the only lever. On GSM8K, adding a verifier delivers gains that would otherwise require a far larger model, and verification scales better than finetuning as training data grows.

Practical Implications: The same generate-then-verify paradigm could extend to other domains where candidate solutions benefit from post-hoc validation, such as code synthesis, scientific discovery, and automated theorem proving.

Theoretical Implications: The findings suggest that scaling analyses should account for test-time methods such as verification. They also raise the possibility that some form of internal verification may matter for achieving more human-like reasoning.

Future Directions: Subsequent research could apply verifiers to a broader range of reasoning tasks and integrate verification more deeply with generation. Another direction is making verifiers robust to adversarial solutions. The paper already observes that when too many candidates are sampled, the search begins to surface solutions that fool the verifier, and performance declines.

Conclusion

Cobbe et al.'s paper makes a significant contribution to improving LLMs' mathematical reasoning. By training verifiers, the authors demonstrate a practical, scalable method that improves performance and sheds light on where current LLMs fall short. The GSM8K dataset gives the field a shared benchmark for further progress on multi-step reasoning.