WavLM: Large-Scale Self-Supervised Pre-Training for Full Stack Speech Processing

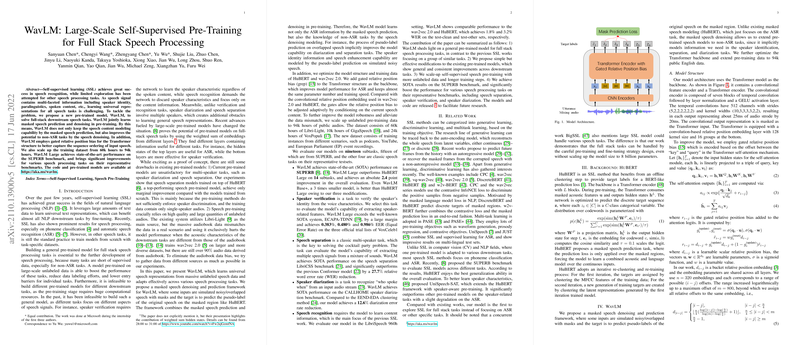

"WavLM: Large-Scale Self-Supervised Pre-Training for Full Stack Speech Processing" presents WavLM, a pre-trained model designed to serve a wide range of speech processing tasks. The work extends the self-supervised learning (SSL) paradigm in speech, whose success had mainly been shown on automatic speech recognition (ASR), by addressing limitations of earlier models such as HuBERT and wav2vec 2.0. It combines three ideas: a joint masked speech prediction and denoising objective, a gated relative position bias in the Transformer encoder, and a much larger and more diverse pre-training corpus.

Methodology

The paper highlights several key innovations:

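- Masked Speech Denoising and Prediction:

  - During pre-training, WavLM receives simulated noisy and overlapped speech, created by mixing each input utterance with noise or a segment of another utterance, and parts of the input are masked. The model must predict pseudo-labels of the original, clean speech on the masked regions, so it learns masked speech prediction and denoising at the same time.

  - Combining the two objectives preserves strong ASR performance while improving results on non-ASR tasks such as speaker verification and speaker diarization.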

- Model Structure Optimization:

  - WavLM adds a gated relative position bias to the self-attention layers of its Transformer encoder.

  - The relative position bias is scaled by gates computed from the current speech content, so each frame can adapt how strongly it relies on positional information. This improves both performance and robustness.

- Increased Pre-Training Dataset:

  - For WavLM Base+ and WavLM Large, the pre-training data was expanded to 94k hours: 60k hours from Libri-Light, 10k hours from GigaSpeech, and 24k hours from VoxPopuli (English).

  - This more diverse data reduces the domain mismatch of audiobook-only corpora by covering a wider range of acoustic environments and speaking styles.
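
The masked speech denoising objective described above can be illustrated with a small NumPy sketch of utterance mixing plus span masking. It is not the authors' implementation: the function names `mix_utterance` and `span_mask`, the rule that the overlapped region covers less than half of the primary utterance, the -5 to 5 dB energy-ratio range, and the masking parameters (8% span-start probability, 10-frame spans) are all illustrative assumptions. The same mixing function could overlay a noise clip instead of a second utterance.

```python
import numpy as np


def mix_utterance(primary, secondary, rng, max_overlap=0.5, ratio_db_range=(-5.0, 5.0)):
    """Overlay a random chunk of `secondary` onto a random region of `primary`.

    Assumption: the overlapped region is shorter than `max_overlap` of the primary
    length, so the primary speaker stays dominant. `ratio_db` is the
    primary-to-interference energy ratio (dB) inside the overlapped region.
    """
    n = len(primary)
    # Length of the overlapped region: at least 1 sample, below max_overlap * n.
    seg_len = int(rng.integers(1, max(2, int(max_overlap * n))))
    seg_len = min(seg_len, len(secondary))
    start = int(rng.integers(0, n - seg_len + 1))
    src = int(rng.integers(0, len(secondary) - seg_len + 1))
    chunk = secondary[src:src + seg_len].astype(np.float64)

    # Scale the interfering chunk to hit the sampled energy ratio.
    ratio_db = rng.uniform(*ratio_db_range)
    p_energy = np.mean(primary[start:start + seg_len] ** 2) + 1e-8
    c_energy = np.mean(chunk ** 2) + 1e-8
    scale = np.sqrt(p_energy / (c_energy * 10 ** (ratio_db / 10)))

    mixed = primary.astype(np.float64).copy()
    mixed[start:start + seg_len] += scale * chunk
    return mixed


def span_mask(num_frames, rng, start_prob=0.08, span_len=10):
    """Boolean frame mask: each frame starts a masked span with prob `start_prob`."""
    mask = np.zeros(num_frames, dtype=bool)
    for s in np.flatnonzero(rng.random(num_frames) < start_prob):
        mask[s:s + span_len] = True
    return mask


rng = np.random.default_rng(0)
primary = rng.standard_normal(16000)    # stand-in for 1 s of 16 kHz audio
secondary = rng.standard_normal(16000)  # stand-in for another speaker's utterance
noisy_input = mix_utterance(primary, secondary, rng)
mask = span_mask(50, rng)               # about 50 frames for 1 s at a 20 ms frame rate
# Training would apply the prediction loss only where `mask` is True, against
# pseudo-labels of the clean `primary` utterance (not computed here).
```

The key design point is that the input is corrupted but the targets are not: the model sees `noisy_input` yet is scored against labels of `primary`, which forces it to separate the main speaker from the interference.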
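
The gated relative position bias can be sketched as single-head attention in NumPy. This follows one reading of the paper's gating, in which an update gate and a reset gate are computed from the query vector and rescale a learnable relative-position bias before it is added to the attention scores. The parameter names (`bias_table`, `u`, `w_vec`, `w_scalar`, `max_dist`) are hypothetical, and indexing the bias by a clipped offset is a simplification of the bucketed positions a real model would use.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def gated_rel_pos_attention(q, k, v, bias_table, u, w_vec, w_scalar, max_dist):
    """Single-head self-attention with a content-gated relative position bias.

    q, k, v:    (T, d_k) query/key/value matrices.
    bias_table: (2 * max_dist + 1,) learnable scalar biases d_{i-j}, indexed by
                the clipped offset i - j (simplified positional bucketing).
    u, w_vec:   (d_k,) vectors producing the update and reset gates.
    w_scalar:   learnable scalar applied to the reset-gated bias.
    """
    T, d_k = q.shape
    offsets = np.arange(T)[:, None] - np.arange(T)[None, :]  # offsets[i, j] = i - j
    d = bias_table[np.clip(offsets, -max_dist, max_dist) + max_dist]  # (T, T)

    # Both gates depend only on the content of query position i.
    g_update = sigmoid(q @ u)[:, None]     # (T, 1)
    g_reset = sigmoid(q @ w_vec)[:, None]  # (T, 1)
    r_tilde = w_scalar * g_reset * d
    r = d + g_update * d + (1.0 - g_update) * r_tilde

    scores = q @ k.T / np.sqrt(d_k) + r
    scores -= scores.max(axis=1, keepdims=True)  # numerical stability
    attn = np.exp(scores)
    attn /= attn.sum(axis=1, keepdims=True)
    return attn @ v, attn


rng = np.random.default_rng(0)
T, d_k, max_dist = 6, 8, 4
q, k, v = (rng.standard_normal((T, d_k)) for _ in range(3))
out, attn = gated_rel_pos_attention(
    q, k, v,
    bias_table=rng.standard_normal(2 * max_dist + 1),
    u=rng.standard_normal(d_k),
    w_vec=rng.standard_normal(d_k),
    w_scalar=1.0,
    max_dist=max_dist,
)
```

With an all-zero `bias_table` the bias term vanishes and the function reduces to ordinary scaled dot-product attention, which makes the contribution of the gated bias easy to isolate.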

Evaluation

Universal Representation Evaluation

WavLM was benchmarked on the SUPERB suite, which covers a broad range of speech processing tasks grouped into content, speaker, semantics, paralinguistics, and generation. WavLM improves results on almost all tasks and is strongest on speaker diarization, with a 22.6% relative reduction in error rate. Notably, WavLM Base+, although smaller, outperforms HuBERT Large, which points to the value of the denoising task and the more diverse pre-training data.
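
The 22.6% figure is a relative reduction, not a drop of 22.6 percentage points. A minimal sketch of the arithmetic, using hypothetical error rates that are not taken from the paper:

```python
def relative_reduction(baseline: float, new: float) -> float:
    """Relative error reduction (baseline - new) / baseline; positive means `new` is better."""
    if baseline <= 0:
        raise ValueError("baseline error rate must be positive")
    return (baseline - new) / baseline


# Hypothetical error rates, not from the paper: 5.00% -> 3.87%.
print(f"{relative_reduction(5.00, 3.87):.1%}")  # 22.6%
```

Here the absolute improvement is only 1.13 points, but relative to the 5.00% baseline it is a 22.6% reduction.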

Speaker Verification

WavLM was evaluated on the VoxCeleb dataset, with performance measured by the equal error rate (EER). WavLM Large achieved state-of-the-art results, including an EER of 0.986% on the Vox1-H trial list. This indicates that its representations capture speaker characteristics better than strong specialized systems such as ECAPA-TDNN trained on conventional acoustic features.
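
The EER is the error rate at the decision threshold where the false acceptance rate equals the false rejection rate. A minimal NumPy sketch that sweeps the threshold over sorted trial scores follows; the function name and the toy scores are illustrative, and the sketch ignores tied scores and any score calibration a real evaluation might apply.

```python
import numpy as np


def equal_error_rate(scores, labels) -> float:
    """EER for verification trials.

    scores: similarity per trial (higher = more likely the same speaker).
    labels: 1 for target (same-speaker) trials, 0 for non-target trials.
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels, dtype=int)
    n_tar = labels.sum()
    n_non = len(labels) - n_tar
    if n_tar == 0 or n_non == 0:
        raise ValueError("need both target and non-target trials")

    sorted_labels = labels[np.argsort(-scores)]  # trials by descending score
    # Accept the top-k trials for k = 0..N and count what gets accepted.
    tar_accepted = np.concatenate([[0], np.cumsum(sorted_labels)])
    non_accepted = np.concatenate([[0], np.cumsum(1 - sorted_labels)])
    frr = 1.0 - tar_accepted / n_tar  # target trials wrongly rejected
    far = non_accepted / n_non        # non-target trials wrongly accepted

    i = np.argmin(np.abs(far - frr))  # threshold where the two rates cross
    return float((far[i] + frr[i]) / 2)


trial_scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
trial_labels = [1, 1, 1, 0, 1, 0, 0, 0]
print(equal_error_rate(trial_scores, trial_labels))  # 0.25
```

In the toy example, accepting the top four trials accepts one impostor (FAR = 1/4) and rejects one genuine trial (FRR = 1/4), so the EER is 25%.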

Speaker Diarization

WavLM was tested on the CALLHOME dataset, where it outperformed recent diarization systems. WavLM Large reached a diarization error rate (DER) of 10.35%, improving on the previous best-performing methods. Its ability to handle multi-speaker conversations and background noise stood out as a key strength.
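
DER sums missed speech, false-alarm speech, and speaker confusion, divided by the total reference speech. The following is a minimal frame-level sketch assuming boolean speaker-activity matrices; the function name is hypothetical, the speaker mapping is found by brute force (so it only suits a few speakers), and no forgiveness collar is applied, unlike official scoring tools.

```python
import itertools

import numpy as np


def frame_der(ref: np.ndarray, hyp: np.ndarray) -> float:
    """Frame-level DER for activity matrices of shape (frames, speakers)."""
    n = max(ref.shape[1], hyp.shape[1])
    # Pad with silent speakers so both matrices have n columns.
    ref = np.pad(ref.astype(bool), ((0, 0), (0, n - ref.shape[1])))
    hyp = np.pad(hyp.astype(bool), ((0, 0), (0, n - hyp.shape[1])))
    n_ref = ref.sum(axis=1)  # active reference speakers per frame
    n_hyp = hyp.sum(axis=1)  # active hypothesis speakers per frame

    # Speaker mapping that maximizes correctly attributed speaker-frames.
    best_correct = max(
        (ref & hyp[:, list(perm)]).sum() for perm in itertools.permutations(range(n))
    )
    miss = np.maximum(n_ref - n_hyp, 0).sum()
    false_alarm = np.maximum(n_hyp - n_ref, 0).sum()
    confusion = np.minimum(n_ref, n_hyp).sum() - best_correct
    return float((miss + false_alarm + confusion) / n_ref.sum())


ref = np.array([[1, 0], [1, 0], [0, 1], [0, 1]])
hyp = np.array([[1, 0], [1, 0], [1, 0], [1, 0]])  # speaker B's turn attributed to A
print(frame_der(ref, hyp))  # 0.5
```

In the example, speech is detected in every frame, so miss and false alarm are zero, but two of the four reference speaker-frames are assigned to the wrong speaker, giving a DER of 50%.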

Speech Separation

On the LibriCSS benchmark, WavLM Large reduced the word error rate (WER) under every overlap-ratio condition, with a 27.7% relative WER reduction compared to the baseline Conformer model. This supports WavLM's ability to disentangle overlapping speech signals effectively.

Speech Recognition

WavLM was also evaluated for speech recognition on the LibriSpeech dataset under several labeled-data setups. It consistently matched or outperformed wav2vec 2.0 and HuBERT, reaching a WER of 3.2% on the test-other set with a Transformer language model (LM) when fine-tuned on the full 960 hours of labeled data.
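
WER counts the word substitutions, deletions, and insertions needed to turn the hypothesis into the reference, divided by the number of reference words. A minimal sketch using Levenshtein distance over whitespace-split words follows; the function name is illustrative, and it does not apply any text normalization a benchmark's scoring script might use.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dp[j] = edit distance between ref[:i] and hyp[:j] for the current row i.
    dp = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        prev_diag, dp[0] = dp[0], i
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            prev_diag, dp[j] = dp[j], min(
                dp[j] + 1,          # deletion of ref[i - 1]
                dp[j - 1] + 1,      # insertion of hyp[j - 1]
                prev_diag + cost,   # substitution or match
            )
    return dp[len(hyp)] / len(ref)


print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
# about 0.333: one substitution (sat -> sit) plus one deletion (the), over 6 words
```

For a test set such as test-other, the edit counts and reference word counts are usually summed over all utterances before dividing, rather than averaging per-utterance WERs.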

Implications and Future Work

WavLM has implications for both practical applications and theoretical research in speech processing. In practice, a single large-scale pre-trained model that serves diverse speech tasks could streamline development workflows and improve the end-user experience in real-world applications.

Theoretically, adding masked speech denoising to the pre-training process opens new directions for SSL research. The gains from a large, varied pre-training corpus also suggest that future work could further scale and diversify the training data to improve robustness and generalization.

In conclusion, WavLM sets a new state of the art across a broad set of full-stack speech processing tasks. Future research may focus on scaling model parameters and on joint training frameworks that combine text and speech data to exploit their complementary strengths.